User-to-user, social media and online search platforms will have to implement systems to reduce the risk of their services being used in an illegal or harmful way under new provisions that come into force this year.

The Online Safety Act 2023 (OSA), which sets out new laws aimed at protecting children and adults online, received Royal Assent on October 26, 2023. However, 2025 will be the year that most of its provisions come into force. The OSA places new obligations and duties on user-to-user (including social media) and online search platforms, including requiring them to implement systems and processes to reduce the risks outlined above.

Although the OSA was enacted in 2023, many of its provisions are not yet in force and are awaiting guidance from Ofcom, the UK regulator of online safety. Ofcom has published a roadmap on the implementation of the OSA (which will be in phases) and set out its objectives for 2025.

Phase 1

Illegal harms

On December 16, 2024, Ofcom “[fired] the starting gun on the first set of duties for tech companies” by publishing its first OSA policy statement, which now requires in-scope services to assess the risk of illegal harms on their platforms by March 16, 2025. Such assessment must be “suitable and sufficient”, meaning that providers will need to understand the risks illegal harms pose to users on their service, and consider how best to tackle them. The risk assessment duties will depend on whether the service is a user-to-user service or a search service.

In respect of the risk assessment duties, user-to-user services will need to consider:

- the risk of illegal content being present or disseminated on the service;

- the risk of the service being used for the commission of a priority offence; and

- the risk of the service being used to facilitate a priority offence (for example content or behavior that is not necessarily illegal, but which facilitates a priority offence).

Providers of search services have an obligation to conduct a risk assessment considering, among other things, the risk of individuals encountering illegal content in their search content, taking into account the way in which the search engine functions. Unlike user-to-user services, they will not have to consider the risk of the search service, or the search content that may be encountered on it, being used for the commission or facilitation of a priority offence.

Assuming Ofcom’s draft codes are approved (these are available on the policy statement page), from March 17, 2025, providers will need to protect users from illegal content and activity, in line with the OSA requirements and Ofcom codes and guidance.

Of particular importance will be service providers’ duties to prevent users from seeing certain illegal content, and to remove illegal content where it appears on the platform. “Illegal content” is defined in the OSA as “content that amounts to a relevant offence.”

The OSA sets out the relevant offences, which include priority offences and non-priority offences. These offences relate to existing criminal law, meaning that, before the OSA, they were generally relevant to offline acts only.

Priority offences are the most serious offences and include offences relating to:

- terrorism;

- harassment, stalking, threats and abuse;

- coercive and controlling behavior;

- hate offences (for example, hatred based on race, religion, sexual orientation, etc.);

- intimate image abuse (so-called “revenge porn”);

- extreme pornography;

- child sexual exploitation and abuse;

- sexual exploitation of adults;

- unlawful immigration;

- human trafficking;

- fraud and financial offences;

- proceeds of crime;

- assisting or encouraging suicide;

- drugs and psychoactive substances;

- weapons offences;

- foreign interference;

- animal welfare.

All service providers will need to take reasonable steps to prevent users encountering content that amounts to any of these offences.

Relevant non-priority offences are existing offences under UK law that are not priority offences and that meet certain other criteria. For example, non-priority offences do not include offences created by the OSA, IP offences, or offences already dealt with by certain other regulatory regimes (such as safety of goods or consumer protection). Under the OSA, service providers must take action to swiftly remove content which amounts to a relevant non-priority offence.

For details of all the steps required, Ofcom has set out a list of measures it is recommending for in-scope services. The document separates the actions by type of service (user-to-user or search service) and also by size of service. Further detail about illegal harms duties is available in Ofcom’s summary.

Ofcom has made clear that it will not hesitate to use its enforcement powers (which include the ability to criminally prosecute) in the event of non-compliance. Enforcement powers given by the OSA include the power to fine up to the greater of £18m ($22m), or 10% of qualifying worldwide revenue, and the power to apply to courts for business disruption orders that could limit access to platforms in the UK.
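The "greater of" test for the maximum fine can be illustrated with a short calculation. The sketch below is illustrative only: the function name and the sample revenue figures are hypothetical, amounts are in whole pounds, and it does not attempt to model how "qualifying worldwide revenue" is determined or how Ofcom would set an actual penalty beneath the cap.

```python
# Illustrative sketch only: the ceiling on a fine as described above,
# i.e. the greater of a fixed £18m and 10% of qualifying worldwide revenue.
# Names and sample figures are hypothetical, not taken from the OSA.

FIXED_CAP_GBP = 18_000_000      # £18m fixed limb
REVENUE_SHARE_PERCENT = 10      # 10% revenue limb


def maximum_fine_gbp(qualifying_worldwide_revenue_gbp: int) -> int:
    """Return the upper limit of a fine, in whole pounds."""
    if qualifying_worldwide_revenue_gbp < 0:
        raise ValueError("revenue cannot be negative")
    revenue_based_cap = (
        qualifying_worldwide_revenue_gbp * REVENUE_SHARE_PERCENT // 100
    )
    # The cap is whichever of the two limbs is larger.
    return max(FIXED_CAP_GBP, revenue_based_cap)


if __name__ == "__main__":
    for revenue in (50_000_000, 180_000_000, 1_000_000_000):
        print(f"revenue £{revenue:,} -> cap £{maximum_fine_gbp(revenue):,}")
```

On these assumptions, the fixed £18m limb sets the cap for any provider whose qualifying worldwide revenue is below £180m; above that level, the 10% limb takes over.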

Along with the policy statement, Ofcom also published its final Record-Keeping and Review Guidance and Online Safety Enforcement Guidance.

Phase 2

Child safety, pornography and the protection of women and girls

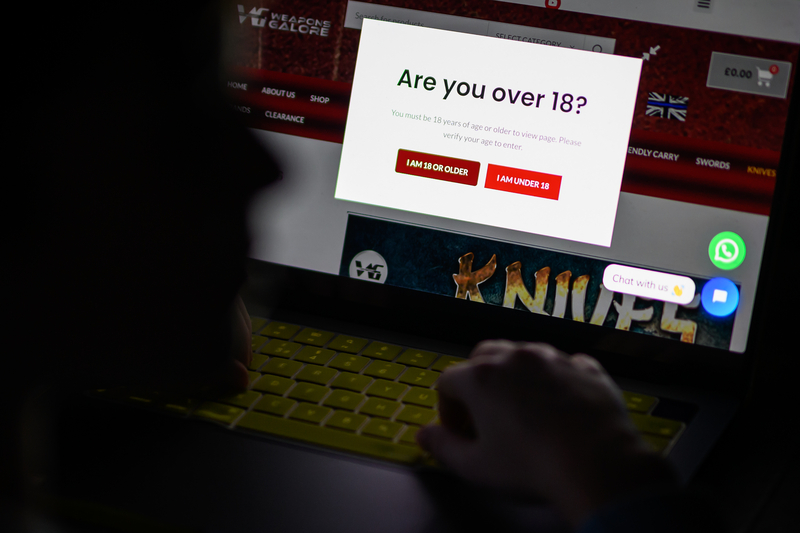

For Phase 2, Ofcom plans to issue final age assurance guidance for publishers of pornographic content in January 2025 (that is, in relation to Part 5 of the OSA). The draft guidance is available on the Ofcom website. It is expected that the relevant duties for such publishers will come into force around the same time.

In addition, final children’s access assessments guidance will be published in January 2025, with providers being given three months to carry out the process. The process will involve services (a) determining whether it’s possible for children to access the service or a part of the service, and (b) if it is possible, determining whether the child user condition is met in relation to the service or a part of the service.

The “child user condition” will be met in relation to a service, or a part of a service, if there is a significant number of children who are users of the service or of that part of it, or the service, or that part of it, is of a kind likely to attract a significant number of users who are children. Service providers will only be entitled to conclude that it is not possible for children to access a service, or a part of it, if age verification or age estimation is used on the service with the result that children are not normally able to access the service or that part of it.
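The two-stage children's access assessment described above can be sketched as a simple decision function. This is a simplified illustration rather than a compliance tool: the class and field names are hypothetical, and each yes/no input stands in for a judgment (for example, what counts as a "significant number" of children) that providers would need to make by reference to Ofcom's guidance.

```python
# Illustrative sketch only: the two-stage children's access assessment
# as summarized in this article. All names are hypothetical.
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceFacts:
    # Age verification or age estimation is used, with the result that
    # children are not normally able to access the service (or part).
    age_assurance_keeps_children_out: bool
    # A significant number of children are users of the service (or part).
    significant_number_of_child_users: bool
    # The service (or part) is of a kind likely to attract a significant
    # number of users who are children.
    likely_to_attract_significant_number_of_children: bool


def children_can_access(facts: ServiceFacts) -> bool:
    """Stage (a): access is treated as possible unless age assurance
    means children are not normally able to get in."""
    return not facts.age_assurance_keeps_children_out


def child_user_condition_met(facts: ServiceFacts) -> bool:
    """Stage (b): either limb of the child user condition is enough."""
    return (
        facts.significant_number_of_child_users
        or facts.likely_to_attract_significant_number_of_children
    )


def likely_to_be_accessed_by_children(facts: ServiceFacts) -> bool:
    """Both stages must be satisfied for the service (or part) to be
    treated as likely to be accessed by children."""
    return children_can_access(facts) and child_user_condition_met(facts)
```

The sketch makes one point from the text explicit: without age verification or age estimation that keeps children out in practice, stage (a) cannot be answered "no", so the outcome turns entirely on the child user condition.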

Services that are likely to be accessed by children must then carry out a children’s risk assessment within three months of Ofcom publishing its Protection of Children Codes and risk assessment guidance in April 2025. This means that such assessments should be completed by the end of July 2025, with child protection duties becoming enforceable around the same time.
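For planning purposes, the three-month window can be worked through with a small date calculation. The sketch below is an assumption-laden illustration: the helper name is hypothetical, the April 30, 2025 publication date is a placeholder (the article gives only the month), and adding calendar months is a rough planning aid, not a statement of how any statutory period is computed.

```python
# Illustrative sketch only: estimating a deadline that falls a given
# number of calendar months after a publication date.
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`, clamping
    to the last day of the target month where necessary."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


if __name__ == "__main__":
    # Hypothetical publication date; the article only says "April 2025".
    assumed_publication = date(2025, 4, 30)
    print(add_months(assumed_publication, 3))
```

With the placeholder date, the estimate lands at the end of July 2025, in line with the timing given above. Applying the same helper to the December 16, 2024 policy statement with a three-month offset gives March 16, 2025, which matches the illegal harms risk assessment date quoted in this article.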

Finally, in February 2025, Ofcom plans to publish its draft guidance on protecting women and girls. This will help platforms determine which content and activity disproportionately affects women and girls, and how such harms can be assessed and reduced.

Phase 3

Categorization and additional duties for categorized services

Certain user-to-user and search services will be categorized as Category 1, 2A or 2B services, and will be subject to additional duties. Such services will be determined based on thresholds set by secondary legislation. Phase 3 will focus only on the additional requirements that are applicable to these services.

Based on the thresholds set by the government, Ofcom plans to publish the register of categorized services in summer 2025. A few weeks after that, Ofcom will issue draft transparency notices, with final versions to follow soon after. Categorized service providers will be required to publish annual transparency reports based on the requirements in these notices.

The final steps for Phase 3 are likely to take place in early 2026. This includes publishing the draft proposals relating to the additional duties.

Important dates

- Required now: In-scope service providers must begin to assess the risk of illegal harms on their platforms, and consider how best to tackle them (illegal harms risk assessment) (Phase 1).

- January 2025: Final children’s access assessments guidance is expected to be published, meaning that in-scope providers must begin assessing whether their service is likely to be accessed by children (children’s access assessment) (Phase 2).

- January 2025: Part 5 final guidance is expected to be published (Phase 2).

- February 2025: Draft guidance on protecting women and girls is expected to be published (Phase 2).

- March 16, 2025: Illegal harms risk assessment must be completed (Phase 1).

- March 17, 2025: Providers will need to protect users from illegal content and activity from this date (Phase 1).

- April 2025: Protection of Children Codes and risk assessment guidance expected to be published, which will be relevant where a provider’s children’s access assessment concludes that the service is likely to be accessed by children. Such providers must begin completing a children’s risk assessment (children’s risk assessment) (Phase 2).

- July 2025: Children’s risk assessments must be completed, and the associated child protection duties will become enforceable around the same time (Phase 2).

- Summer 2025: Register of categorized services is expected to be published. Ofcom will then issue draft transparency notices, with final versions to follow soon after (Phase 3).

- 2026: Final implementation steps for Phase 3 are likely to take place in early 2026.

Comment

The OSA is a milestone piece of legislation for online service providers, and Ofcom has made it clear that it will not hesitate to use its new enforcement powers to tackle online harms. Ofcom’s Enforcement Director has confirmed that the regulator’s preferred approach to enforcement is a collaborative one – but businesses will need to act quickly in conducting adequate risk assessments that will enable them to maximize online safety for users.

Whilst the OSA became law in 2023, it is only in 2025 that we are likely to see enforcement begin as the various requirements come into force. It is therefore very important that in-scope services (and services that may be in scope) take action as soon as possible to determine what steps they need to take to comply.

Anna Soilleux-Mills is a commercial and regulatory partner in the Technology and Media team with a particular focus on the gambling, media and sport industries. Laura Bilinski is a senior associate in the Technology & Media Department and has particular experience advising clients in the gambling and online retail sectors.

Co-authored by Rachel Anderson, Trainee Solicitor at CMS.