“With the rapid evolution of powerful AI, we see the need for significant political attention.” That is the conclusion reached by a group of 12 members of the European Parliament (MEPs), who have launched a political call to action.

The move comes in the wake of an open letter, written by leading members of the global tech community, calling for AI development to be paused for six months.

Dragoș Tudorache, a Romanian MEP, one of the co-rapporteurs for the Artificial Intelligence Act and a signatory to the latest call, said: “AI is moving very fast and we need to move too. The call from the Future of Life Institute to pause the development of very powerful AI for half a year, although unnecessarily alarmist, is another signal we need to focus serious political attention on this issue.”

The Future of Life Institute letter sparked controversy after some of the experts whose research it cited questioned the conclusions it drew, and Tudorache’s remark that it was “unnecessarily alarmist” reflects concerns about a focus on hypothetical threats that may emerge in the future rather than real harms that can be identified today.

The AI Act, which the European Parliament is currently working on, is a risk-based legislative tool meant to cover specific high-risk use cases of AI while protecting people’s fundamental rights in accordance with European values. The MEPs acknowledge that a complementary set of preliminary rules will also be needed for the development and deployment of General Purpose AI systems.

Global summit

Within the AI Act framework, the MEPs said they are determined to provide a set of rules specifically tailored to foundation models, to steer developments in a direction that is human-centric, safe, and trustworthy.

They also call on European Commission President Ursula von der Leyen and US President Joe Biden to convene a high-level global summit on AI, with the goal of agreeing on a preliminary set of governing principles for the development, control, and deployment of very powerful AI, and they ask the principals of the EU-US Trade and Technology Council to set a preliminary agenda for the summit.

The MEPs want democratic countries to reflect on potential systems of governance, oversight, co-creation, and support for these developments. That includes companies and AI laboratories working together toward a greater sense of responsibility in their development work, increasing efforts to ensure that models are safe and trustworthy, and significantly increasing transparency toward regulators.

Pause AI developments

The Future of Life Institute (FLI) has followed up its original open letter with a policy brief called Policymaking in the Pause. “Regardless of whether the labs will heed our call, this policy brief provides policymakers with concrete recommendations for how governments can manage AI risks,” it says.

Policymaking in the Pause

Among its recommendations, the FLI wants to see laws and industry standards established that clarify and declare a ‘duty of loyalty’ and a ‘duty of care’ when AI is used in place of, or to assist, a human fiduciary.

The Policymaking in the Pause document recommends:

- Mandating robust third-party auditing and certification.

- Regulating access to computational power.

- Establishing capable AI agencies at the national level.

- Establishing liability for AI-caused harms.

- Introducing measures to prevent and track AI model leaks.

- Expanding technical AI safety research funding.

- Developing standards for identifying and managing AI-generated content and recommendations.

Although the new generation of advanced AI systems already presents well-documented risks, these systems can also manifest high-risk capabilities and biases that are not immediately recognized, the Institute warns.

“These systems may perform in ways that their developers had not anticipated or malfunction when placed in a different context. Without appropriate safeguards, these risks are likely to result in substantial harm, in both the near- and longer-term, to individuals, communities, and society.”

Italian ban

Recently, the rise of powerful AI tools such as ChatGPT has raised numerous regulatory concerns, including bias in AI and machine-learning tools and possible violations of the EU’s GDPR privacy law.

Citing concerns that ChatGPT was collecting personal data unlawfully and lacked age verification for children, Italy in March became the first western country to temporarily ban ChatGPT.

The Italian data protection authority, the Garante per la protezione dei dati personali, made the decision, stating that “the lack of whatever age verification mechanism exposes children to receiving responses that are absolutely inappropriate to their age and awareness, even though the service is allegedly addressed to users aged above 13 according to OpenAI’s terms of service”.

Besides the ban, the authority has opened an inquiry that could lead to a substantial fine, and has given OpenAI, the creator of ChatGPT, until the end of April to comply with its demands.

The order highlights that no information is provided to users and data subjects whose data are collected by ChatGPT, and that there appears to be no legal basis underpinning the collection and processing of personal data in order to ‘train’ the algorithms.

The order also notes that “OpenAI is not established in the EU, however it has designated a representative in the European Economic Area.” The Garante gave the company 20 days to notify it of the measures it has implemented to comply with the order; otherwise, a fine of up to €20m ($22m) or 4% of its total worldwide annual turnover may be imposed.

EDPB task force investigates ChatGPT

Meanwhile, the European Data Protection Board (EDPB) has launched a task force to investigate ChatGPT. The EDPB states that the move is intended to “foster cooperation and to exchange information on possible enforcement actions conducted by data protection authorities”.

The Norwegian Data Protection Authority (NDPA) applauded the initiative. “Several of our sister bodies have open cases against ChatGPT, and this working group gives us the opportunity to coordinate this work so that we have a common, European approach,” said Tobias Judin, head of the international section of the NDPA.

The Norwegian supervisory authority has also received ‘a good number of inquiries’ regarding ChatGPT. However, it will not open its own case.

Countries that have blocked ChatGPT

According to Digital Trends, Russia, China, North Korea, Cuba, Iran, and Syria have all blocked ChatGPT.

Whether through omissions, blocks, or bans, ChatGPT cannot be used in Afghanistan, Bhutan, Central African Republic, Chad, Eritrea, Eswatini, Iran, Libya, South Sudan, Sudan, Syria, and Yemen.

OpenAI maintains a list of the countries that can use its API.

US AI task force

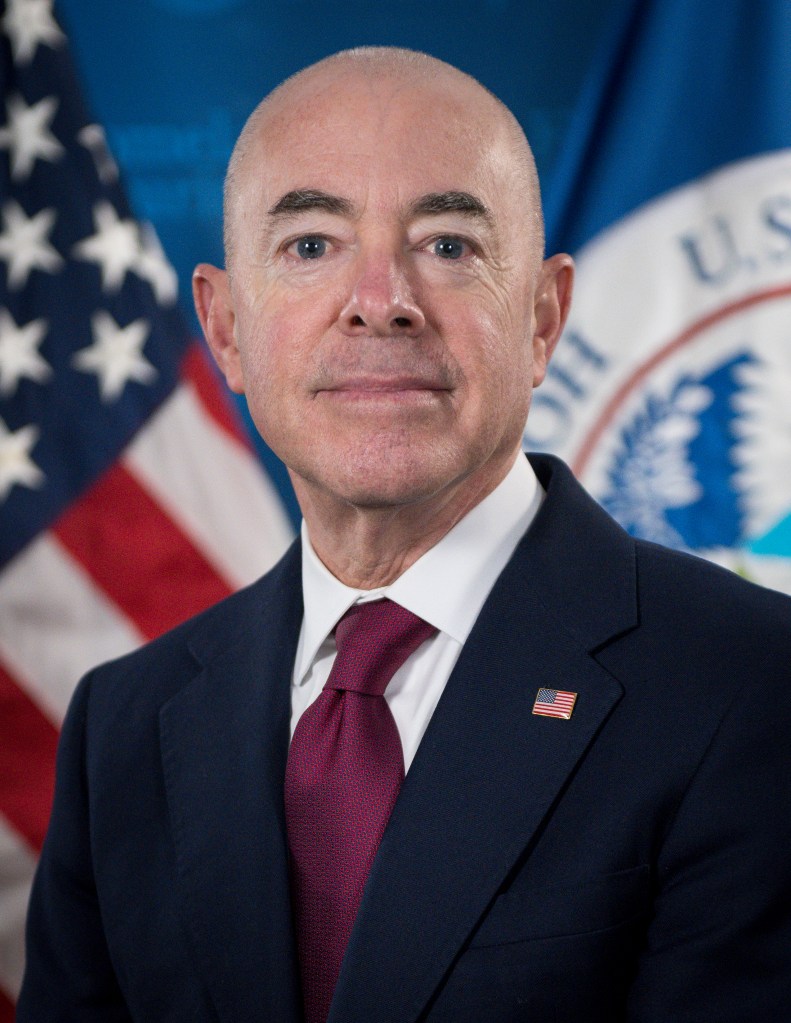

Earlier this week, US Secretary of Homeland Security Alejandro N. Mayorkas announced a new AI task force dedicated to tackling the challenges posed by AI and the evolving threat landscape.

“We are, after all, confronting a dramatically changed environment compared to the one we faced in March 2003. One that could change even more dramatically, as AI grips our imaginations and accelerates into our lives in uncharted and basically unmanaged fashion,” Mayorkas said.

This is the Department’s first-ever task force dedicated to AI, and it will drive specific applications of AI to advance critical homeland security missions by:

- integrating AI into supply chains and the broader trade environment to screen cargo, identify the importation of goods produced with forced labor, and manage risk;

- using AI to counter the flow of fentanyl into the US: “We will explore using this technology to better detect fentanyl shipments, identify and interdict the flow of precursor chemicals around the world, and target for disruption key nodes in the criminal networks”;

- applying AI to digital forensic tools to help identify, locate, and rescue victims of online child sexual exploitation and abuse, and to identify and apprehend the perpetrators; and

- collaborating with partners in government, industry, and academia to assess the impact of AI on the ability to secure critical infrastructure.

Global responsibility

The proposed EU AI Act was presented by the European Commission on April 21, 2021. It will become law when both the Council (representing the 27 EU member states) and the European Parliament have agreed on a common version of the Act.

With the call to political action, the MEPs stress that “the development of very powerful artificial intelligence demonstrates the need for attention and careful consideration”, and they want to see a global approach to this progress. The call was signed by:

- Ioan-Dragoș Tudorache, Renew Europe, Co-rapporteur, AI Act

- Brando Benifei, S&D, Co-rapporteur, AI Act

- Axel Voss, EPP, Shadow rapporteur, AI Act

- Deirdre Clune, EPP, Shadow rapporteur, AI Act

- Petar Vitanov, S&D, Shadow rapporteur, AI Act

- Svenja Hahn, Renew Europe, Shadow rapporteur, AI Act

- Sergey Lagodinsky, The Greens, Shadow rapporteur, AI Act

- Kim van Sparrentak, The Greens, Shadow rapporteur, AI Act

- Kosma Zlotowski, ECR, Shadow rapporteur, AI Act

- Rob Rooken, ECR, Shadow rapporteur, AI Act

- Eva Maydell, EPP, Rapporteur of the ITRE opinion on the AI Act

- Marcel Kolaja, The Greens, Rapporteur of the CULT opinion on the AI Act