“We call on all AI labs to immediately pause for at least six months the training of AI systems more powerful than GPT-4.” That is the call made in an open letter published by the Future of Life Institute.

The letter has been signed by leading figures in the tech world, including Elon Musk, Turing Award winner and professor Yoshua Bengio, Berkeley professor Stuart Russell, Apple co-founder Steve Wozniak and Skype co-founder Jaan Tallinn. Within a day of publication, more than 1,400 signatures had been added, and the signatory list had been paused “due to high demand”.

The letter says that “Humanity can enjoy a flourishing future with AI”, but warns that AI can also pose serious risks, as demonstrated by the use of ChatGPT to write working malware.

“Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.”

Contemporary AI systems are becoming increasingly human-competitive at common tasks, and the letter says this raises existential questions:

- “Should we let machines flood our information channels with propaganda and untruth?

- “Should we automate away all the jobs, including the fulfilling ones?

- “Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us?

- “Should we risk loss of control of our civilization?”

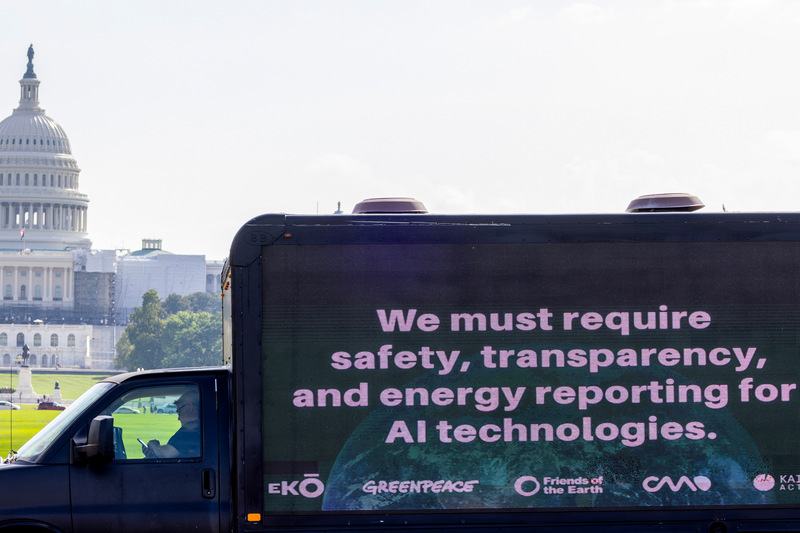

“AI research and development should be refocused on making today’s powerful, state-of-the-art systems more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal”, the letter continues.

Core risks

Another core risk is the current ‘out-of-control’ race between companies to develop and deploy ever more powerful AI tools. The letter says this is unsustainable, warning that the speed of development is creating systems that no one will be able to understand, predict or fully control.

That is why the letter urges a pause in development and calls instead for a focus on creating shared safety protocols. These protocols should be audited by independent experts to ensure that “systems adhering to them are safe beyond a reasonable doubt”. The initiative wants the pause to be public and verifiable, and to include all key actors. If such a pause cannot be enacted quickly, the letter urges governments to step in and institute a moratorium.

AI developers are also advised to collaborate with policymakers to speed up the development of a robust AI governance system. This should, at minimum, include:

- new and capable regulatory authorities dedicated to AI;

- oversight and tracking of highly capable AI systems and large pools of computational capability;

- provenance and watermarking systems to help distinguish real from synthetic and to track model leaks;

- a robust auditing and certification ecosystem;

- liability for AI-caused harm;

- robust public funding for technical AI safety research; and

- well-resourced institutions for coping with the dramatic economic and political disruptions (especially to democracy) that AI will cause.
