The following provisions of the EU AI Act have applied since February 2, 2025:

- the AI literacy requirement (Article 4); and

- the prohibition of certain AI practices (Chapter II).

The AI literacy requirement is set out in Article 4 of the AI Act. The Act makes clear that AI literacy, both among those who use AI systems and among those who are subject to them, is key to realizing the benefits of AI while protecting fundamental rights, health and safety, and democratic control.

OK, we hear you say, we get the picture, but what specifically is the new obligation on companies? Essentially, it is a training obligation focused on the individuals who deal with AI systems on a company's behalf. The obligation applies to organizations that develop, market or use AI systems in their business, so it is likely to apply to your company if it touches AI at all. (In the language of the AI Act, the obligation applies to “providers” and “deployers”.)

To comply with the obligation, companies will need to be able to demonstrate a sufficient level of AI literacy among their staff and other operators of AI systems, meaning that those individuals have the skills, knowledge and understanding needed to operate the systems. What counts as sufficient depends on the context in which the AI system is operated, so companies will need to consider both the individuals who are subject to the AI systems and the existing knowledge of the staff being trained. Consequently, off-the-shelf training is unlikely to be effective unless it is tailored to the business and the AI systems in question.

Experience in data protection compliance teaches us that the “weakest link” is often the human operating the system, so users of AI systems should be trained appropriately.

Prohibited practices

This section deals with the prohibition of certain AI practices and how this may affect your day-to-day use of AI.

Article 5 prohibits the placing on the market, the putting into service, or the use of AI systems for the purposes described below. Some of these use cases may already be deployed in security, recruitment or employment contexts.

What AI systems are prohibited?

Under Article 5, AI systems used for the following purposes are prohibited*:

- using subliminal, manipulative or deceptive techniques to distort someone’s behavior, causing them significant harm;

- exploiting vulnerabilities of individuals or groups due to their age, disability or specific social or economic situation, causing them significant harm;

- evaluation or classification of individuals or groups over a certain period of time for social scoring purposes, leading to detrimental or unfavorable treatment;

- assessing or predicting individuals’ risk of committing a criminal offence based solely on profiling or assessment of their personality traits and characteristics;

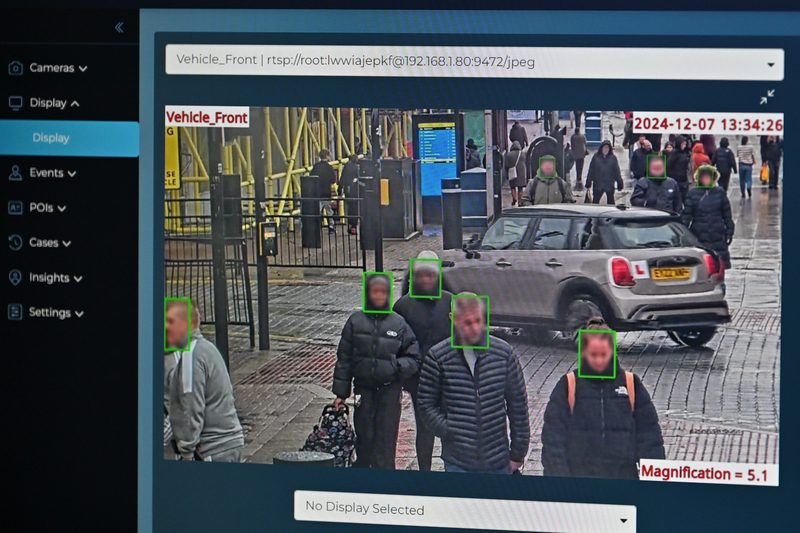

- systems to create or expand facial recognition databases through the untargeted scraping of facial images from the internet or CCTV footage;

- systems for inferring the emotions of a natural person in the workplace or in education institutions;

- categorization of individuals based on their biometric data to deduce or infer their protected characteristics;

- “real-time” remote biometric identification systems in publicly accessible spaces by law enforcement (except in certain circumstances).

*The above is a high-level list. There are conditions for the prohibitions to apply, as well as exemptions from them. For instance, “remote biometric identification systems” may be used for law enforcement purposes in certain circumstances, and “emotion inferring systems” may be used for medical or safety purposes.

Who does it apply to?

The prohibition applies to all players involved in the development and deployment of an AI system, regardless of where they are located. The geographic scope of the Act is broad: so long as there is an EU “link”, the EU AI Act is likely to apply. For example, a provider located outside the EU that sells its system to EU customers (likely deployers) will be subject to the AI Act. Furthermore, providers and deployers based outside the EU will also be caught where the output of the AI system is used in the EU.

Examples of prohibited use cases:

- Emotion recognition systems in the context of employment / recruitment.

- Emotion recognition systems in education, for instance, in a recorded exam.

- Assessments of potential criminality of individuals in the context of recruitment based on their social media use.

- Scraping of images from CCTV footage to create an internal face database for security purposes (not law enforcement).

- Use of subliminal techniques causing individuals to make purchases beyond their means.

- Social scoring for the purposes of granting or denying a service based on publicly available information.

- Use of biometric categorization systems to deduce or infer protected characteristics, such as race or religious beliefs, for diversity and inclusion purposes.

Next steps

Watch out for the use cases listed above. Even if an exemption applies, the relevant AI system is likely to be considered high-risk.

As part of your AI governance efforts:

- Build into your AI risk assessment tools a check on whether a proposed AI use case falls into any of the categories above.

- Educate your staff internally (especially those in HR/recruitment, marketing and security) so that AI systems for these use cases are not rolled out. Doing so dovetails with the AI literacy requirement under Article 4 of the EU AI Act.

- Add questions to your vendor due diligence procedures to ensure that you are not inadvertently engaging vendors that use prohibited AI systems.

Even if you are not caught by the EU AI Act, it is important for your AI governance efforts to understand the reasons behind the prohibitions and to put policies and procedures in place to ensure that you use AI responsibly.

Hazel Grant is co-head of the Technology and Data group at Fieldfisher, specializing in data protection and information law. Leonie Power is a partner in the Technology and Data group in Fieldfisher’s London office, specializing in data protection and privacy law, as well as emerging AI and broader technology regulation issues. Nuria Pastor is a UK-based Director in the Data & Privacy team. She advises global organizations on data protection compliance matters and also provides strategic advice, including acting as DPO for some clients.