*The title was written by AI. The rest of this article was not.

There is a lot of talk about artificial intelligence (AI) and how it will disrupt, and is already disrupting, the marketing, advertising and communications services (or marcomms) sector or, for instance, the financial services (FS) sector, where trust in a person’s digital identity or ID is key. One of the most talked-about issues is deepfakes.

Deepfakes are created by deep-learning AI technology to replicate convincing images, audio and videos. This technology is used to make “face swaps” in videos, or to create new images altogether. In the music industry, sound recordings, or vocal synthesisers, are being used as a basis to recreate the voices of famous artists.

Marketing and ad campaigns

For those looking to create marketing or ad campaigns in any sector, it will be important to consider what provisions to include in production and advertising agency services agreements to manage and control the use of AI or deepfake technologies in deliverables and creative content, whether for the FS or any other sector.

In addition, from a fraud prevention and risk management perspective, especially around the use of trust and ID verification and/or authentication mechanisms, it will be important to assess which two of the three potential criteria are used for the purposes of, for instance, the applicable FCA Regulatory Technical Standards (RTS) and Strong Customer Authentication (SCA).

SCA means authentication based on the use of two or more elements categorized as knowledge (something only the user knows), possession (something only the user possesses) and inherence (something the user is) that are independent, in that the breach of one does not compromise the reliability of the others, and which is designed, for example, in such a way as to protect the confidentiality of the authentication data.

In the context of deepfakes, this has particular relevance, especially in relation to inherence, in that biometrics and how a person “looks” and “sounds” may not be as easy to authenticate or verify as previously thought. For example, fraud detection systems often use the voice and tone of a customer to detect the likelihood of fraud. If a fraudster is deploying a GenAI tool to mimic a voice, it is possible that they may sound even more convincing than the genuine person. Adopting a culture of zero trust may therefore be essential to ensuring that risk levels are kept to an acceptable standard when faced with more sophisticated deepfakes.
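The two-out-of-three rule in the SCA definition above can be sketched in a few lines of Python. This is an illustration only, not a compliance implementation: the names `Factor` and `satisfies_sca` are hypothetical, and the sketch checks only that at least two distinct categories were verified. It does not model the independence or confidentiality requirements.

```python
from enum import Enum


class Factor(Enum):
    """The three SCA element categories (hypothetical names for illustration)."""
    KNOWLEDGE = "knowledge"    # something only the user knows
    POSSESSION = "possession"  # something only the user possesses
    INHERENCE = "inherence"    # something the user is


def satisfies_sca(verified_factors):
    """Return True when at least two distinct categories were verified.

    Assumption: each entry in verified_factors is a Factor that has
    already been checked successfully by some upstream mechanism.
    """
    return len(set(verified_factors)) >= 2


# A password plus a registered device covers two categories.
print(satisfies_sca([Factor.KNOWLEDGE, Factor.POSSESSION]))

# Two biometric checks (face and voice) still count as one category.
print(satisfies_sca([Factor.INHERENCE, Factor.INHERENCE]))
```

On this sketch, two inherence checks alone do not satisfy the rule, which is why a convincing deepfake of a face or voice should not, in principle, be sufficient on its own where a second, independent category is also required.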

Three key issues

How AI is monitored and regulated is yet to be determined in the UK and across Europe, but there is certainly exciting progress to be made. The key message is that the deployment of AI in the marcomms sector is multi-faceted. It is therefore important to consider the relevant issues outlined below regarding image and brand protection, privacy, data protection and confidentiality, before investing in GenAI creative outputs.

Deepfakes and talent in media

Deepfakes are becoming prolific in the media; while some have embraced positive innovation with this new technology (such as footballer Lionel Messi’s ad campaign with Lay’s crisps, shown below), it has also drawn criticism (Rick Astley brought a case against Yung Gravy for imitating his voice in a version of his track Never Gonna Give You Up).

In the film industry, there is an equally complex relationship between creativity, innovation and AI. On one side, the recent movie Indiana Jones and the Dial of Destiny included a youthful (or reverse-aged) Harrison Ford for the first 25 minutes as a result of deploying AI technology, known as “FRAN” (face re-aging network). FRAN is Disney’s proprietary technology and, presumably, Ford’s talent contract will have included some innovative wording around ensuring that the studio had all necessary rights to employ such technology in relation to his personality rights.

On the other side, there is a serious concern for the future of aspiring actors being replaced by deepfake-generated imagery. This is one reason for the SAG-AFTRA strikes in the United States, with actors protesting against the “existential threat” to the creative profession as they continue to fight against the prospect of studios using AI to replicate their image.

Things seem to be going in a positive direction for the industry as the proposed WGA settlement looks to bring an end to the five months of strike action taken by Hollywood screenwriters. Top of the agenda was the threat of AI (such as ChatGPT) putting writers out of work but in the proposal, a summary of which can be accessed here, it is clear that that will not be happening any time soon. As well as various other restrictions, studio bosses have agreed to guarantee that AI cannot be used to write, or rewrite, scripts or other materials.

Elsewhere, in the music industry, Warner Music Central Europe recently awarded a record deal to Noonoouri, a digital influencer who has already seen success in the fashion world. Although her songs were written by human songwriters, who will presumably receive royalties as they would for an ordinary recording deal, Noonoouri’s voice was generated using voice-altering AI.

On the flip side, following a warning of “voice theft”, the French association Les Voix, as well as other organisations and unions in Spain, Italy, Germany, Austria and the United States which represent voiceover artists, signed up to the United Voice Artists’ “Don’t Steal Our Voices” manifesto in May. This calls for the “protection of the work of actors and human creativity as a whole”. In France, especially, the use of an individual’s image, name and voice has specific protections afforded by French privacy law, including in a commercial context (and, as such, privacy rights are in some respects even stronger than in other jurisdictions).

For an industry that thrives on creativity, and for artists and actors whose jobs and reputation are at stake, the threat of deepfakes is particularly concerning. Although there is currently no legislation or case law in England and Wales that explicitly regulates AI* (or at least none that replicates China’s AI laws or the draft EU AI law), we discuss below some of the alternative approaches that are on offer.

(Note*: Existing laws may, and already do, apply to AI in any event: for example, discrimination and bias in employment law or, separately, automated decision-making and profiling under the UK and EU GDPR.)

Image and brand protection

In certain jurisdictions, such as the United States, image rights provide protection over an individual’s image, likeness or characteristics. In the EU, although the protection of image rights has not been harmonised, the European Court of Human Rights ruled in 2009 that the right to the protection of a person’s image is “one of the essential components of personal development and presupposes the right to control the use of that image”, and most Member States therefore recognize at least some form of legal protection based on a combination of IP rights (copyrights or ‘neighbouring rights’ in certain jurisdictions) and human/privacy rights.

For instance, in the Netherlands, the use of deepfakes can infringe upon the image rights of those involved. Generally, in a deepfake, a person’s face is recognizable and therefore qualifies as an image under Dutch copyright law (which is connected to the right to protection of personal life under the European Convention on Human Rights).

The Dutch Supreme Court has recognised the concept of ‘monetizable popularity’ among celebrities, meaning that they are not obligated to tolerate the commercial exploitation of their image without receiving compensation. Indeed, the publication of a deepfake featuring a celebrity in a prominent role could potentially be deemed to undermine or harm the way in which the portrayed person wishes to exploit their fame.

Similarly, in France, case law has extended the right to privacy under the French Civil Code to include a quasi-ownership right allowing an individual to commercialise their own image which may, subject to formal conditions designed to protect the individual, be assigned or licensed. There is even an economic and moral right component attached to a person’s image when it is infringed. The French legal regime protecting image rights therefore bears some similarity to the French copyright law regime. In addition, performers of phonograms and videograms such as singers or actors have their own proprietary and moral rights under the French Intellectual Property Code.

In the UK, image rights are not recognised in their own right, although the Courts have sometimes recognised commercial misappropriation of a person’s publicity. Instead, we must look to alternative protection afforded under English law, such as IP rights.

Copyright, which protects a whole range of works including images, videos and sound recordings, initially springs to mind. However, while deepfake technology uses machine learning technology to analyze and ‘train’ on existing content as a basis to create new videos and sound recordings, it does not create direct copies. As a result, it’s much harder to substantiate a claim for copyright infringement. Although there are other IP issues, such as trade mark infringement and passing off that arise from using content as a means of AI training data, those are outside the scope of this article.

Performers’ rights are particularly relevant to deepfakes. In the UK, performers are granted two sets of rights, namely the right to (a) consent to the making of a recording of a performance; and (b) control the subsequent use of such recordings, such as the right to make copies. Singers are granted these rights over their sound recordings, and actors over their performance.

However, commentators believe that AI-generated performance synthetization challenges the current UK framework because, as discussed, performances are reproduced without generating an actual ‘recording’ or a ‘copy’. Therefore, the resulting deepfake arguably falls outside the current scope of protection conferred on performances. Similarly, while moral rights offer various protections, such as the right to object to false attribution, they are often waived in contracts and do not stop the generation of deepfakes in the first place.

Privacy, data protection and confidentiality

Image, voice and likeness are protected by data protection law under the UK and EU GDPR. Before utilizing or processing content which may comprise personal data, developers and creatives should consider whether that processing may be carried out in line with the applicable lawful bases for processing personal data under the UK or EU GDPR; or, in the absence of another applicable lawful basis, seek consent from the individual performer or talent (or data subject) and/or identify and rely upon a suitable, valid exception or derogation.

It is not uncommon for talent to rely upon the protection afforded by data protection, privacy and/or confidentiality (or misuse of private information) in addition to or in place of IP rights. Such rights may afford a strong safe harbor, as was demonstrated in the case of Naomi Campbell, who was photographed whilst entering/leaving a certain medical clinic (note that special category data, which includes medical data, is afforded special protection for obvious reasons). Protection may even arise under exclusive rights of contract granted to third parties. This was the case with the wedding photographs taken unlawfully at the reception of Michael Douglas and Catherine Zeta-Jones (who won their claim against Hello! magazine after it released images of their special day without their consent).

What else do I need to know?

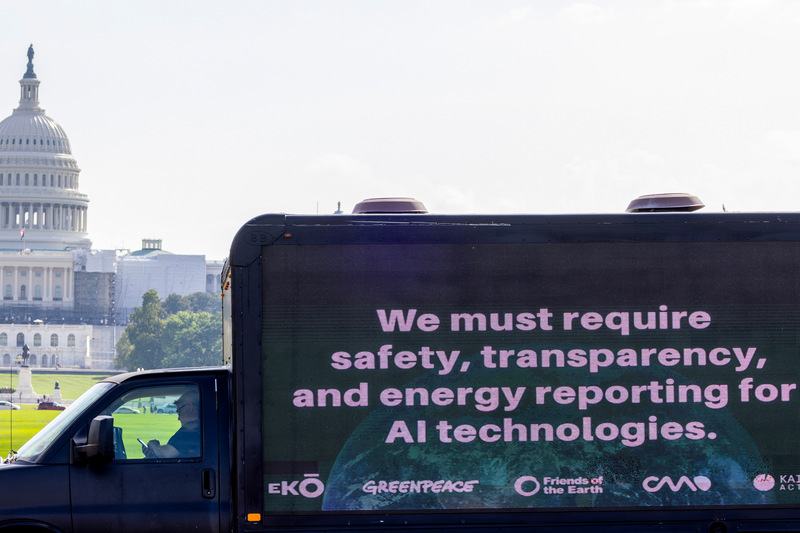

Amongst much talk of becoming an “AI leader”, the UK government is conscious of the need to act in this area and has recently produced an Interim Report: The Governance of Artificial Intelligence (published on August 31, 2023). This report specifically addresses what the government calls “The Misrepresentation Challenge” as it acknowledges the threat that deepfakes, and the use of image and voice recordings, pose to people’s reputations.

The good news is that progress is being made:

- The final text of the EU AI Act is being negotiated, which includes various obligations on providers of AI systems depending on the level of risk their technology may pose, including a transparency obligation to disclose the fact that material has been artificially generated or manipulated, which would provide individuals viewing deepfakes with the opportunity to make an informed choice and distance themselves from certain situations.

- In the United States, a blueprint for an AI Bill of Rights has been published setting out five principles for using AI, including that citizens must be informed when AI technology has been used.

- Leading organisations in the industry are also producing their own guidance; YouTube recently set out its guiding principles for partnering with the music industry.

- The Beijing Treaty, which the UK government intends to ratify and which is currently undergoing consultation, affords various rights to performers including the right to object to any distortion or modification of their performances that may prejudice their reputation.

However, for those at the heart of the marcomms sector whose rights and reputation are at stake, or industries such as financial services where trust in identity is key, guidelines and acknowledgment are not enough and it is clear that the law needs reform. The UK will host a global summit on AI safety at Bletchley Park in November this year, so watch this space.

Philip James is a partner in the Global Privacy & Cyber Security Group. Indradeep Bhattacharya is a partner in the global IP practice specialising in patent and technology disputes. Eversheds Sutherland