A multinational company lost HK$200m ($26m) after scammers fooled an employee in Hong Kong with a fake group video call created using deepfake technology, the South China Morning Post reported on Sunday.

The scammers fabricated representations of the company’s chief financial officer and other people in the video call using public footage; they convinced the victim to make a total of 15 transfers to five Hong Kong bank accounts, the paper reported, citing the city’s police.

“(In the) multi-person video conference, it turns out that everyone [he saw] was fake,” senior police superintendent Baron Chan Shun-ching told the city’s public broadcaster RTHK.

Chan said the employee had grown suspicious after he received a message that was purportedly from the company’s UK-based chief financial officer. Initially, the employee suspected it was a phishing email, as it talked of the need for a secret transaction to be carried out. But the employee put aside his early doubts because other people in attendance on the video looked and sounded just like colleagues he recognized, Chan said.

Police didn’t identify the firm or the employees.

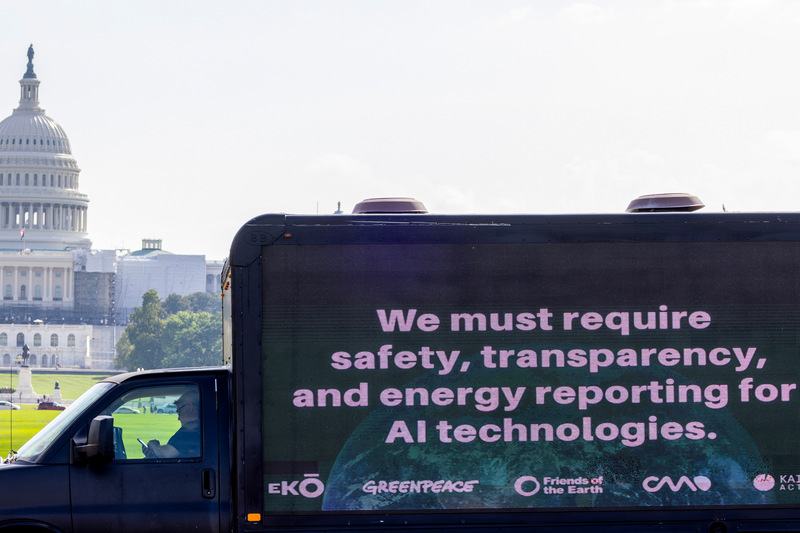

Deepfake consternation – lawmakers and others

Last year, seeing realistic fake images of himself and his dog prompted President Joe Biden to act: he issued an executive order to address the risks associated with artificial intelligence.

The recently signed agreement between Hollywood studio executives and the Screen Actors Guild also addressed growing concerns about artists being replaced by deepfakes.

And late last month, Senate Majority Whip Dick Durbin (D-IL), Chair of the Senate Judiciary Committee; Senator Lindsey Graham (R-SC), Ranking Member of the Senate Judiciary Committee; and Senators Amy Klobuchar (D-MN) and Josh Hawley (R-MO) introduced the bipartisan Disrupt Explicit Forged Images and Non-Consensual Edits Act of 2024 (DEFIANCE Act), legislation that would hold accountable those responsible for the proliferation of nonconsensual, sexually explicit “deepfake” images and videos.

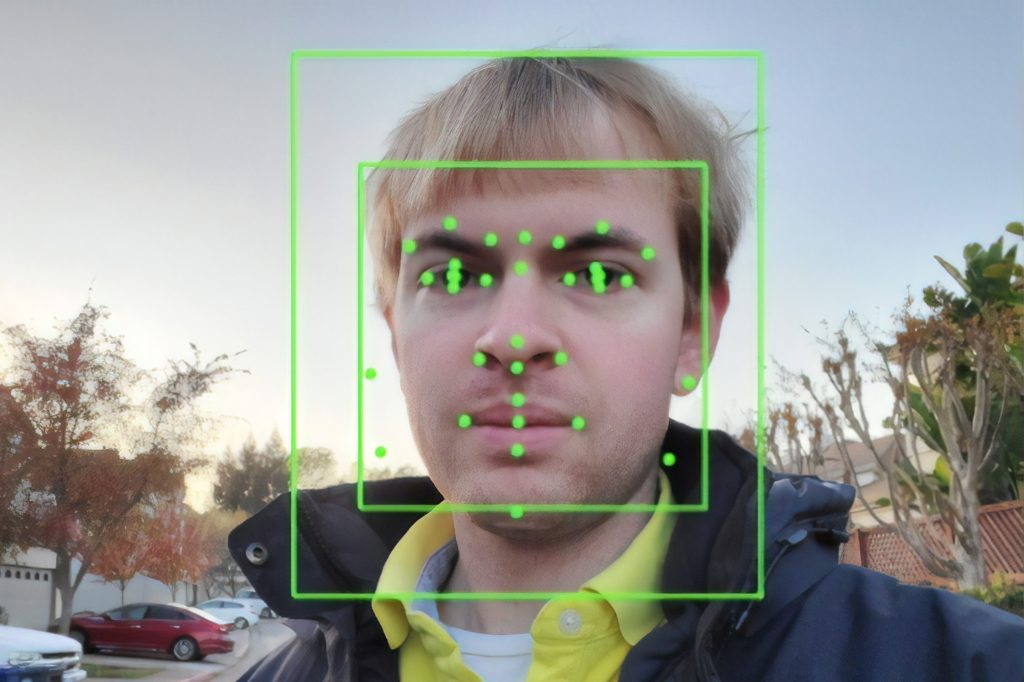

Training is important

The word “deepfake” is a combination of “deep learning” and “fake”, and deepfakes are typically falsified pictures, videos, or audio recordings. Sometimes the people in them are computer-generated, fake identities that look and sound like they could be real people. Other times the people are real, but their images and voices are manipulated to show them doing and saying things they didn’t do or say.

The FBI warns that in the future deepfakes will have “more severe and widespread impact due to the sophistication level of the synthetic media used”.

The SANS Institute says each form of deepfake – still image, video, and audio – has its own set of flaws that can give it away, helping you avoid being duped. (To be sure, the increasing sophistication of bad actors using AI to create deepfake videos and images is making this more challenging.)

Researchers at the Massachusetts Institute of Technology have developed a question list to help you figure out if a video is real, noting that deepfakes often can’t “fully represent the natural physics” of a scene or lighting.

Your business might not be equipped to hire a deepfake expert on staff, but everyone should learn the basics of identifying the fakes in corporate cybersecurity training and feel empowered to report such instances, so the company can promptly defend itself.