Regulation of social media platforms is once again a central topic of debate in the wake of rioting in towns and cities across the UK that was sparked by misinformation and hate speech online.

The UK’s media regulator Ofcom is reported to be ramping up efforts to recruit staff to help with its online safety work under the new Online Safety Act. But questions are already being raised about whether the Act is fit for purpose, adding to doubts over where limiting harm ends and limiting free speech starts. And these are questions facing regulators and governments globally.

Ofcom told the Financial Times it currently has more than 460 people working on implementing the provisions of the Act, and plans to have 550 by next March. That would be over a third of the agency’s total staff. But does the regulator face an almost impossible task?

X, Facebook, Signal, Telegram

London Mayor Sadiq Khan has already questioned whether the Act is “fit for purpose” after a wave of violence, which has so far led to more than 1,000 arrests, was sparked by social media posts about the identity of the man who stabbed three children at a dance class in the northern town of Southport. So-called ‘influencers’ spread conspiracy theories and false information on mainstream social media channels such as X and Facebook, while more niche channels such as Signal and Telegram are known to have been used by far-right organisations to amplify those messages and organise violent gatherings.

Of the many problems facing the regulator, the sheer scale of the issue, combined with the willingness of the platforms themselves to spread inflammatory material and falsehoods, is perhaps the most challenging. X, formerly Twitter, has garnered much attention because of owner Elon Musk’s own role in reposting disinformation after stripping back the platform’s trust and safety teams.

The UK’s Online Safety Act describes itself as “a new set of laws that protects children and adults online”. Protecting children was given particular emphasis during the Act’s passage into law, with the stated aim being to “make the UK the safest place in the world to be a child online”. This arguably highlights one major issue: “online” has no national boundaries.

New duties

The main thrust of the legislation is to put “a range of new duties on social media companies and search services, making them more responsible for their users’ safety on their platforms.” Fines for companies that fail to comply could be up to £18m ($23m) or 10% of qualifying worldwide revenue, whichever is greater.

In setting out its strategy for implementing the Act, Ofcom has identified three phases:

- setting out codes and guidance on illegal harms duties – codes currently the subject of consultation with final decisions expected imminently;

- setting out detailed duties around the protection of children and of women and girls, particularly with regard to pornographic content;

- setting out guidelines on transparency, user empowerment and the duties to be imposed on certain categorized services.

It’s clear where the focus is, and Cabinet Minister Nick Thomas-Symonds agreed with Mayor Khan’s critique. But he quashed speculation that the new government could be reconsidering aspects of the Act, including reviving measures to tackle ‘legal but harmful’ content. The UK government’s view is that social media companies need to take the lead, with a spokesperson for Prime Minister Keir Starmer saying: “We’re very clear that social media companies have a responsibility ensuring that there is no safe place for hatred and illegality on their platforms, and we will work very closely with them to ensure that that is the case.”

That stance is perhaps fuelled by understandable wariness about governments being seen to police free speech. Musk has already labelled Starmer “the biggest threat to free speech in the UK’s history”, while the Electronic Frontier Foundation has called the Act “a massive threat to online privacy, security, and speech.” The EFF fears the Act undermines end-to-end encryption, mandates general monitoring of all user content, and opens the door to repressive measures by governments across the world.

Free speech

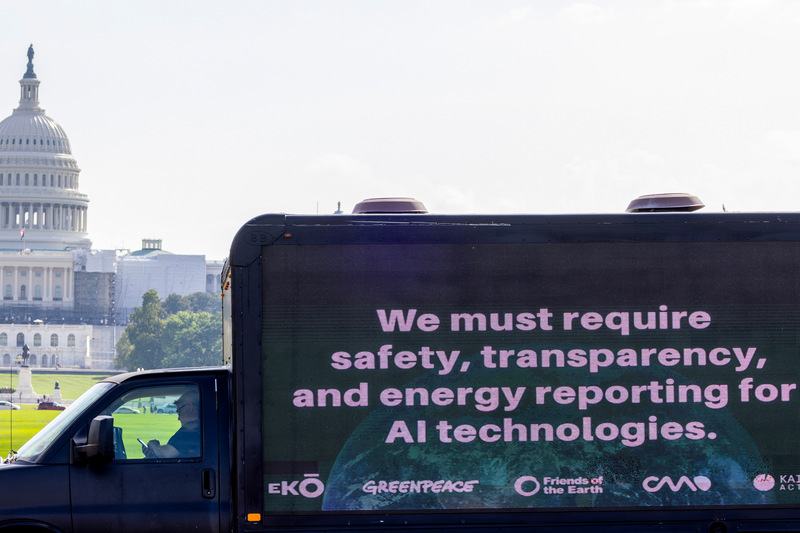

Measures such as the Online Safety Act have traditionally been harder to implement in the US, where a more absolutist attitude to freedom of speech has held sway. But the attempted insurrection on January 6, 2021, itself fuelled by material circulated on social media, prompted a debate similar to the one now under way in the UK in the wake of the recent riots.

Writing for the US public policy research organization RAND, Luke J Matthews, Heather T Williams and Alexandra T Evans said “claims to free speech also have shielded actors that threaten democratic civil society” and “without some kind of government intervention, social media companies are unlikely to self-regulate effectively.”

One of the suggestions they make is that: “Just as banks are required to report currency transactions over $10,000, social media companies could be required to report, with original raw data, the content most amplified by their algorithms every week.” Their piece is a well-considered and much-needed examination of the costs and benefits of various regulatory options.

Without the active and willing participation of the platforms themselves, it is hard to see any effective solution emerging. The question, then, is how far governments can and should go in encouraging the platforms to take an active role and persuading them that cooperating would be in their own interest.

The conclusion reached by the RAND researchers is worth bearing in mind. “For evidence that the status quo isn’t working, and that social media isn’t getting better on its own, just look online.”