This is a transcript of the podcast “LeighAnne Thompson shares a lawyer’s responsible approach to AI,” a discussion between GRIP’s US Content Editor, Julie DiMauro, and LeighAnne Thompson, Associate Director of Seattle University Law School’s Technology, Innovation Law, and Ethics Program.

[INTRO]

Julie DiMauro: Greetings, everyone, and welcome to an Intelligence and Practice podcast. I am Julie DiMauro, US Content Manager in New York.

The podcast is being brought to you by the Global Relay Intelligence and Practice service, which we call GRIP, a service that features a daily website of articles on a variety of compliance and regulatory topics, plus podcasts and other deep dives into trends and best practices.

You can find the service at grip.globalrelay.com, and we hope you’ll connect with GRIP on LinkedIn.

Today’s podcast session is on the ever-evolving artificial intelligence landscape and some of the top challenges in this area for lawyers in particular. And I’m quite pleased to say that an expert in this arena has joined us today: LeighAnne Thompson, Associate Director of Law Library Digital Innovation at Seattle University’s law school, Associate Director of the university’s Technology, Innovation Law, and Ethics (TILE) program, and an adjunct professor at the law school.

LeighAnne, can you tell us, please, a little bit about your current position, research areas, and background?

LeighAnne Thompson: Thanks, Julie, I appreciate that introduction. It’s a pleasure to be here. Yes, as you mentioned, I wear several hats here at Seattle University School of Law. I actually joined the faculty back in 2016 as a law librarian and quickly moved into roles at the intersection of technology, legal practice, and professional responsibility. I’ve been teaching a class called Law, Practice, Technology, and Ethics since 2019, where we really focus on how lawyers are using technology in the practice of law.

And when I talk about technology, I am talking about everything from Microsoft Word to AI, to blockchain, eDiscovery, the whole gamut of how lawyers are using technology. And I’ve been saying for years that I think it’s impossible to teach technology without also teaching ethics and professional responsibility. And I also think it’s impossible to teach professional responsibility now without talking about technology and how lawyers are using it. So I’ve been very lucky to be here at an institution that is so innovative.

We now actually have a PR (professional responsibility) tech lab. Students who are taking professional responsibility can now take this one-credit lab, where they can get their hands dirty, actually touch the technology, and explore how it intersects with professional responsibility. So that’s it in a nutshell. I teach in those areas, but I’m also trying to find ways to innovate from the law library, to promote innovation in technology and in the way students are using it, and also to find new ways to educate our future lawyers.

Julie DiMauro: LeighAnne, thank you for that. It’s very interesting that the artificial intelligence pedagogy has revolved around the professional responsibility coursework, looking primarily at the governance of AI.

LeighAnne Thompson: Yes, it is interesting. This summer I taught a course for the first time called AI and the Practice of Law. The students were fairly surprised at how much using technology requires them to look to the rules of professional conduct, but also to broader ethical rules.

Julie DiMauro: Absolutely. So let’s get right to it and talk about how attorneys right now are using AI.

LeighAnne Thompson: Yes. Before we dive into that, I think it’s important to distinguish what we mean when we talk about artificial intelligence. There is symbolic artificial intelligence, or what some people call good old-fashioned artificial intelligence, versus generative artificial intelligence, which has been the focus of the hype since ChatGPT was released to the public.

Lawyers have been using symbolic AI for decades, and they’ve been using it because it relies on logic and rules that can make decisions or predictions. They’ve been using it for things like document review and eDiscovery. A lot of lawyers don’t even realize that symbolic AI is embedded in the big legal research platforms. So if they’ve been using those platforms, they’ve been using AI.

But I think the focus right now is more on generative AI. This is a different type of AI that can actually generate new content. Lawyers are using it for all types of things, and the uses evolve every day, but I see lawyers using it for things like summarizing documents.

I was talking with a commercial litigator who says he uses it for drafting jury selection questions. For example, if he’s going into a rural part of the US and doesn’t necessarily know the dialect well or how best to communicate with people in that area, he can take his questions, run them through ChatGPT or Claude or whatever platform, and ask it to rephrase those questions in the local dialect. I thought that was powerful.

We’re also seeing a lot of lawyers using it to get to a first draft, or for creative brainstorming right before going into a client interview or a negotiation. But what we’re seeing in the news, of course, is that lawyers are using tools like ChatGPT for legal research and really getting themselves into trouble.

So I think it’s important to know that generative AI isn’t a magic wand you can just wave over all of these legal tasks. We need to figure out what symbolic, old-fashioned AI is good at versus what generative AI is good at, identify the strengths of each, and leverage those tools where they can best be applied.

Julie DiMauro: And you had mentioned using AI and exploring it through the professional responsibility lens. How are you specifically using it in your legal work, LeighAnne?

LeighAnne Thompson: In my own legal work, of course, I’ve been using symbolic AI for years for things like legal research and eDiscovery. But with the advent of GenAI, I am trying to use it in as many ways as I can, more for experimentation than anything else. For example, if I do a big legal research project, I will also run the same query through different generative AI tools to see the difference.

That’s been interesting. What I have found is that there are still a lot of hallucinations, meaning made-up facts or cases, and the output still needs a lot of oversight. That said, it’s improving every day, and I think it will get better over time.

What I’m really using it for right now, and have found it to be extremely powerful at, is summarizing documents. For example, I wanted a chart summarizing all of the ethics opinions from the ABA and state and local bars that dealt with generative AI. I was able to upload all of those, let’s say 20 opinions, and ask ChatGPT to summarize them in a chart for me.

And it did it beautifully. It laid out the chart by jurisdiction, listed the five rules of professional conduct that were most frequently addressed, and put a check mark in each box so that I could see which rules each opinion addressed. All of that happened in under five minutes.

Julie DiMauro: So a lot of information that saved you a lot of research time.

LeighAnne Thompson: Yes, to be honest, it saved me a lot of reading time as well as drafting time, so that I could spend my time quickly reviewing the documents to make sure the output was accurate and that the chart correctly reflected what was in each opinion.

Julie DiMauro: Absolutely. Now, you talked about hallucinations. Can you talk a little about the limitations of AI that you’re concerned people, and especially lawyers, may not fully appreciate?

LeighAnne Thompson: I think symbolic AI and generative AI have different limitations. With symbolic AI, it’s important that lawyers understand the bias in the underlying data sets and in the algorithms used to make those predictions or to apply the rules.

But with generative AI, I think there are some limitations that lawyers in particular may not understand. The biggest one is that generative AI tools are not search and retrieval tools. I see a lot of students and lawyers treating these tools like a database, trying to get information out of them, rather than understanding that they are statistical predictors based on linguistics.

These tools predict the next word statistically. It is not a search and retrieval tool. And I think if lawyers don’t have that very basic understanding, that’s where we get in trouble with using these tools for things like legal research and not understanding why the hallucinations happen.

I also think lawyers need to be aware of confidentiality and privacy issues with these tools. If you enter something into a prompt, you need to understand how that prompt will be used, how it will be transmitted, whether it will be encrypted, and where it will be stored.

These are questions we should all be asking before we use these tools, while being very careful not to inadvertently disclose confidential information. And of course, I really think lawyers need to think about using these tools within the framework of the rules of professional conduct.

I always frame my classes around Rule 1.1, Competence, and its Comment 8, right? That comment says lawyers should keep abreast of relevant technology and its benefits and risks. So we really focus on the benefits versus the risks of each type of technology we cover in my courses.

Julie DiMauro: And along with hallucinations, there have obviously been some instances and discussion of bias and the spread of misinformation. Are those some of the things that keep you up at night? And in thinking about that, how important is it to keep a human in the loop, and what does that really mean in this situation?

LeighAnne Thompson: Yes, the spread of misinformation really does keep me up at night. That’s because these tools are so accessible and easy to use, and the line between what’s real and what’s fake has been completely blurred. It’s very difficult to tell what’s real and what’s not. Without a regulatory framework, I feel we’re going to have a lot of instances where misinformation, for example fabricated evidence, gets to the courts.

How is a judge or a jury supposed to decide what’s real and what’s not, when these tools are producing very sophisticated, realistic outputs that are not in fact real? And I think the second part of your question was whether keeping a human in the loop is necessary, or is the answer to this?

Julie DiMauro: Yes, is it the answer for it? And what does that look like, human in the loop? Is that double checking all of the output, doing some extra research on the output? Is it coming up with better prompts over time? What does that look like?

LeighAnne Thompson: I think having a human in the loop is absolutely crucial for many reasons, and I think what that means will change over time. What I have seen is that we’re moving toward using some smaller language models to do some of the double-checking and to set up guardrails around the outputs, but that’s not enough, right? We need humans who are constantly supervising, keeping the human element there and making sure that the benefits outweigh the harms.

I get really concerned when I think about these tools being used to determine individual freedoms, things like sentencing or child custody decisions. I don’t think we should be relying on these tools to make those types of decisions without a human in the loop.

I think there’s more to it than that, though. We need more collaboration around the design and deployment of these tools. What I mean by that is that sometimes there’s a race to market, where engineers design these tools and put them out for use. And again, they’re very accessible.

They’re very easy to use. But what we need is ethicists and lawyers at the table during design and deployment, to make sure we have ethical frameworks around these tools.

Julie DiMauro: I can see the need for it. While we’re talking about the promise and the peril of AI, what are your thoughts, LeighAnne, on whether the promise outweighs the perils? Is it too early to make that call?

LeighAnne Thompson: That is such a complex question. I personally think it’s too early to make that call, because I don’t think we fully understand what the perils are. Without transparency about the harms these technologies could do, it’s just too early to tell.

Julie DiMauro: That makes sense. Let’s focus on the promise, then: the benefits. What are the most compelling benefits that you see AI bringing to the legal profession?

LeighAnne Thompson: I think we’ve already seen so many compelling benefits in terms of streamlining workflows and reducing the time spent on some of the menial tasks that junior attorneys have traditionally been assigned.

I sometimes age myself when I talk about mergers and acquisitions 20 years ago, when you had to go out to a target company and spend weeks in a basement manually going through files and looking for language. Now that’s all done through AI, and it’s done a lot more efficiently.

And, I’m certain, a lot more accurately in most cases. I think we’re going to continue to see the legal profession look to these tools for tasks that can be automated: client intake, for example, document review, eDiscovery, and again, getting to a first draft. So I do think there is a lot of promise there, and lawyers can then spend their time on more creative tasks and on building relationships with clients.

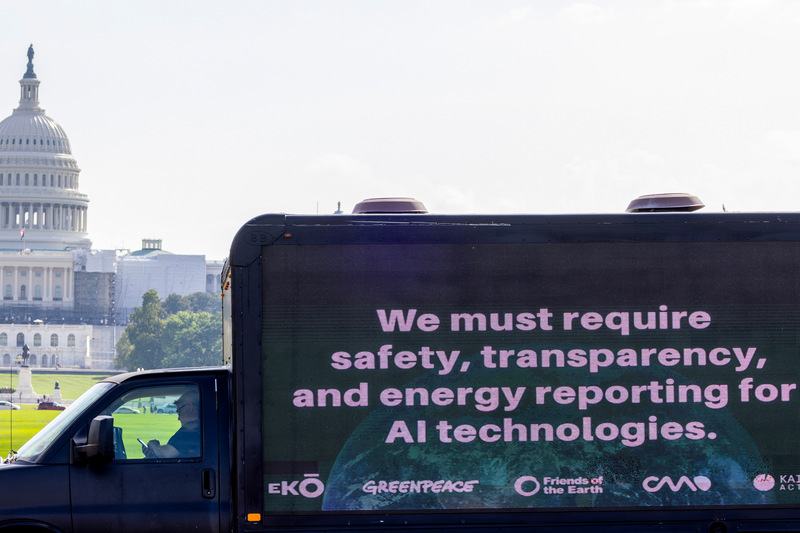

Julie DiMauro: While we’re talking about the functional capabilities of AI, and as we discuss the guardrails that need to be built around it, I do want to touch on the energy consumption involved here.

There’s the question of whether we are using too much energy to deploy these tools, to create the technology, and to advance it. Where are we today? What are your thoughts on that?

LeighAnne Thompson: I think that definitely has to be part of the conversation whenever we talk about the ethics of using generative AI. These large language models use a lot of computational resources, which means a lot of energy. But as I mentioned a few minutes ago, I think we will start using smaller language models that are more task-specific.

These would originally be trained on the same big dataset a large language model was trained on, for the linguistic piece, but then brought down to a smaller model that uses a lot less energy and can also run locally on a device instead of in the cloud.

That said, I think these are important considerations for lawyers when they’re using these tools. I have some students who refuse to use ChatGPT for this very reason. They say, “I’m not going to contribute to the harm to our earth.” So I think we need to be asking these questions.

I met with a legal vendor recently and asked them how much energy their tools consume, and they weren’t able to answer. So I think we need to keep prodding, so that we have the information we need to make informed decisions about the use of these tools.

Julie DiMauro: Turning now to some predictions for compliance and legal professionals: how do you think they’ll be using AI tools, say, five years from now?

LeighAnne Thompson: Oh, Julie, such a great question. It’s so hard to predict, because one year ago I could never have predicted how far we have come in one year. These tools are developing rapidly. But what I hope is that in five years, these tools will be used in a human-computer interaction model, really as assistants for attorneys.

Right now, we’ve got tools that can be used for certain tasks, but there’s a movement toward what’s called agentic AI, where, instead of just performing a task and returning an output, these agents will be able to talk to each other to complete more complex tasks. What do I mean by that? For example, the other night I asked ChatGPT to help me come up with an itinerary for a seven-day trip to Portugal.

I got the itinerary, hotel recommendations, and restaurant recommendations, but it didn’t actually book the trip for me. The difference with agentic AI is that the agents would actually be able to perform those tasks. So basically, agentic AI has the ability to plan, execute, and then reflect on and learn from the results.

Julie DiMauro: There’s a lot of excitement surrounding generative AI in particular. Are there any misperceptions you think people, especially within the legal profession, have about these tools?

LeighAnne Thompson: I think the biggest misperception is that these are search and retrieval tools returning valid search results, rather than statistical predictors. I also think there is over-reliance on these tools. The outputs sound very confident, even when they’re wrong. So I see a lot of lawyers taking the outputs at their word, right, and relying on them without realizing that everything has to be checked and double-checked.

Julie DiMauro: So lawyers should approach generative AI differently from other AI applications, because there’s even more risk: large language models use a lot of data, and they spout out a lot of data. You would think the risk involved is different and the approach should be different.

LeighAnne Thompson: Absolutely, yes. Because generative AI, again, is a statistical predictor based on language, attorneys need to understand that, and then they need to understand how using these tools in the practice of law aligns with the rules of professional conduct, right? When I talk to my students, I always talk about five or six buckets: competence, diligence, and communication with clients. Do we need to talk to our clients about how we’re using these tools?

Candor, right: candor to the tribunal and to other parties, being honest about when we’re using these tools. Supervision is a huge one that I focus on all the time. Really, competence and supervision are the biggest ones, along with confidentiality, making sure that we are safeguarding our clients’ information.

Julie DiMauro: Absolutely. Now, say I’m practicing law at a firm and I’m trying to convince the firm to spend the money that AI requires. How do I get them on board, both with the resources and with the idea of bringing in AI, which has been the source of some controversy?

LeighAnne Thompson: That’s a really good question, too. From what I’ve heard from a number of practitioners, firms are kind of all over the map. Some firms have really embraced AI and want their associates using it, and others have banned it completely.

So again, I think it boils down to education. Often, for the legal profession to adopt new technology, it takes getting your hands dirty and seeing what the technology can do. That’s what I try to encourage my students to do: dive in, get your hands dirty, see what the tool is capable of, and then think about adopting it ethically.

Julie DiMauro: Absolutely makes sense. Now, thinking about you with your legal educator’s hat on, do you see AI playing a role in education that becomes broader over time? Could we reach a point where AI plays a central role in teaching future lawyers or is that a step too far?

LeighAnne Thompson: That’s a great question, too. I think a lot of universities and colleges across the country are dealing with this right now and trying to figure out the role of AI in education.

My hope for law schools is that educating lawyers about AI becomes part of the curriculum, part of the mandatory curriculum, so that it really does shape their education.

What I’m seeing a lot of law schools doing right now, and what Seattle University is doing, is creating multidisciplinary educational opportunities where our students not only learn about the law of technology but actually learn how the technology works alongside computer science students, and how these tools can be developed to solve real access-to-justice problems. That’s where I would love to see AI become a central part of legal education.

This summer, in my AI and the Practice of Law class, we brought in computer science students. Each of the law students identified an actual access-to-justice issue and worked alongside the computer science students to come up with a prototype to solve that problem.

The students worked together not only to build the prototype but also to talk through all of the ethical issues, the harms and the perils that might outweigh the benefits of those tools. So I think the more we can expose law students and future lawyers to experiences like that, the more value they will bring to the profession in terms of adopting these tools ethically in practice.

Julie DiMauro: Thanks so much for being a part of our GRIP podcast today, LeighAnne Thompson.

LeighAnne Thompson: Oh, well, thank you for having me. I do appreciate it.

Julie DiMauro: We just had LeighAnne Thompson from Seattle University Law School join us and share her incredibly helpful insights on the use of AI in legal education and in the legal field in general. Thanks to all of our listeners for tuning in to this GRIP podcast.

Don’t forget to check us out at grip.globalrelay.com, and we’ll see you here for another podcast program soon.