The US Treasury’s Financial Crimes Enforcement Network (FinCEN) issued an alert to help financial institutions identify fraud schemes associated with the use of deepfake media created with generative artificial intelligence (GenAI) tools.

The alert explains typologies associated with these schemes, provides red flag indicators to assist with identifying and reporting related suspicious activity, and reminds financial institutions of their reporting requirements under the Bank Secrecy Act (BSA), particularly in light of the challenges that can arise from the use of AI.

Deepfake media

FinCEN said it has observed an increase in suspicious activity reporting by financial institutions over the last two years, with institutions describing the suspected use of deepfake media in fraud schemes targeting both the institutions themselves and their customers.

In these schemes, the institutions say criminals alter or create fraudulent identity documents to circumvent identity verification and authentication methods.

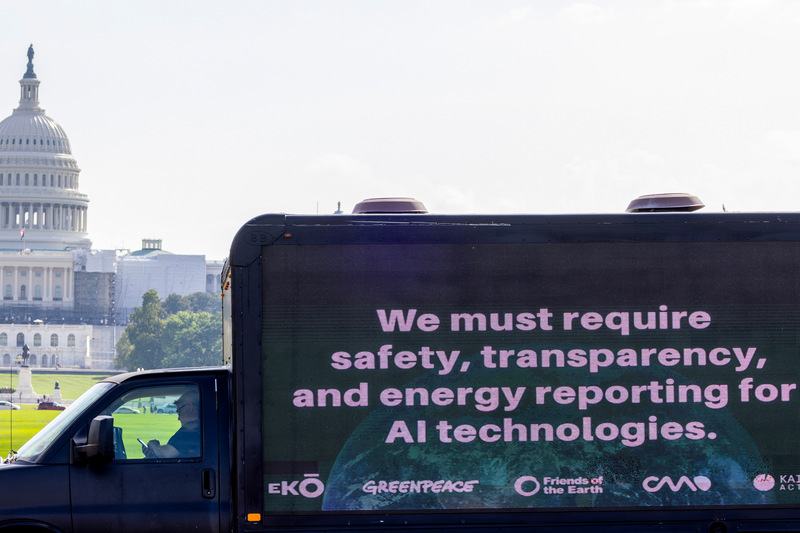

Because GenAI-rendered content is more realistic than ever, deepfakes can appear to depict real people and events, such as a person saying something they did not actually say. FinCEN says that “developers and companies producing GenAI tools have committed to implementing oversight and controls intended to mitigate malicious deepfakes and other misuses, but criminals have found methods to circumvent them.”

Open-source tools, meanwhile, allow any user to access and modify the tools’ code, potentially circumventing such controls.

Deepfake identities

FinCEN’s analysis of BSA data indicates that criminals have used GenAI to create falsified documents, photographs, and videos to circumvent financial institutions’ customer identification and verification and customer due diligence controls.

For example, some financial institutions have reported that criminals employed GenAI to alter or generate images used for identification documents, such as driver’s licenses or passport cards and books.

Criminals can create these deepfake images by modifying an authentic source image or by creating a synthetic image. Criminals have also combined GenAI images with stolen personally identifiable information (PII) or entirely fake PII to create synthetic identities.

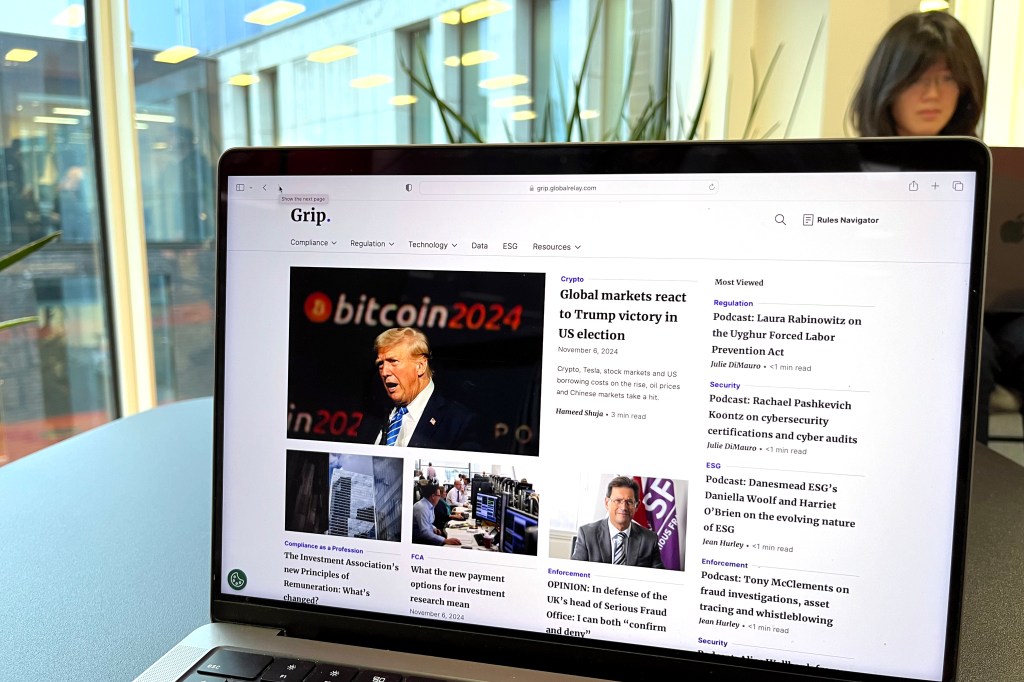

FinCEN analysis of BSA data also shows that malicious actors have successfully opened accounts using fraudulent identities suspected to have been produced with GenAI and used those accounts to receive and launder the proceeds of other fraud schemes. These fraud schemes include online scams and consumer fraud such as check fraud, credit card fraud, authorized push payment fraud, loan fraud, or unemployment fraud.

Detection and mitigation

FinCEN notes that institutions may detect such images by re-reviewing a customer’s account opening documents. When investigating a suspected deepfake image, reverse image searches and other open-source research may reveal that an identity photo matches an image in an online gallery of faces created with GenAI.
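As a rough illustration of the matching step, the sketch below compares an ID photo against a locally held gallery of known GenAI faces using an average hash (aHash), a simple perceptual hash. This is a swapped-in technique, not one FinCEN prescribes, and real reverse image searches query much larger external indexes. Images are assumed to be already decoded into grayscale pixel grids; the gallery names and the `max_distance` threshold are hypothetical.

```python
from typing import Dict, List

Image = List[List[int]]  # grayscale pixels 0-255, row-major (assumed pre-decoded)


def average_hash(img: Image, size: int = 8) -> int:
    """Average the image into a size x size grid, then emit one bit per cell:
    1 if the cell is brighter than the grid's mean, else 0."""
    h, w = len(img), len(img[0])
    if h < size or w < size:
        raise ValueError("image smaller than hash grid")
    cells = []
    for by in range(size):
        for bx in range(size):
            y0, y1 = by * h // size, (by + 1) * h // size
            x0, x1 = bx * w // size, (bx + 1) * w // size
            block = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def find_gallery_matches(photo: Image, gallery: Dict[str, int],
                         max_distance: int = 5) -> List[str]:
    """Names of gallery entries whose hash is within max_distance bits."""
    target = average_hash(photo)
    return sorted(name for name, g in gallery.items()
                  if hamming(target, g) <= max_distance)
```

Because the hash reflects coarse brightness structure, small edits such as re-compression or slight brightening usually leave it unchanged, which is why a near match is a lead for further review rather than proof.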


Financial institutions and third-party providers of identity verification solutions may also use more technically sophisticated techniques to identify potential deepfakes, such as examining an image’s metadata or using software designed to detect possible deepfakes or specific manipulations.
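One narrow form of metadata examination is reading the text chunks embedded in a PNG file. The minimal sketch below walks the PNG chunk layout with the standard library and reports any strings from a watch list. The watch list is a hypothetical assumption (some generators are reported to write prompt or settings text into PNG metadata), only uncompressed `tEXt` chunks are read (not `iTXt`, `zTXt`, or EXIF), and because metadata is easily stripped or forged, finding nothing says little about authenticity.

```python
import struct
import zlib
from typing import Dict, List

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Hypothetical watch list; an assumption to maintain locally, not an authoritative list.
GENERATOR_HINTS = ("parameters", "prompt", "stable diffusion", "midjourney", "dall-e")


def png_text_chunks(data: bytes) -> Dict[str, str]:
    """Return keyword -> text for every uncompressed tEXt chunk in a PNG."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts: Dict[str, str] = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte length, 4-byte type, body, 4-byte CRC over type+body.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        end = pos + 8 + length
        if end + 4 > len(data):
            raise ValueError("truncated chunk")
        body = data[pos + 8:end]
        (crc,) = struct.unpack(">I", data[end:end + 4])
        if zlib.crc32(ctype + body) & 0xFFFFFFFF != crc:
            raise ValueError(f"bad CRC in {ctype!r} chunk")
        if ctype == b"tEXt":
            keyword, _, text = body.partition(b"\x00")
            texts[keyword.decode("latin-1")] = text.decode("latin-1")
        if ctype == b"IEND":
            break
        pos = end + 4
    return texts


def generator_hints(data: bytes) -> List[str]:
    """Watch-list strings found in the image's tEXt metadata, if any."""
    hits = set()
    for key, value in png_text_chunks(data).items():
        haystack = f"{key} {value}".lower()
        hits.update(h for h in GENERATOR_HINTS if h in haystack)
    return sorted(hits)
```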

FinCEN said indicators warranting further due diligence may include the following:

- Access to an account from an IP address that is inconsistent with the customer’s profile.

- Patterns of apparent coordinated activity among multiple similar accounts.

- High payment volumes to potentially higher-risk payees, such as gambling websites or digital asset exchanges.

- High volumes of chargebacks or rejected payments.

- Patterns of rapid transactions by a newly opened account or an account with little prior transaction history.

- Patterns of withdrawing funds immediately after deposit and in manners that make payments difficult to reverse in cases of suspected fraud, such as through international bank transfers or payments to offshore digital asset exchanges and gambling sites.
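Several of these indicators lend themselves to simple rules over account data. The following is a minimal sketch assuming a hypothetical `Account`/`Txn` data model, login countries as a stand-in for IP geolocation, hypothetical channel labels, and illustrative thresholds (including treating 90% of a deposit as "swept"). It does not cover coordinated activity across multiple similar accounts, which requires cross-account analysis.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Set

# Hypothetical channel labels for payments that are hard to reverse.
HARD_TO_REVERSE = {"intl_wire", "crypto_exchange", "gambling"}


@dataclass
class Txn:
    time: datetime
    amount: float           # positive = deposit, negative = outgoing payment
    channel: str            # e.g. "ach", "intl_wire", "crypto_exchange"
    returned: bool = False  # chargeback or rejected payment


@dataclass
class Account:
    opened: datetime
    home_countries: Set[str]    # countries consistent with the customer profile
    login_countries: List[str]  # geolocated from login IP addresses (assumed)
    txns: List[Txn] = field(default_factory=list)


def red_flags(acct: Account, now: datetime, new_days: int = 30,
              rapid_count: int = 20, return_ratio: float = 0.2,
              sweep_window: timedelta = timedelta(hours=24)) -> List[str]:
    flags: List[str] = []
    # Access from a location inconsistent with the profile.
    if any(c not in acct.home_countries for c in acct.login_countries):
        flags.append("login_location_inconsistent")
    # High share of chargebacks or rejected payments.
    if acct.txns and sum(t.returned for t in acct.txns) / len(acct.txns) >= return_ratio:
        flags.append("high_return_rate")
    # Many transactions on a newly opened account.
    if now - acct.opened <= timedelta(days=new_days) and len(acct.txns) >= rapid_count:
        flags.append("rapid_activity_new_account")
    # A deposit mostly moved out through hard-to-reverse channels soon after arrival.
    outflows = [t for t in acct.txns if t.amount < 0 and t.channel in HARD_TO_REVERSE]
    for dep in (t for t in acct.txns if t.amount > 0):
        swept = sum(-t.amount for t in outflows
                    if dep.time <= t.time <= dep.time + sweep_window)
        if swept >= 0.9 * dep.amount:
            flags.append("deposit_swept_to_hard_to_reverse")
            break
    return flags
```

A flag from rules like these marks an account for the further due diligence FinCEN describes; it is not by itself a determination of fraud.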

FinCEN reminds firms to apply more scrutiny to inconsistencies among multiple identity documents submitted by the customer and between the identity document and other aspects of the customer’s profile.
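A consistency check of this kind can be sketched as a field-by-field comparison. The minimal example below assumes documents and the profile have already been parsed into dictionaries with hypothetical `name`, `dob`, and `expires` keys, and normalizes names so that accents, case, and punctuation do not trigger false mismatches.

```python
import unicodedata
from datetime import date
from typing import Dict, List


def normalize_name(name: str) -> str:
    """Fold accents, case, and punctuation so cosmetic differences don't count."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_marks = "".join(c for c in decomposed if not unicodedata.combining(c))
    kept = "".join(c for c in no_marks.upper() if c.isalnum() or c.isspace())
    return " ".join(kept.split())


def inconsistencies(profile: Dict, documents: List[Dict], as_of: date) -> List[str]:
    """List mismatches between each document and the profile, and among documents."""
    issues: List[str] = []
    for i, doc in enumerate(documents):
        if normalize_name(doc["name"]) != normalize_name(profile["name"]):
            issues.append(f"doc{i}: name mismatch")
        if doc["dob"] != profile["dob"]:
            issues.append(f"doc{i}: date of birth mismatch")
        if doc.get("expires") is not None and doc["expires"] < as_of:
            issues.append(f"doc{i}: document expired")
    # Submitted documents that disagree with each other on date of birth.
    if len({doc["dob"] for doc in documents}) > 1:
        issues.append("documents disagree on date of birth")
    return issues
```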

FinCEN also recommends that institutions use multifactor authentication (MFA), including phishing-resistant MFA, as well as live verification checks in which a customer is prompted to confirm their identity through audio or video. Although illicit actors may be able to respond to live verification prompts, or may access tools that generate synthetic audio and video responses on their behalf, their responses may reveal inconsistencies in the deepfake identity.
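One way to make a live verification prompt harder to satisfy with pre-recorded media is to ask for an unpredictable phrase within a short time window. The minimal sketch below issues such a challenge and checks the response; the word list and 30-second lifetime are hypothetical, and the transcript is assumed to come from a separate speech-to-text step. Passing it only shows the response is fresh: a real-time voice or video synthesis tool could still read the phrase, so reviewers would still look for the inconsistencies FinCEN mentions.

```python
import hmac
import re
import secrets
import time
from dataclasses import dataclass
from typing import Optional

# Hypothetical prompt vocabulary; a real deployment would use a larger list.
WORDS = ["amber", "harbor", "violet", "copper", "meadow",
         "falcon", "lantern", "orbit", "cedar", "pepper"]


@dataclass
class Challenge:
    nonce: str
    phrase: str
    issued_at: float
    ttl_seconds: float = 30.0


def issue_challenge(n_words: int = 4, now: Optional[float] = None) -> Challenge:
    """Create an unpredictable phrase the customer must say on camera."""
    phrase = " ".join(secrets.choice(WORDS) for _ in range(n_words))
    issued = time.time() if now is None else now
    return Challenge(nonce=secrets.token_hex(16), phrase=phrase, issued_at=issued)


def check_response(ch: Challenge, nonce: str, transcript: str,
                   now: Optional[float] = None) -> bool:
    """Accept only a response to this challenge, in time, repeating the phrase."""
    now = time.time() if now is None else now
    # Constant-time comparison of the session nonce.
    if not hmac.compare_digest(ch.nonce.encode(), nonce.encode()):
        return False
    if now - ch.issued_at > ch.ttl_seconds:
        return False
    # Ignore case and punctuation in the (assumed) speech-to-text transcript.
    return re.findall(r"[a-z]+", transcript.lower()) == ch.phrase.split()
```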

Businesses should also flag the use of third-party webcam plugins, which can let a customer display previously generated video instead of a live feed.
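A simple version of this flag compares the capture-device labels reported by the customer's client against a watch list of virtual-camera software. The sketch below assumes the labels have already been collected by the verification front end; the watch-list entries are hypothetical and would need local maintenance. Client-reported labels can be renamed or spoofed, so a clean result is weak evidence.

```python
from typing import Iterable, List

# Hypothetical watch list of substrings seen in virtual-camera device labels.
VIRTUAL_CAMERA_MARKERS = ("obs virtual camera", "manycam", "snap camera",
                          "xsplit vcam", "virtual")


def flag_virtual_cameras(device_labels: Iterable[str]) -> List[str]:
    """Return the reported device labels that look like virtual cameras."""
    return [label for label in device_labels
            if any(marker in label.lower() for marker in VIRTUAL_CAMERA_MARKERS)]
```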

Deepfakes in phishing scams

FinCEN further warns institutions that criminals may also target financial institution customers and employees through sophisticated, GenAI-enabled social engineering attempts in support of other scams and fraud typologies, such as business email compromise schemes, spear phishing attacks, elder financial exploitation, romance scams, and virtual currency investment scams.

For example, in family emergency schemes, scammers may use deepfake voices or videos to impersonate a victim’s family member, friend, or other trusted individual. Similarly, criminals have reportedly used GenAI tools to target companies by impersonating an executive or other trusted employee and then instructing victims to transfer large sums or make payments to accounts ultimately under the scammer’s control.

Recent research indicates that 92% of organizations have fallen victim to phishing attacks, with financial services the most affected sector, and that 99% of cybersecurity leaders are reportedly stressed about email security.