California, the world’s fifth biggest economy, is getting closer to passing a landmark AI law that could set the stage for how other states move forward with their own rulemaking.

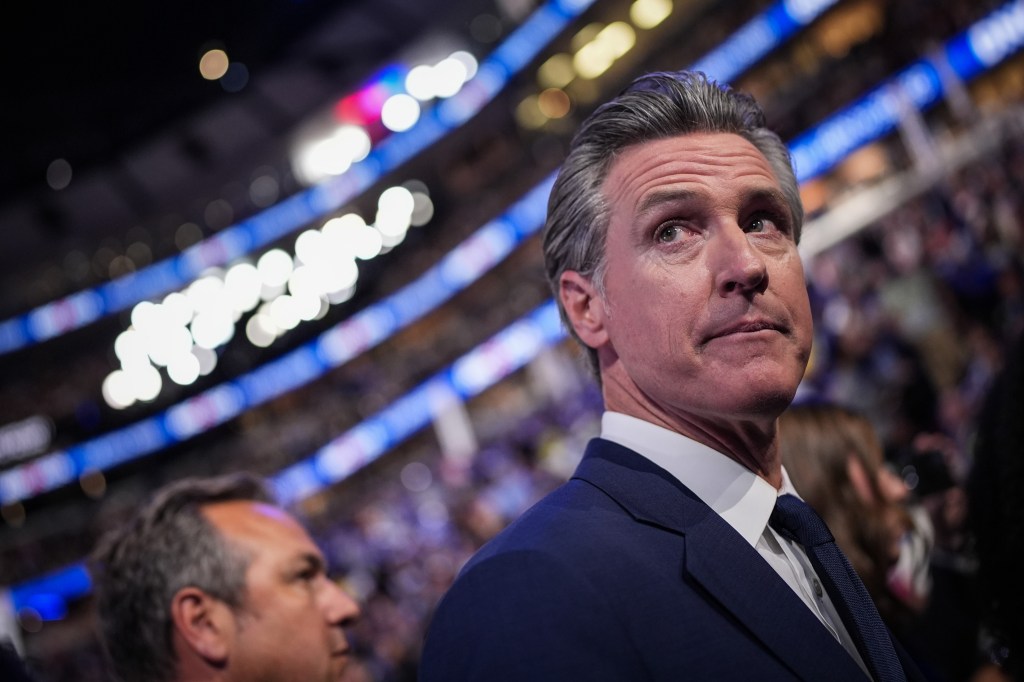

SB 1047, the California bill seeking to regulate AI, has passed both the state Assembly and Senate and now heads to Governor Gavin Newsom’s desk. The state Senate recently passed the legislation in a 29-9 vote, and Newsom has until September 30 to sign or veto it. He has not indicated whether he supports it.

Debate over the bill

Debate over the bill has been heated, with many tech groups and companies either opposed to its provisions or seeking changes. For instance, Anthropic has offered some support for the bill, subject to certain changes it hopes to see, while OpenAI opposes it, saying it could “stifle innovation.”

But there are some notable backers, including Elon Musk. He said that California “should probably pass the SB 1047 AI safety bill,” arguing that AI’s risk to the public justifies regulation. “This is a tough call and will make some people upset,” he said. (To be sure, as Axios points out, Musk previously called for a six-month pause on AI development, while his business endeavors have proceeded in the AI arena, often with fewer safeguards.)

Mainly, the tech groups are pushing for federal legislation rather than a hodgepodge of state-based rules. But others point out that the proposed legislation is a decent first step toward ensuring greater safety in creating and operating the tools, and could be helpful as companies and consumers wait for Congress to catch up to Europe, which has already established some AI guardrails.

Landon Klein, director of US policy at the Future of Life Institute, told Axios the bill’s authors landed at an impressive balance, and naysayers simply don’t want to be regulated.

“I think the concern is that if [AI companies] need to make sure that their systems are safe before they put them out, that they then hold some responsibility and some accountability for the potential harms that occur when they put those models out, and frankly, they just don’t want to face that accountability,” he said.

Rep. Pelosi and Dr. Li

Nancy Pelosi has urged her home state to reject the bill. A number of industry and business groups have also voiced opposition, including the US Chamber of Commerce, the Software and Information Industry Association, TechFreedom, and others. San Francisco Mayor London Breed said she is also opposed to it, and eight members of Congress who represent California districts earlier this month asked the governor to veto it.

In a press release, Pelosi quoted Stanford scholar Fei-Fei Li, who is considered California’s top AI academic and researcher, is widely known as the “Godmother of AI,” and has served as an adviser to President Biden on AI matters.

Dr. Li has said that SB 1047 “would have significant unintended consequences that would stifle innovation and will harm the US AI ecosystem.” Pelosi pointed out that there is other legislation pending in California to be considered, and that big tech should not dominate the conversation to the exclusion of academia and small businesses.

California AI moves

State Senator Scott Wiener, a Democrat from San Francisco and author of SB 1047, said the purpose of the proposed law is to codify safety testing that companies already agreed to with President Biden and leaders of other countries.

“With this vote, the Assembly has taken the truly historic step of working proactively to ensure an exciting new technology protects the public interest as it advances,” Wiener said in a press release.

In addition to SB 1047, earlier this week the California legislature moved to pass laws that require large online platforms such as Facebook to take down deepfakes related to elections and create a working group to issue guidance to schools on how to safely use AI.