Data centers have emerged as the backbone of modern life, powering everything from social media and cloud computing to telemedicine and artificial intelligence tools. Early data centers typically comprised one huge supercomputer, but modern versions are now home to thousands of servers connecting to various communication networks.

A data center is a centralized physical facility that stores businesses’ critical applications and data. It serves as a location where computing and networking equipment is used to collect, process, and store data, as well as to distribute and enable access to resources.

IDC’s Data Age paper predicted a tenfold increase in data volumes between 2018 and 2025, and it now predicts that 175 zettabytes of data will be in existence this year. That number, IDC tells us, is best illustrated by imagining an attempt to download all of that data at the current average internet speed: it would take 1.8 billion years.
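The arithmetic behind that figure can be roughly checked. The sketch below is illustrative only: the 25 Mb/s average connection speed is an assumption (the text above does not state which speed was used), and the function name is hypothetical.

```python
# Rough sanity check of the download-time figure quoted above.
# ASSUMPTION: an average connection speed of 25 megabits per second,
# chosen for illustration; the article does not give the speed used.

ZETTABYTE_BYTES = 10**21                 # 1 zettabyte = 10^21 bytes
SECONDS_PER_YEAR = 365.25 * 24 * 3600    # average year length in seconds


def download_years(zettabytes: float, speed_mbps: float) -> float:
    """Years needed to download `zettabytes` of data at `speed_mbps` Mb/s."""
    total_bits = zettabytes * ZETTABYTE_BYTES * 8
    seconds = total_bits / (speed_mbps * 10**6)
    return seconds / SECONDS_PER_YEAR


if __name__ == "__main__":
    years = download_years(175, 25)
    print(f"{years / 1e9:.2f} billion years")
```

Under that assumed speed, the result lands close to the 1.8 billion years cited. A faster or slower assumed connection scales the answer proportionally.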

Data centers communicate across on-premises facilities as well as various cloud locations and cloud providers. Their importance to technology creation and data preservation cannot be questioned, but the rapid growth of data processing and storage presents significant challenges in terms of energy demand and grid integration.

Lawrence Berkeley National Laboratory’s report on data center energy usage finds that data centers consumed about 4.4% of total US electricity in 2023 and are expected to consume approximately 6.7 to 12% of total US electricity by 2028.

To address these concerns, many data center operators, including Global Relay, are now prioritizing energy efficiency and renewable energy.

Global Relay’s green data center

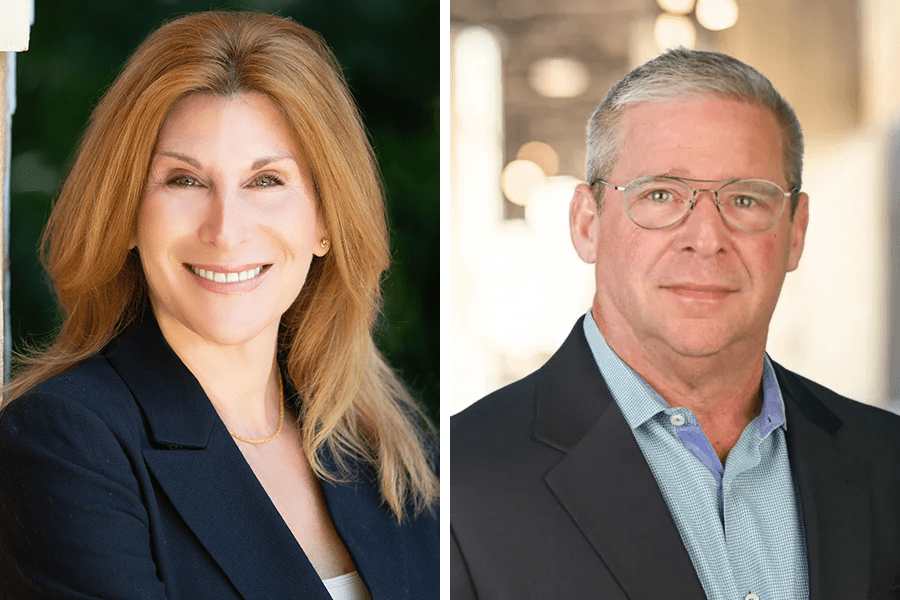

Global Relay has five data centers: an energy-efficient, or green, facility in North Vancouver, plus locations in Toronto, Winnipeg, Seattle and Chicago. The North Vancouver center went live on Earth Day in 2014. It was the culmination of a three-year project and a $24m capital investment, reflecting the company’s commitment to building cloud solutions with scalability, reliability and security, said Duff Reid, the company’s co-founder.

Photo: Global Relay

This center uses a cooling technology called evaporative cooling, which involves direct contact between water and the air stream that cools the data center. Specifically, hot air passes through the evaporative media, where it comes into contact with water. The water evaporates, drawing heat out of the air, and the cooled air flows directly into the server room.
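The cooling effect described above is often approximated with a textbook "saturation effectiveness" relation, in which the supply air temperature moves from the outdoor dry-bulb temperature toward the wet-bulb temperature. The sketch below is a generic illustration, not Global Relay’s design model: the function name, the 0.85 effectiveness value, and the example temperatures are all assumptions.

```python
# Minimal sketch of a direct evaporative cooler using the common
# saturation-effectiveness approximation:
#   T_supply = T_dry_bulb - effectiveness * (T_dry_bulb - T_wet_bulb)
# ASSUMPTION: all numbers below are hypothetical example values.


def supply_air_temp_c(dry_bulb_c: float, wet_bulb_c: float,
                      effectiveness: float = 0.85) -> float:
    """Estimate the air temperature leaving the evaporative media (deg C)."""
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    if wet_bulb_c > dry_bulb_c:
        raise ValueError("wet-bulb temperature cannot exceed dry-bulb")
    # Evaporation can cool the air at most down to the wet-bulb temperature.
    return dry_bulb_c - effectiveness * (dry_bulb_c - wet_bulb_c)


if __name__ == "__main__":
    # Hypothetical warm day: 30 C dry-bulb, 18 C wet-bulb.
    print(f"{supply_air_temp_c(30.0, 18.0):.1f} C")
```

The relation shows why climate matters for this approach: the drier the outdoor air (the larger the gap between dry-bulb and wet-bulb temperatures), the more cooling evaporation can deliver.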

“Evaporative cooling is a greener option, so we went with it for the North Vancouver center,” Reid said. This is on top of using direct-to-chip cooling methods that leverage liquid’s high thermal transfer properties to remove heat from individual processor chips.

Traditional data centers typically use Computer Room Air Conditioner (CRAC) units — air conditioning systems that regulate the temperature and humidity, ensuring the heat generated by the computer equipment won’t damage the electronics and cause system failures, Reid explained.

Reid explained that each data center has to have exceptional performance, whether it is a green facility or not.

“Customers expect to be able to receive the communications they store with Global Relay within a couple of seconds,” said Global Relay CEO and Founder Warren Roy.

Reid explained that liquid cooling keeps processors’ temperatures at optimal levels, regardless of load and external climates.

One challenge Reid noted, which won’t come as a surprise to compliance professionals, is staying on top of the ASHRAE (American Society of Heating, Refrigerating and Air-Conditioning Engineers) standards and guidelines for energy efficiency and greenhouse gas emissions in buildings.

Data centers in the Trump era

Last month, President Donald Trump announced a $40 billion investment pledge in US-based data centers from his business partner Hussain Sajwani. Trump also unveiled another $500 billion in planned private-sector investments in AI infrastructure and then signed an executive order rolling back Biden-era policies that took a more cautious approach to AI. The order directed “the development of an AI Action Plan to sustain and enhance America’s AI dominance.”

This month, Dominari Holdings launched American Data Centers Inc, in which Donald Trump Jr and Eric Trump have invested, with Dominari saying its plans were “to address the growing demand for high-performance computing infrastructure to support artificial intelligence, cloud computing and cryptocurrency mining.”

Notably, President Trump has supported the idea of co-located data centers. These involve the strategic placement of data centers alongside nuclear power plants, providing a direct energy source while reducing strain on the national electric grid.

And soon after that pronouncement, the US Secretary of Energy put out an order implementing Unleashing American Energy, announcing that the Energy Department will be taking action to “unleash commercial nuclear power in the United States” and “streamline permitting.”

Supply and efficiency

Efforts to improve efficiency and computing power often come into conflict in the market. The frenzy to keep ahead of the pace of AI development has led investors to throw money at data centers and chip production in a manner critics say fails to nurture innovation.

DeepSeek, the Chinese-developed AI app, was able to perform just as well as ChatGPT while needing only a fraction of the GPUs. That led to a chilling of “data center euphoria” in late January, as investors feared a looming asset oversupply reminiscent of the green investing bubble.

That stunning revelation caused a mass selloff of NVIDIA stock, wiping out $593 billion in value as investors evinced skepticism over the necessity of massive investments in computing power and the promise of slow payouts. Indeed, Deloitte noted in a report predicting an “AI gap year” that investment in AI infrastructure is currently 10 times higher than returns, with the gap continuing to widen.

But investment managers like Blackstone have remained positive about their strong commitment to data center infrastructure. Optimistic investors predict the gap between efficiency and need for supply will eventually be closed.

Time will tell if that instinct proves correct. NVIDIA’s stock price has rebounded since its January crash, but serious questions linger about oversupply. Microsoft recently cancelled leases for US data center capacity, and Reuters reports that hedge funds have been exiting media and tech stocks at the fastest pace in six months, following an apparent decline in demand for NVIDIA’s chips.

NVIDIA’s earnings report, due to be released next week, will be a bellwether indicating the market’s near future.

Looking to the future

The status of cloud computing promises to shift dramatically in the next decade as advances in quantum computing proceed. Microsoft and Google recently reported breakthroughs in quantum computing that could exponentially boost computing power and efficiency, although many physicists are skeptical that the technology as it currently exists could be applied at scale.

The foundation of quantum computing is the “qubit,” which, unlike the ones and zeroes of classical computing, can exist in a superposition of states and be “entangled” with other qubits, allowing a computer to explore many possible solutions to a problem simultaneously.

If significant gains in quantum computing could be made, they would introduce a host of new practical challenges to how data centers are built and managed. The paradigm shift would require commensurate updates in cooling technology, because quantum computers must be cooled to near absolute zero in order to function.

A transition to quantum computers would also require a complete revamp of cybersecurity protocols, as sufficiently powerful quantum computers could break much of today’s widely used public-key encryption far faster than any classical brute-force attack.

Although that prospect remains on the distant horizon, G7 members have already started to prepare for the complex security challenges that quantum computing might create.