The first International AI Safety Report, co-authored by 96 independent AI experts from around the world, and supervised by an International Expert Advisory Panel, has been published.

The creation of the report was supported by heads of state and world agencies who were present at the Bletchley Park AI Safety Summit in the UK in November 2023. An interim publication came out in May 2024.

The 298-page document considers AI risks and AI safety, with a focus on general-purpose artificial intelligence: the type of AI technology most commonly used to perform a wide variety of tasks in modern society.

The authors acknowledge that AI provides “many potential benefits for people, businesses, and society,” but argue that the risks associated with general-purpose AI have not been properly studied or understood, despite its widespread use. Much of the discussion in the report revolves around three central questions:

- What can general-purpose AI do?

- What are the risks associated with general-purpose AI?

- What mitigation techniques are there against these risks?

“We, the experts contributing to this report, continue to disagree on several questions, minor and major, around general-purpose AI capabilities, risks, and risk mitigations. But we consider this report essential for improving our collective understanding of this technology and its potential risks,” the authors said.

Professor Yoshua Bengio, chair of the independent experts responsible for preparing the report, said: “AI remains a fast-moving field. To keep up with this pace, policymakers and governments need to have access to the current scientific understanding on what risks advanced AI might pose.”

What are the risks?

The report sorts the risks posed by general-purpose AI into three categories: risks from malicious use, risks from malfunctions, and systemic risks.

The first category, risks from malicious use, includes harm to individuals through fake content, manipulation of public opinion, cyber offence, and biological and chemical attacks.

“Malicious actors can use general-purpose AI to generate fake content that harms individuals in a targeted way,” says the report, adding that “the scientific evidence on these uses is limited.” The report goes on to say: “In recent months, limited progress has been made in scientifically capturing the extent of the problem.”

The second category covers risks arising from malfunctions, such as reliability issues, bias, and loss of control over the technology.

The authors warn that “relying on general-purpose AI products that fail to fulfil their intended function can lead to harm,” for example by making up facts, generating incorrect code, or providing inaccurate medical information.

“Such reliability issues occur because of technical shortcomings or misconceptions about the capabilities and limitations of the technology,” the report explains.

These reliability issues are hard to anticipate, not least because policymakers have to contend with “the lack of standardised practices for predicting, identifying, and mitigating reliability issues.”

The third and final category covers systemic risks, including labor market risks, the global AI R&D divide, market concentration and single points of failure, and risks to the environment, to privacy, and of copyright infringement.

The authors discuss potential future scenarios with “general-purpose AI that outperforms humans on many complex tasks,” and predict that “the labour market impacts would likely be profound.”

According to the report, “recent evidence suggests rapidly growing adoption rates,” while “mitigating negative impacts on workers is challenging given the uncertainty around the pace and scale of future impacts.”

Global inequality

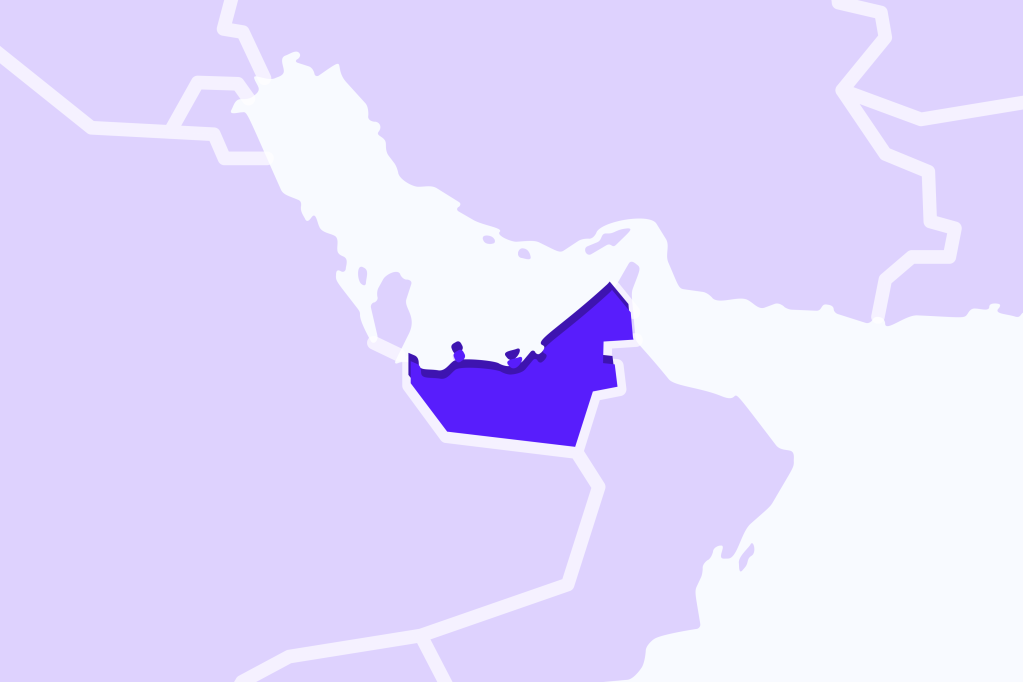

The report also notes that “large companies in countries with strong digital infrastructure lead in general-purpose AI R&D, which could lead to an increase in global inequality and dependencies.”

The fact that “market shares for general-purpose AI tend to be highly concentrated among a few players” is also considered a problem, and “can create vulnerability to systemic failures.”

Another risk is that “general-purpose AI is a moderate but rapidly growing contributor to global environmental impacts through energy use and greenhouse gas (GHG) emissions.”

Privacy is another concern: “General-purpose AI systems can cause or contribute to violations of user privacy,” as they can “leak sensitive information acquired during training or while interacting with users.”

Last but not least: “The use of vast amounts of data for training general-purpose AI models has caused concerns related to data rights and intellectual property” because “AI content creation challenges traditional systems of data consent, compensation, and control.”

Risk management approaches

According to the report, managing AI-related risks involves addressing technical and societal challenges, identifying and assessing risks, and mitigating and monitoring them. The authors argue that “the context of general-purpose AI risk management is uniquely complex due to the technology’s rapid evolution and broad applicability.”

On the technical side, the report notes that “autonomous general-purpose AI agents may increase risks” and that “developers understand little about how their models operate internally.”

The report also warns that “harmful behaviours, including unintended goal-oriented behaviours, remain persistent,” that there is an “evaluation gap” for safety, and that “system flaws can have a rapid global impact.”

On the societal side, “As general-purpose AI advances rapidly, risk assessment, risk mitigation, governance, and enforcement efforts can struggle to keep pace.”

Another challenge is that “the rapid growth and consolidation in the AI industry raises concerns about certain AI companies becoming particularly powerful because critical sectors in society are dependent on their products.”

Legal liability

The report adds that “the inherent lack of both algorithmic transparency and institutional transparency in general-purpose AI makes legal liability hard to determine, potentially hindering governance and enforcement.”

In terms of risk identification and assessment, the authors warn that existing quantitative methods for assessing general-purpose AI risks, despite being very useful for certain purposes, “have significant limitations.”

They believe that “rigorous risk assessment requires combining multiple evaluation approaches, significant resources, and better access.”

They add that “the absence of clear risk assessment standards and rigorous evaluations is creating an urgent policy challenge, as AI models are being deployed faster than their risks can be evaluated.”

In terms of risk mitigation and monitoring, the report argues that “current training methods show progress on mitigating safety hazards from malfunctions and malicious use but remain fundamentally limited.”

Other observations on mitigation and monitoring include:

- “Adversarial training provides limited robustness against attacks.”

- “Since the publication of the Interim Report (May 2024), recent advances reveal both progress and new concerns.”

- “Key challenges for policymakers centre around uncertainty and verification.”

The authors believe there is considerable uncertainty about both the near-term and the long-term future of general-purpose AI, with very positive and very negative outcomes both on the cards.

They argue that if researchers and policymakers identify and manage risk properly, general-purpose AI can greatly benefit humans through “education, medical applications, research advances in fields such as chemistry, biology, or physics, and generally increased prosperity.”

But if they fail to do so, the technology can also pose risks through “malicious use and malfunctioning, for instance through deepfakes, scams and biased outputs.”

The report concludes by insisting that “AI does not happen to us; choices made by people determine its future.”