In late November 2022, OpenAI released ChatGPT, a powerful AI-powered chatbot that could handle users’ questions and requests for information or content in a convincing and confident manner. The number of users signing up for the tool grew very rapidly, and people used it to write letters, edit text, generate lists, prepare presentations and generate code, among a myriad of other things.

But whilst the use of ChatGPT could mean efficiency gains and cost savings for businesses, its use by organizations and their staff does give rise to a number of different issues, which organizations must consider and manage.

We’ve identified the types of issues organizations need to watch out for in relation to the use of ChatGPT and what they should do to manage those issues.

GPT or DALL-E

First, what makes large language or image models like GPT or DALL-E different to other forms of AI or search?

In many ways this type of AI is very similar to earlier AI in terms of development and structure. The large language model(s) underneath GPT consist of highly complex artificial neural networks (ANNs), and there were many other ANNs before OpenAI’s models. The key differences between OpenAI’s models and other ANNs are the vast size of the training corpora behind GPT-3 and GPT-4 and the “transformer” architecture on which they are built, whose attention mechanism makes them particularly adept at analyzing and generating language.

The transformer architecture was introduced by researchers at Google, including its Google Brain team, in 2017 and published openly. Ultimately, the real difference between the OpenAI GPT models and earlier ANNs is the sheer efficacy of GPT in analyzing, generating, and predicting language. In terms of usage and adoption, OpenAI GPT models have likely already outstripped all other models to date, by multiple orders of magnitude. Although the potential issues associated with training data, bias, overfitting and the like are generally similar to those of earlier ANNs, they will now be relevant to organizations and in contexts where such considerations have so far been irrelevant or unknown.

A key feature is the accessibility of the recently released large language models. OpenAI’s ChatGPT and DALL-E have consumer interfaces that allow anyone to create an account and use the technology to generate answers. OpenAI also offers enterprise API access to GPT-4, through which more sophisticated integrations can be built, including using the technology to select answers from a more limited specialist training or reference data set. Because of the computing power required to train this type of AI, much use of these tools is on a subscription basis (eg GPT-4). However, some models, generally smaller and narrower in their application, are open source and can be downloaded and hosted locally.

Here, we are discussing OpenAI’s ChatGPT and GPT-4 offerings. There are other large language models (LLMs) which will operate differently and have different legal terms and so not all the points below will be universally applicable to all LLMs.

ChatGPT consumer interface

So, let’s start with the employees using the ChatGPT consumer interface – what issues would that present?

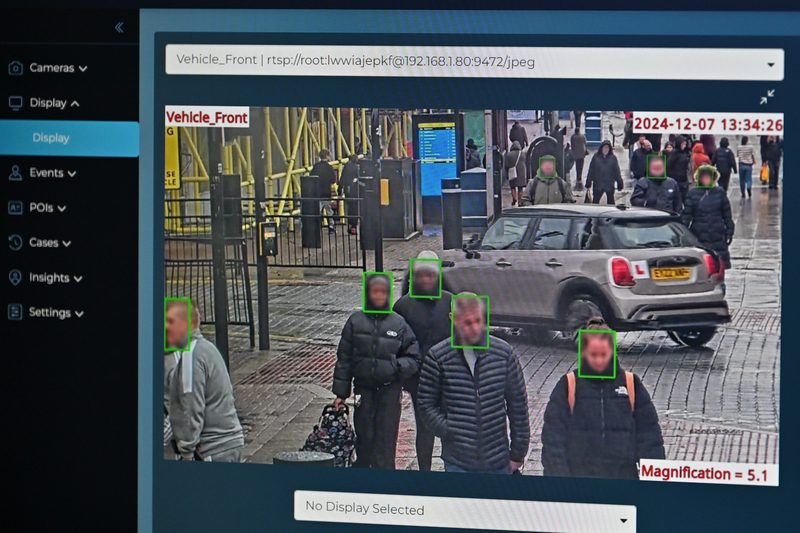

Confidentiality of input

From a user experience perspective, using ChatGPT is similar to using a search engine or a translation application, in so far as the query or the text to be translated is input into the consumer interface. However, with GPT-4 your employees could input prompts of up to around 25,000 words, which is clearly far more than goes into a search engine query. Also, all use of ChatGPT by employees is on a logged-in basis, so OpenAI knows exactly who made each query. A number of issues arise in connection with this.

First, the ChatGPT terms of use give OpenAI the right to use that input content to develop and improve the services. Therefore, anything that your employees input could be retained and accessed by OpenAI staff or their subcontractors for these purposes. Although it is possible to select an option to opt out of use for these purposes, it is not clear whether the input data is still retained. This could lead to disclosures of your business’s confidential information and also to breaches of contractual duties of confidentiality to third parties.


Second, OpenAI does not give any security assurances in its terms of use (although there is an extensive security portal, and the privacy policy states that commercially reasonable security measures will be applied, a statement that could help found a claim for misrepresentation in the event of a security breach). In any event, OpenAI’s liability is limited to the greater of $100 or the fees paid in the past 12 months and, as the contract is with the employee and not the enterprise, the enterprise would not itself be able to bring a claim in relation to any confidentiality or security risks that materialize.

If your employees use information relating to individuals in input prompts for business-related purposes, your organization would likely be the data controller of the inputted personal data. You would need to ensure that the relevant individuals are made aware of this processing via privacy notices and that there are grounds justifying the processing. The security concerns identified in the preceding paragraph will also need to be considered.

A corporate ChatGPT policy should make employees aware of the uncertainty as to how input prompts may be handled and should ban the use of personal information and any client or confidential information in such input prompts.
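Policy can be backed up with tooling. As a minimal sketch, assuming an organization wants to screen prompts before they leave its network, the Python below redacts obvious identifiers and flags terms from a hypothetical list of confidential project names. The `screen_prompt` function, the patterns and the term list are illustrative assumptions. Simple patterns like these will miss most personal data (names, addresses and so on), so a screen of this kind supplements the policy and training rather than replacing them.

```python
import re

# Illustrative patterns only: real personal data takes many more forms
# (names, addresses, ID numbers) than simple regular expressions can catch.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def screen_prompt(prompt: str, confidential_terms: list[str]) -> tuple[str, list[str]]:
    """Redact simple identifiers and report any confidential terms found.

    Returns the redacted prompt and a list of issues; a non-empty list
    means the prompt should be blocked or reviewed before submission.
    """
    issues = []
    redacted = EMAIL_RE.sub("[EMAIL]", prompt)
    redacted = PHONE_RE.sub("[PHONE]", redacted)
    if redacted != prompt:
        issues.append("possible personal data redacted")
    lowered = redacted.lower()
    for term in confidential_terms:
        if term.lower() in lowered:
            issues.append(f"confidential term: {term}")
    return redacted, issues


# Hypothetical example: an email address plus an internal project name.
print(screen_prompt("Email jane.doe@example.com about Project Falcon", ["Project Falcon"]))
```

Here the email address is replaced with `[EMAIL]`, and the prompt is flagged both for the redaction and for the confidential project name, so it would be held back for review.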

Incorrect or misleading outputs

GPT-3 and GPT-4 do not search the internet; rather, they generate content based on the data they were last trained on and the underlying algorithm. Sampling settings (often called “hyperparameters”), such as “temperature”, determine how conservative or creative the output will be. Additionally, the quality and detail of the submitted prompt has a huge impact on the quality of the response.
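To illustrate how a setting such as temperature works in general, here is a minimal Python sketch of temperature-scaled sampling over a handful of made-up next-word scores. It shows the general technique only. OpenAI has not published GPT's internal implementation, and the candidate words, scores and function names below are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw next-word scores into probabilities.

    Dividing by a temperature below 1 sharpens the distribution (more
    conservative choices); a temperature above 1 flattens it (more varied,
    "creative" choices).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens: list[str], logits: list[float],
                      temperature: float, rng: random.Random) -> str:
    """Randomly pick one word according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidate next words and made-up model scores.
tokens = ["contract", "agreement", "banana"]
logits = [2.0, 1.5, -1.0]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")

rng = random.Random()
print([sample_next_token(tokens, logits, 1.0, rng) for _ in range(5)])
```

Lowering the temperature concentrates probability on the top-scoring word; raising it spreads probability across the alternatives. Because each word is sampled rather than chosen deterministically, repeated calls with identical input can return different words, which is one reason the same prompt can produce different outputs.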

Using the output of ChatGPT without a framework for benchmarking the quality of the input (the prompt) and the accuracy of the output is a leap of faith. The output should therefore not be used unless reviewed by someone who understands how the model works together with someone possessing domain expertise in the subject matter who is in a position to gauge the accuracy/quality of the output.

Biased and/or offensive outputs

ChatGPT is trained on real-world data, which reflects the biases, inequalities and offensive conversations and content present in the real world. OpenAI researchers have set rules meant to weed out such content, but the subjectivity of the determination means they will never satisfy everyone; indeed, OpenAI is quickly finding itself in the controversial world of content moderation. If employees communicate such content to others, you, the employer, may be vicariously liable.

As such, employees should once again be urged to check output before using it.

Non-unique outputs and detection of use

ChatGPT may (but won’t always) generate the same output to the same or similar prompts. There are also tools available to detect AI generated content, although they are not very accurate.

You should ensure that your employees understand that others may be able to detect that output is AI-generated rather than human-generated, and that they avoid using it in situations where this could be reputationally damaging. It may be safer to be transparent about when ChatGPT has been used.

Ownership of output

Currently, OpenAI assigns all rights in output to the user (although it retains a right to use it for improving its services). However, in some jurisdictions, copyright may not subsist for non-human authored content. This would make enforcing rights against third parties more difficult.

Training data IP infringements

GPT was trained on copyright works. The generated output may be very similar or even identical to the training works. At a certain point, this may amount to copyright infringement by OpenAI and by the user. Whilst fair dealing exceptions might apply to personal use, they will not apply to commercial use in some countries.

Infringement cases have already started. Notably, Getty Images has brought copyright infringement proceedings against Stability AI in the UK High Court for the use of images from its image library (including the reproduction of the Getty Images watermark in some of the Stability-AI-generated images).

Where output is going to be valuable, widely reproduced or disseminated, this latent IP infringement risk may make using it too risky. You should ensure your employees declare whether output is generated by AI so that these types of risks can be evaluated before such use is made.

Training data privacy infringements

The Italian data protection authority has temporarily banned the use of ChatGPT in Italy for a number of reasons including that the individuals whose information was in the training data set were not given notice by OpenAI that their information was held and being used for training and that OpenAI does not appear to have a legal ground to justify such processing.

You should therefore ensure that your policy prohibits making queries about individuals through ChatGPT, so that your organization does not become exposed to the same potential data protection infringements. Additionally, AI models have previously been shown to be particularly adept at re-identifying data subjects, even where the source data set was supposedly de-identified in accordance with existing standards.

Use of ChatGPT in ways that affect or involve, or potentially affect or involve, personal data should not be undertaken in the enterprise without a review of the use case (preferably by someone with knowledge of AI-based re-identification attacks) and approval by the legal team after considering that review.

Here is a checklist of the risks outlined above with options to mitigate:

| Risk | Options to mitigate |
| --- | --- |
| Prompt input goes to OpenAI; OpenAI security may fail; OpenAI caps liability at $100 (or the fees paid, if greater). | If possible, negotiate an enterprise license with OpenAI with robust security and higher caps. Sanitize the content of prompts (no personal data, no corporate confidential information). |
| Prompt input goes to OpenAI; OpenAI may use prompt input as training data, which may therefore end up in answers to third parties’ prompts. | Ensure users opt out of use for training (but this is a manual opt-out and many will fail to do it). If possible, negotiate corporate access under which all use through corporate registered accounts is opted out. |
| Outputs may be inaccurate or misleading. | Warn users; ban certain uses; do not allow use unless checked by a subject matter expert (SME). |
| Outputs may be biased or offensive. | As above, but consider who should check for bias. |
| Outputs may not be unique. | Warn users not to try to pass off the output as original; consider labelling where AI has been used. |
| Outputs may differ given the same input/prompt. | Warn users that the model’s output will often vary, even when given identical inputs (prompts). |
| Outputs may be detected as generated by AI. | Warn users not to try to pass off the output as original; consider labelling where AI has been used. |
| IP rights may not subsist in output. | Warn that stopping a third party from reproducing the output may be harder (in the absence of contractual controls). |
| Training data infringes third-party IP rights. | Warn that (a) OpenAI may have to stop providing the service abruptly and (b) there is a potential IP infringement claim against the user/employer, particularly where output is valuable, published or widely disseminated. Limit any use outside the organization without prior approval. |
| Training data infringes third-party data privacy rights. | Warn that (a) OpenAI may have to stop providing the service abruptly and (b) if personal data is put into prompts or returned in output, there is a potential data protection (DP) claim against the user/employer, particularly where output is sensitive/private, published or widely disseminated. Limit any use outside the organization without prior approval. |
| Valuable uses may merit trying to overcome the issues above. | Filtering/fine-tuning output through other AI or data may improve accuracy and help remove biased and inappropriate outputs. Consider the existential IP and DP risks to the technology more deeply before investing. |
| Precisely how output is generated is not known or completely predictable. | Requires SME checking before use/reliance. If used to make decisions about people automatically, data protection law requires organizations to provide an explanation of how the automated AI works (including the ChatGPT element), the key factors it will take into account and the mitigations applied against bias and inaccuracy risks, and to provide a right to contest the decision with a human. |
| Large language models like GPT can be particularly error-prone in mathematical reasoning and quantitative analysis. | GPT should not be used for mathematical reasoning and quantitative analysis. Special use cases vetted by appropriate professionals would be an exception, but by default GPT should be mistrusted for quantitative matters. |
| GPT is particularly good at writing different types of computer code, but may do so with errors or vulnerabilities. | GPT may make a powerful coding assistant, but code should still be unit-tested and vetted for vulnerabilities using standard, accepted methods. |
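The last two rows of the table can be illustrated together. The Python below is a minimal sketch: `apply_discount` is a hypothetical function of the kind a coding assistant might suggest for a simple quantitative task, and the unit tests, written by someone who knows the expected results, check its arithmetic, rounding and input validation before it is relied on. The function and the test values are illustrative assumptions.

```python
import unittest
from decimal import Decimal, ROUND_HALF_UP


# Hypothetical AI-suggested function, treated like any unreviewed code:
# it is only relied on once it passes tests written by a person.
def apply_discount(price: Decimal, percent: Decimal) -> Decimal:
    """Return price reduced by percent, rounded half-up to two decimal places."""
    if not Decimal(0) <= percent <= Decimal(100):
        raise ValueError("percent must be between 0 and 100")
    discounted = price * (Decimal(100) - percent) / Decimal(100)
    return discounted.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(Decimal("80.00"), Decimal("25")), Decimal("60.00"))

    def test_rounds_half_up(self):
        # 20.01 at 50% off is exactly 10.005, which should round up to 10.01.
        self.assertEqual(apply_discount(Decimal("20.01"), Decimal("50")), Decimal("10.01"))

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(Decimal("10.00"), Decimal("150"))


if __name__ == "__main__":
    unittest.main(argv=["apply_discount_tests"], exit=False)
```

Using `Decimal` rather than binary floating point keeps the money arithmetic exact, so the rounding test checks the function's logic rather than float artifacts.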

An approach that marries theory with practice would be to risk-rate the types of uses that employees might make of applications such as ChatGPT and classify them into prohibited uses, uses that require approval and uses that can be made without approval. Employees would need to be trained on the risks and the categories and required to log where they are using the tool, and some form of monitoring should be considered.
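The resulting classification could be recorded in something as simple as a lookup table. The Python below is a minimal illustration under stated assumptions: the example uses, their categories and the `classify_use` helper are all hypothetical, and a real list would come from the organization’s own risk rating. Every lookup is appended to a log.

```python
from enum import Enum


class UseCategory(Enum):
    PROHIBITED = "prohibited"
    REQUIRES_APPROVAL = "requires approval"
    PERMITTED = "permitted without approval"


# Hypothetical examples only: each organization would populate this from
# its own risk rating of how employees actually use the tool.
USE_POLICY = {
    "queries about individuals": UseCategory.PROHIBITED,
    "prompts containing client confidential information": UseCategory.PROHIBITED,
    "text published outside the organization": UseCategory.REQUIRES_APPROVAL,
    "generating production code": UseCategory.REQUIRES_APPROVAL,
    "drafting internal emails": UseCategory.PERMITTED,
}

# Simple in-memory record of (employee, use, category) for later monitoring.
usage_log: list[tuple[str, str, UseCategory]] = []


def classify_use(employee: str, use: str) -> UseCategory:
    """Look up a proposed use and log it; unassessed uses require approval."""
    category = USE_POLICY.get(use, UseCategory.REQUIRES_APPROVAL)
    usage_log.append((employee, use, category))
    return category
```

Defaulting unknown uses to "requires approval" rather than "permitted" means that new kinds of use reach a reviewer before they become routine.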

So, those were the risks in employees using the ChatGPT consumer interface; what is the difference if the IT or data science team is using GPT via the enterprise API in a more sophisticated implementation?

The issues above still apply but there are some differences:

Confidentiality of input

Where data is input via the enterprise API, OpenAI does not seek to use it to improve the OpenAI services (however, the terms still do not state when it is deleted). To the extent personal input data is used, OpenAI characterizes itself as a data processor of the enterprise user and will enter into a data processing addendum with it. That addendum does include a security schedule (which cross-refers to the security portal) and provides for the return of personal data, but only on termination of the services (ie there is no scope for the enterprise user to direct OpenAI to delete its personal data during the term); it also does not apply to other input data. So, unless more robust terms are negotiated, the same level of caution as to what is input is required.

Incorrect or misleading outputs

To some extent this can be mitigated through fine-tuning (further training the model on a specific data set) or through prompt augmentation (adding a large amount of relevant information to the prompt to increase the likely relevance and quality of the response), although again, it would be essential to understand OpenAI’s security stance before providing greater quantities of input prompt information to it.
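Prompt augmentation can be sketched in a few lines. The Python below is a minimal illustration, assuming a small set of internal reference passages: it ranks them by simple word overlap with the question and prepends the best matches to the prompt. Production systems typically use embedding-based search instead; the keyword scoring, the function names and the example passages are assumptions for illustration. Every passage added is more information sent to the model provider, which is why OpenAI’s security stance matters here.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-case word set used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_augmented_prompt(question: str, passages: list[str], top_k: int = 2) -> str:
    """Prepend the top_k passages sharing the most words with the question."""
    q_words = tokenize(question)
    # sorted() is stable, so passages with equal scores keep their original order.
    ranked = sorted(passages, key=lambda p: len(q_words & tokenize(p)), reverse=True)
    context = "\n".join(f"- {p}" for p in ranked[:top_k])
    return (
        "Answer using only the reference material below. "
        "If the answer is not there, say so.\n\n"
        f"Reference material:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical internal reference passages.
passages = [
    "Annual leave is 25 days per year.",
    "The office closes at 6pm.",
    "Parental leave is 20 weeks.",
]
print(build_augmented_prompt("How many days of annual leave do staff get?", passages))
```

Instructing the model to answer only from the supplied material, and to say when the answer is missing, is one way to constrain inaccurate responses, although it does not guarantee them away.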

Explainability

Although there are explanations of how ChatGPT was trained, it is a proprietary technology and not fully transparent as to the underlying processes that were used to build it, or how the model works or responds to any particular prompt. It is entirely possible that in any particular circumstance, ChatGPT will provide an inaccurate response.

The use case to which ChatGPT is applied will need to be one that can tolerate uncertainty, or the output should be sufficiently constrained or checked that accuracy is brought up to an appropriate level (so that users can truthfully be assured it will be consistently achieved). At least in Europe, if the application is used to make decisions about individuals that have a significant impact and are made without human input, the user organization will need to:

- explain how the automated AI works;

- explain the key factors it will take into account;

- explain the mitigations applied against bias and inaccuracy risks; and

- provide a right to contest the decision with a human.

A data protection impact assessment would be required before rolling out such an implementation. We would strongly recommend this forms part of an overarching AI impact assessment pulling together the details of the AI implementation and feeding them into assessments of the other areas identified in this post.

Our take

Similar to consumer translation services, personal messaging services and search, ChatGPT has the potential to facilitate corporate data leakage. The other risks are more nuanced. Banning its use is probably unrealistic, so in order to realize the opportunities this technology offers, a clear usage policy with training will be essential.

Marcus Evans is EMEA head of information governance, privacy and cybersecurity, based in London. Lara White, partner, is a communications, media and technology lawyer based in London. Steven B. Roosa is head of NRF digital analytics and technology assessment platform, United States, based in New York.