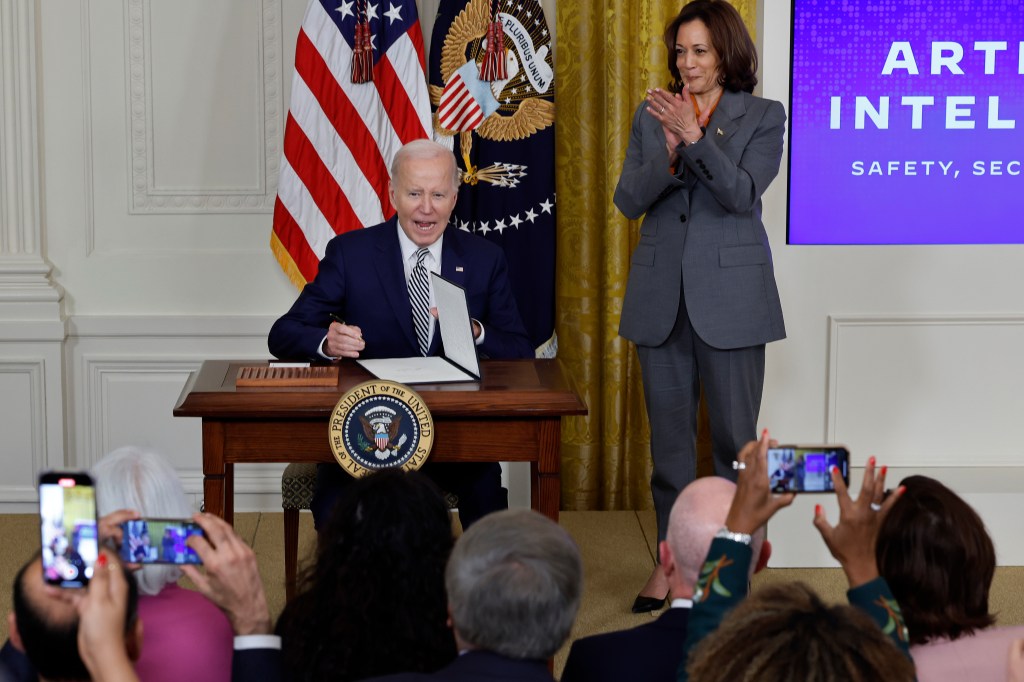

Guidance to help AI developers evaluate and mitigate the risks stemming from generative AI and dual-use foundation models has been issued by the US Department of Commerce. The measures, which follow work by the National Institute of Standards and Technology (NIST), were announced 270 days after President Biden issued his Executive Order (EO) on the Safe, Secure and Trustworthy Development of AI.

The new guidance and software aim to help improve the safety, security and trustworthiness of artificial intelligence (AI) systems, and arise from the work of the US AI Safety Institute.

Adversarial attacks

NIST just launched a software package designed to measure how adversarial attacks can degrade the performance of an AI system.

It was created, the Commerce announcement notes, because one of the vulnerabilities of an AI system is the model at its core. A model learns to make decisions by being exposed to large amounts of training data. But if adversaries poison that training data with inaccuracies, the model can make incorrect, potentially disastrous, decisions.

“Testing the effects of adversarial attacks on machine learning models is one of the goals of Dioptra, a new software package aimed at helping AI developers and customers determine how well their AI software stands up to a variety of adversarial attacks,” NIST says.

The open-source software is available for free download. It is designed to help the community (including government agencies and small- to medium-sized businesses) conduct evaluations to assess AI developers’ claims about their systems’ performance by allowing a user to determine what sorts of attacks would make the model perform less effectively.

The software also quantifies the performance reduction, so that the user can learn how often and under what circumstances the system would fail.
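To make the idea of quantifying a performance reduction concrete, here is a minimal toy sketch in Python. It does not use Dioptra or reflect its API. The classifier, the data, and the helper names (`train_centroids`, `flip_labels`, and so on) are all hypothetical and chosen for illustration only. It trains a very simple model on clean data, retrains it after an adversary flips some training labels, and reports the drop in test accuracy.

```python
from statistics import mean

def train_centroids(samples):
    """Fit a nearest-centroid classifier: one mean feature value per label."""
    by_label = {}
    for x, label in samples:
        by_label.setdefault(label, []).append(x)
    return {label: mean(xs) for label, xs in by_label.items()}

def predict(centroids, x):
    """Return the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

def accuracy(centroids, samples):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(1 for x, label in samples if predict(centroids, x) == label)
    return correct / len(samples)

def flip_labels(samples, indices):
    """Simulate label-flipping poisoning on a binary (0/1) training set."""
    poisoned = list(samples)
    for i in indices:
        x, label = poisoned[i]
        poisoned[i] = (x, 1 - label)
    return poisoned

# Hypothetical one-dimensional data: (feature, label) pairs.
train = [(1, 0), (2, 0), (3, 0), (4, 0), (6, 1), (7, 1), (8, 1), (9, 1)]
test = [(1.5, 0), (2.5, 0), (3.5, 0), (4.5, 0),
        (5.5, 1), (6.5, 1), (7.5, 1), (8.5, 1)]

# Baseline: train on clean data.
clean_acc = accuracy(train_centroids(train), test)

# Attack: the adversary relabels the training points 6 and 7 as class 0.
poisoned_train = flip_labels(train, [4, 5])
poisoned_acc = accuracy(train_centroids(poisoned_train), test)

reduction = clean_acc - poisoned_acc
print(f"clean accuracy:    {clean_acc:.3f}")
print(f"poisoned accuracy: {poisoned_acc:.3f}")
print(f"reduction:         {reduction:.3f}")
```

In this toy case, flipping two of the eight training labels shifts the decision boundary enough to misclassify one of the eight test points. Repeating the measurement across different attacks and attack strengths is, in spirit, how a user could learn how often and under what circumstances a system fails.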

Patents and emerging tech

In addition, Commerce’s US Patent and Trademark Office (USPTO) issued a guidance update on patent subject matter eligibility to address innovation in critical and emerging technologies, including AI.

USPTO’s guidance update is designed to assist USPTO personnel and stakeholders in determining the subject matter eligibility of AI inventions under patent law.

This latest update builds on previous guidance by providing further clarity and consistency to how the USPTO and applicants should evaluate subject matter eligibility of claims in patent applications and patents involving inventions related to AI technology.

The guidance update also announces three new examples of how to apply this guidance across a wide range of technologies.

Managing the risks of GenAI

NIST also published the AI RMF Generative AI Profile (NIST AI 600-1) to help organizations identify the unique risks posed by generative AI. The profile proposes actions for generative AI risk management that best align with an organization's goals and priorities. The guidance centers on a list of 12 risks and just over 200 actions that developers can take to manage them.

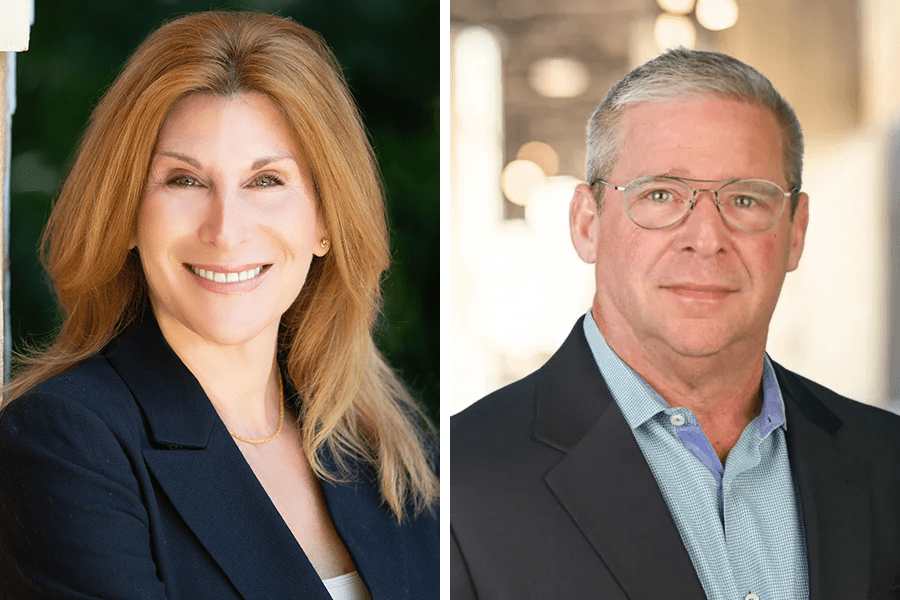

NIST Director, Laurie E. Locascio

The 12 risks include a lowered barrier to entry for cybersecurity attacks, the production of mis- and disinformation or hate speech and other harmful content, and generative AI systems confabulating or “hallucinating” output. After describing each risk, the document presents a matrix of actions that developers can take to mitigate them, mapped to the AI RMF.

Dual-use foundation models

Another document acknowledges that AI foundation models are powerful tools that are useful across a broad range of tasks and are sometimes called “dual-use” because of their potential for both benefit and harm.

NIST’s US AI Safety Institute released the initial public draft of its guidelines on Managing Misuse Risk for Dual-Use Foundation Models to offer voluntary best practices for how foundation model developers can protect their systems from being misused to cause deliberate harm to individuals, public safety and national security.

The draft guidance offers seven key approaches for mitigating the risks that models will be misused, along with recommendations for how to implement them and how to be transparent about their implementation.

“Together, these practices can help prevent models from enabling harm through activities like developing biological weapons, carrying out offensive cyber operations, and generating child sexual abuse material and non-consensual intimate imagery,” the press release notes.

Global engagement on AI standards

The final publication addresses the global reach of AI systems, which are transforming societies around the world. A Plan for Global Engagement on AI Standards (NIST AI 100-5) is designed to drive the worldwide development and implementation of AI-related consensus standards, cooperation and coordination, and information sharing.

“AI is the defining technology of our generation, so we are running fast to keep pace and help ensure the safe development and deployment of AI. Today’s announcements demonstrate our commitment to giving AI developers, deployers, and users the tools they need to safely harness the potential of AI, while minimizing its associated risks,” said US Secretary of Commerce Gina Raimondo.

“For all its potentially transformational benefits, generative AI also brings risks that are significantly different from those we see with traditional software,” said Under Secretary of Commerce for Standards and Technology and NIST Director Laurie E. Locascio.

“These guidance documents and testing platform will inform software creators about these unique risks and help them develop ways to mitigate those risks while supporting innovation.”

Agency guidance on AI

As a refresher, here are some other pieces of AI guidance issued by US regulatory agencies this past year:

- FINRA issued Regulatory Notice 24-09 in June to remind members of regulatory obligations when using generative AI and large language models.

- In May, FINRA disseminated two Rule 2210 Interpretive Guidance documents to emphasize the need for strict oversight of chatbot interactions and AI-generated content that customers use and see.

- In January 2024, the SEC issued an Investor Alert on AI and investment fraud, discussing the growing concern with bad actors exploiting the AI hype to manipulate markets and deceive investors.

- A proposed SEC rule, intended to address conflicts of interest caused by artificial intelligence and other technology, has received pushback since being proposed last July. It is expected to be finalized this year, albeit in a new and likely watered-down form.

- In May, the Commodity Futures Trading Commission’s (CFTC) Technology Advisory Committee released a report outlining recommendations on the responsible integration of AI in financial markets.

- The Federal Trade Commission (FTC) this month sought details from several financial services firms on how they use people’s personal data to sell them a product at a price different from the one other consumers might see.