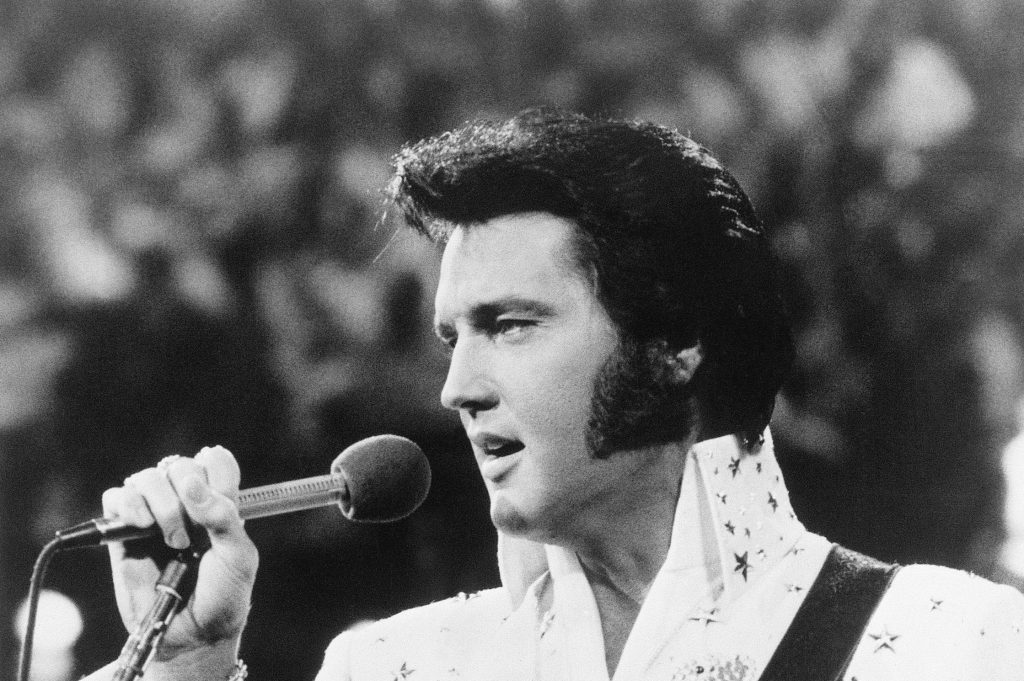

Tennessee recently amended its right of publicity statute with passage of the Ensuring Likeness, Voice and Image Security (ELVIS) Act of 2024. The existing law already protected individuals’ rights in their image and likeness, but as amended, the new law will specifically call out voice as another protected element.

Indeed, it will become the first right of publicity law to address the copying of someone’s likeness or voice with AI technologies, and it does so in two ways.

Beginning July 1, the ELVIS Act will prohibit distributing software, tools and algorithms whose “primary purpose” is reproducing someone’s likeness or voice. The law previously prohibited infringing on someone’s likeness, but the amended version now prohibits publishing, transmitting or otherwise making available someone’s likeness or voice without the individual’s authorization as well.

The ELVIS Act also now protects someone’s “voice,” which broadly includes a “sound attributable to” a person. This could be their actual voice, or a simulated version of it.

The impetus behind it is protecting people (celebrities and others) from AI-generated versions of their voice and likeness, which people and businesses have exploited for marketing purposes and for more deceitful purposes, such as financial scams and disinformation campaigns in elections.

8 contenders line up for ETH ETFs

There are now eight initial registration forms for ether ETFs on file with the SEC, according to an email update from Axios. These S-1 applications are the next step of the regulatory process after last month’s listing approval, and the prospective issuers filed them all last week.

The eight contenders are Bitwise, Fidelity, Franklin Templeton, Invesco/Galaxy, BlackRock’s iShares, Grayscale, 21 Shares, and VanEck. They were not the only ones to try; other would-be issuers dropped out, such as Hashdex and Cathie Wood’s Ark Invest (which offers a bitcoin ETF in partnership with 21 Shares).

So far, Franklin Templeton is the only one to disclose its fee – 0.19% – a notably low price that matches the fee for its bitcoin ETF. BlackRock’s iShares Ether ETF has already lined up investors before the launch date, and Fidelity is using its own in-house custodian and index service providers instead of using vendors such as Coinbase or Kraken’s CF Benchmarks.

AI employees air concerns about the tech

A group of employees in the artificial-intelligence industry submitted a letter saying they are deeply concerned about AI’s threat to humanity. They also feel stifled in their efforts to fully communicate their concerns because of confidentiality agreements, a lack of whistleblower protections and the fear of retaliation.

More than a dozen current and former employees of OpenAI, Google’s DeepMind and Anthropic said AI companies need to create reporting channels for employees to safely voice concerns within their companies and to the public. Three leading AI experts endorsed the letter: AI scientist Stuart Russell, plus Yoshua Bengio and Geoffrey Hinton, pioneers of the technology who are credited with early breakthrough research.

In response to the letter, OpenAI said it agrees there should be government regulation.

Google’s college cyber clinics

Google just announced it is providing $15m to create 15 new cybersecurity clinics at universities across the country, building on the 10 that have already received funding and mentoring from the company.

Cybersecurity clinics offer students the opportunity to hone their skills while providing local organizations with tools to protect themselves from potential cyber threats.

For example, students from the Google-supported clinic at Indiana University are helping local fire department employees put a plan in place in case their online communications are compromised. And at Rochester Institute of Technology, students helped their local water authority review and improve its IT security configurations across its sites for threat detection and response processes.

The University of California at Berkeley, the University of Texas at El Paso, and Spelman College are a few of the other participating schools.

NY targets social media content directed at teens

New York plans to prohibit social media companies from using algorithms to steer content to children without parental consent under a tentative agreement reached by state lawmakers.

The legislation is aimed at preventing social media companies from serving automated feeds to minors, which lawmakers say sometimes direct children to violent and sexually explicit content. The bill, which is expected to be voted on this week and signed by Governor Kathy Hochul, would also prohibit platforms from sending minors notifications during overnight hours without parental consent.

The Senate’s draft of the bill, the Stop Addictive Feeds Exploitation (SAFE) for Kids Act, claims that minors are uniquely vulnerable to the addictive quality of social media platforms.

“Children are particularly susceptible to addictive feeds because they provide a non-stop drip of dopamine with each new piece of media and because children are less capable of exercising the impulse control necessary to mitigate these negative effects,” the measure says.

The call for bolstered online protections for children gained momentum in the aftermath of revelations in 2021 from then-Facebook whistleblower Frances Haugen, who released internal research showing that the company understood the danger that Facebook-owned Instagram posed for some teen girls.

Industry groups have raised questions about the constitutionality of the proposal, saying it infringes on the First Amendment rights of teens to access lawful information.

In Ohio, a federal judge earlier this year temporarily blocked a law that prohibits online platforms from creating accounts for users under 16 unless they obtain parental consent, saying the legislation is likely unconstitutional.