The public release of ChatGPT last November thrust AI into the mainstream, and with it a conversation about the technology’s implications and how to regulate its use. Efforts to get a handle on the issue were given greater impetus this week as a UK Parliamentary committee set out 12 challenges of AI governance, and the co-founder of DeepMind, Mustafa Suleyman, called for restrictions on the sale of Nvidia chips as a means of controlling the use of AI.

In the UK, the House of Commons Science, Innovation, and Technology Committee (SITC) published an interim report this week calling on the government to step up its efforts to implement a regulatory regime for AI or risk the UK being left behind.

The report comes as the UK prepares to host the Global AI Safety Summit at Bletchley Park, once home to the country’s codebreaking operation. The government says the summit will build on ongoing work at international forums including the OECD, Global Partnership on AI, Council of Europe, and the UN and standards-development organisations, as well as the recently agreed G7 Hiroshima AI Process.

“International collaboration is the cornerstone of our approach to AI regulation, and we want the summit to result in leading nations and experts agreeing on a shared approach to its safe use,” Technology Secretary Michelle Donelan said. “AI is already improving lives from new innovations in healthcare to supporting efforts to tackle climate change, and November’s summit will make sure we can all realise the technology’s huge benefits safely and securely for decades to come.”

The SITC report wants AI-specific legislation to be put before the next session of Parliament as a matter of priority. It says: “A tightly focused AI Bill in the next King’s Speech would help, not hinder, the Prime Minister’s ambition to position the UK as an AI governance leader. Without a serious, rapid, and effective effort to establish the right governance frameworks – and to ensure a leading role in international initiatives – other jurisdictions will steal a march and the frameworks that they lay down may become the default even if they are less effective than what the UK can offer.”

The report lays out 12 challenges of AI governance that must be met:

- Bias: AI can introduce or perpetuate biases that society finds unacceptable.

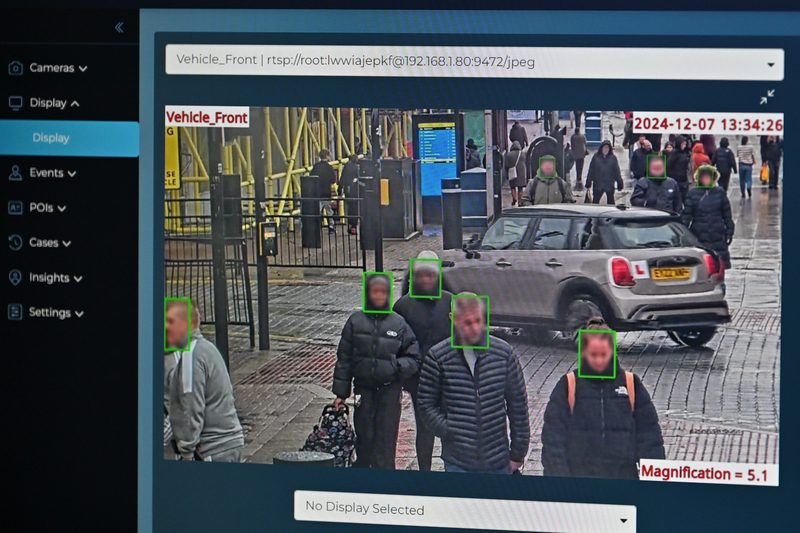

- Privacy: AI can allow personal information about individuals to be used in ways the public does not accept.

- Misrepresentation: AI can generate material that deliberately misrepresents behaviour, opinions, or character.

- Access to data: Just a few organizations hold the very large datasets AI models need.

- Access to compute: AI requires significant compute power, access to which is limited to a few organizations.

- Black box: Some AI models and tools cannot explain why they produce a particular result, which is a challenge to transparency requirements.

- Open-source: Openly available code may promote transparency and innovation; allowing code to be proprietary may concentrate market power but allow more dependable regulation of harms.

- Intellectual property and copyright: Policy must establish the rights of the originators of content, and these rights must be enforced.

- Liability: If AI models and tools are used by third parties to do harm, policy must establish whether developers or providers of the technology bear any liability.

- Employment: Policy makers must anticipate and manage the disruption AI will cause to the jobs people do and to the jobs available to them.

- International coordination: The development of governance frameworks to regulate the use of AI must be an international undertaking.

- Existential: Some people think that AI is a major threat to human life. If that is a possibility, governance needs to provide protections for national security.

In its summary of the report, which can be read in full on the UK Parliament website, the SITC says: “AI models and tools are capable of processing increasing amounts of data, and this is already delivering significant benefits … However, they can also be manipulated, provide false information, and do not always perform as one might expect in messy, complex environments – such as the world we live in.

“The challenges highlighted in our interim Report should form the basis for discussion, with a view to advancing a shared international understanding of the challenges of AI—as well as its opportunities.”

US, UK and China

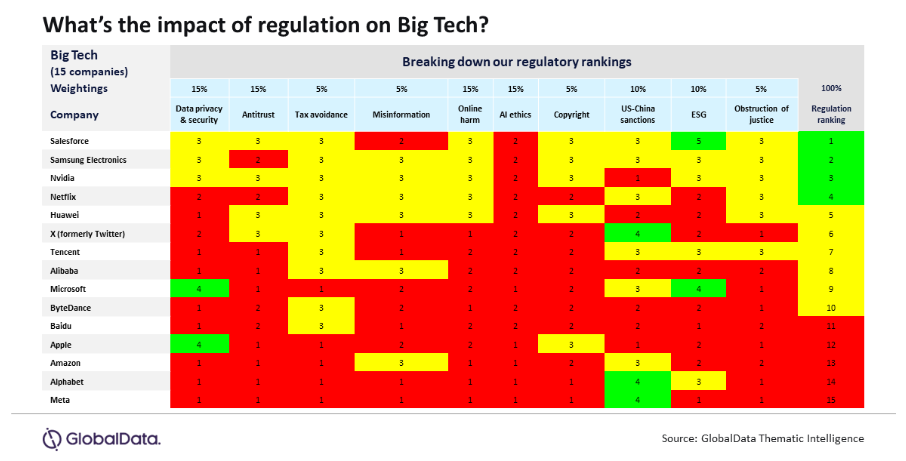

The US and EU seem to be ahead of the UK on regulation. For its part, the Chinese government must strike a delicate balance: maintaining regulatory oversight of the tech sector while innovating fast in the technologies most under pressure from the US, according to GlobalData.

“Ad-funded internet companies treating data as a free resource – like Meta, Alphabet, Amazon, and Baidu – face the highest regulatory risk. While Microsoft and Apple are less at risk from data privacy issues, they are both highly exposed to antitrust regulation and meet the criteria (alongside Amazon, Meta, and Alphabet) to qualify as ‘gatekeepers’ under the EU’s new antitrust legislation,” says Laura Petrone, Principal Analyst at GlobalData.

“All companies investing in AI will face significant scrutiny by regulators in AI ethics, but Big Tech again has the most at stake. The EU’s AI Act has the potential to hold providers of foundation models, which create content from limited human input (eg, ChatGPT), accountable for assessing and mitigating possible risks. Should the US and the UK align with Brussels’ approach, companies like OpenAI, Microsoft, Alphabet, and Meta will be deemed responsible for how their systems are used, even if they have no control over specific applications of the technology.”

The Ada Lovelace Institute raised concerns in July about UK plans to become an AI powerhouse, saying “large swathes of the UK economy are currently unregulated or only partially regulated. It is unclear who would be responsible for implementing AI principles in these contexts”.

Meanwhile, DeepMind co-founder Mustafa Suleyman, now chief executive of AI startup Inflection, told the Financial Times in an interview that the US should move to leverage its leadership in the semiconductors market to create a “choke point” to enforce minimum standards in AI.

Nvidia chips

He said that the manufacturers of Nvidia chips should be required to sign up to a set of standards on the safe and ethical use of the technology. These would fit in with the voluntary undertakings made by seven leading tech companies to the White House in July.

Suleyman also wants those developing AI technology to shoulder “the burden of proof” to show that the consequences of the tech they are developing will not be harmful. “We’ll want to evaluate what the potential consequences are rather than doing it after the fact,” he said, referencing the development of social media as an example of how not to let matters develop.

He also warned of the dangers of being distracted by a focus on superintelligence – the stuff of many a science fiction fantasy – rather than the consequences of more practical but less eye-catching developments.