The UK Information Commissioner’s Office (ICO) has published the first phase of its guidance on biometric data for public consultation. Here we set out the essential elements from the draft guidance for controllers and processors of biometric data.

What is biometric data?

The ICO describes biometric data in its draft guidance as personal data which:

- relates to an individual’s behavior, appearance or observable characteristics (such as fingerprints, face or voice);

- has been extracted or analysed using technology; and

- can uniquely identify the person it relates to.

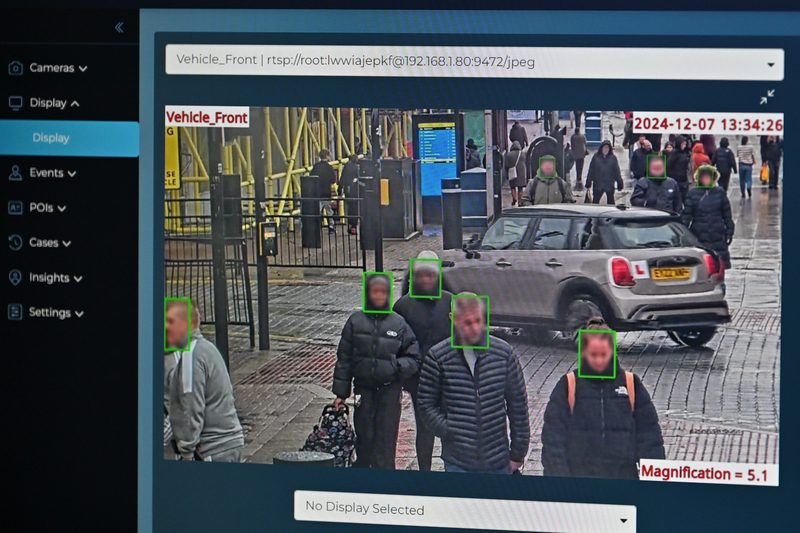

The main risks of using biometric data are that it can be discriminatory and inaccurate, and that it can pose a security risk. The ICO further notes in its draft guidance that systems combining AI with biometric technologies are particularly susceptible to bias.

What does the guidance cover?

The draft guidance explains how data protection law applies when biometric data is used in biometric recognition systems. The ICO describes these as systems which use biometric data to identify or verify individuals. In other words, they answer the questions “who is this person?” and “is this person who they claim to be?”

In particular, the guidance covers what biometric data is, when it is considered special category data, how it is used in biometric recognition systems and the data protection requirements that apply. The guidance is aimed at organizations, both controllers and processors, that use or are considering using biometric recognition systems.

It does not cover data protection requirements for law enforcement or security services.

What are the key considerations?

- Complying with data protection law – biometric data is a type of personal data, so any processing of it must comply with data protection law.

- Explicit consent – explicit consent is likely to be the only valid condition for processing special category biometric data (that is, biometric data used for the purpose of uniquely identifying an individual). If explicit consent cannot be obtained and no other condition applies in the circumstances, the processing will be unlawful.

- ‘Data protection by design’ approach – a ‘data protection by design’ approach must be taken when using biometric data. This means that data protection and privacy issues must be considered upfront at the design stage and throughout the lifecycle of the biometric recognition system.

- DPIA – a Data Protection Impact Assessment (DPIA) is highly likely to be required because of the high risk that biometric recognition systems pose to individuals’ rights and freedoms.

- Addressing accuracy risks – the ICO states that well-developed systems should be used to minimize errors, such as wrongly matching one person to another or failing to recognize the correct person.

- Dealing with the risk of discrimination – users of biometric recognition systems must assess whether the system is likely to have a discriminatory impact on people.

- Handling security risks – appropriate security measures must be applied and a risk analysis should be carried out. The draft guidance also states that biometric data must be encrypted and that security measures must be tested regularly.

Last year, Burges Salmon published an article on the ICO fining a facial recognition firm more than £7.5m ($9.5m) for breaching UK data protection laws. Given fines of this size, and the ICO’s warning that “immature biometric technologies” could discriminate against individuals, it will be essential for controllers and processors to take note of the new draft guidance on biometric data.

For the second phase of the guidance (biometric classification and data protection), there will be a call for evidence early next year. In the meantime, the first phase consultation will run until October 20, 2023.

David Varney is a partner in the technology team at Burges Salmon and advises on a range of data protection, technology, intellectual property and commercial matters. Emily Fox is a trainee solicitor in the data protection team at Burges Salmon.