The UK's Financial Conduct Authority (FCA) is examining the possibility of making the market data it has collected available to vendors of financial crime surveillance technology. The move comes amid concerns that the rapid growth of AI could increase the dominance of big tech incumbents over smaller competitors and new entrants to the market.

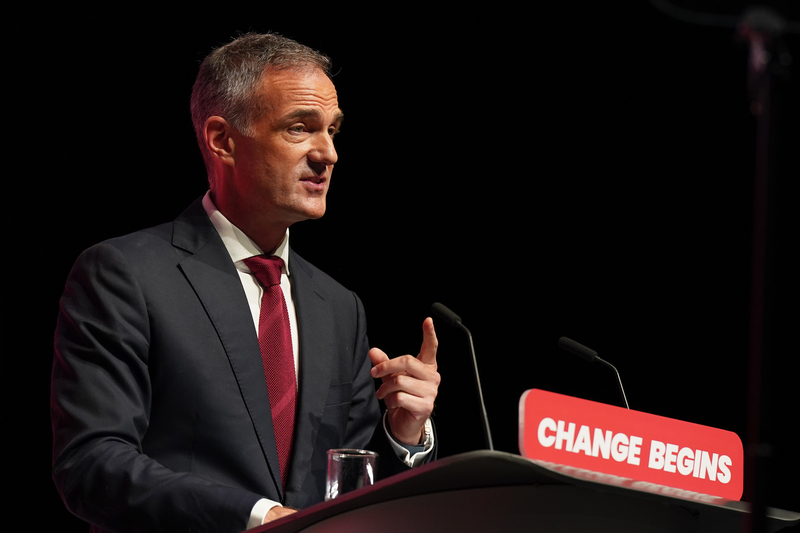

Speaking at Risk.net’s Op Risk Europe conference, Jamie Bell, the FCA’s Head of Secondary Market Oversight, said: “One of the problems that I see emerging for AI is competition, because who has access to sufficient training data becomes a really important point. And there’s a very real danger that AI will drive consolidation and anti-competitive behaviours in the industry – [and] as it happens, that’s particularly true in market surveillance.”

He continued: “We ingest half a billion trading records a day – we have data going back to 2000 at least that’s very, very rich – and I’d like to make some of that data available to help smaller vendors and academics, frankly to [help them] compete in this space.”

Rob Mason, Director of Regulatory Intelligence at our parent company Global Relay, said: “This is a massive development for vendors and particularly exchanges, whose market data is the main generator of their income.” But, he warned: “There are also material risks attached to it – even anonymised data has the potential to be unpicked by high-frequency trading tech teams who may be able to copy successful trading strategies that may still be running. It also seems likely that surveillance firms may be able to identify market abuse which has previously been missed by the FCA.”

In his speech, Bell acknowledged these challenges, saying that providing access to the FCA’s datasets throws up “very significant confidentiality challenges” and admitting: “What we absolutely don’t want to happen is for people to [be] able to reconstruct firms’ trading records going back 10 years, if we’ve done it wrong.”

Confidentiality

Some industry observers we spoke to wondered just how much help the FCA’s narrow, specialist datasets would be to the smaller firms currently moving into the regulatory space thanks to the availability of generative AI. Mason reckons the FCA “has been wanting to share data to support development of better tools all round”. But, he said, the regulator is “limited by confidentiality and tends to agonise as policing it even in a sandbox is difficult”.

He pointed out that “no one has got a big data set to run trade surveillance model testing on (unless you pay for it from an exchange)” so the FCA may simply be making a logical move. But, he said: “Generally, this does not look like a well-thought through strategy. It may breach confidentiality and upset a number of participants, so it will need to be followed carefully.”

He added: “It’s possible the FCA could offer an anonymised sandbox in which to test, but with limitations so that data cannot be exported – though that limits the value of it.”