The SEC has rejected requests from Apple and Disney to omit from their upcoming annual meetings shareholder votes on how the companies deploy artificial intelligence.

The proposals had been filed by the American Federation of Labor and Congress of Industrial Organizations, the union behemoth known as the AFL-CIO, which sent similar shareholder

Register for free to keep reading

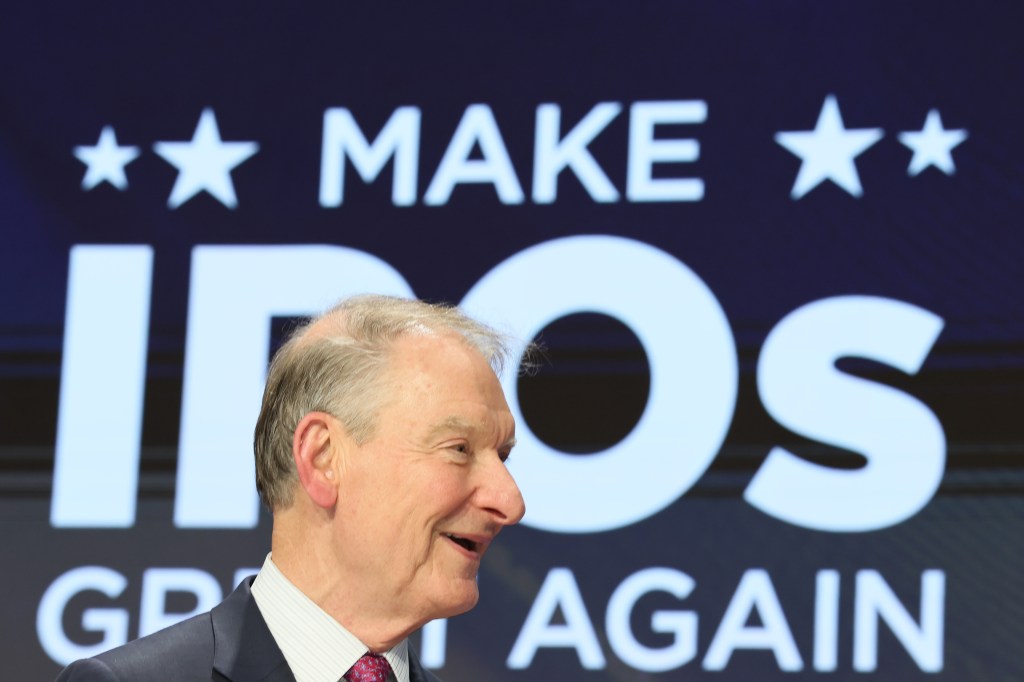

To continue reading this article and unlock full access to GRIP, register now. You’ll enjoy free access to all content until our subscription service launches in early 2026.

- Unlimited access to industry insights

- Stay on top of key rules and regulatory changes with our Rules Navigator

- Ad-free experience with no distractions

- Regular podcasts from trusted external experts

- Fresh compliance and regulatory content every day