The Netherlands is in urgent need of better AI risk management and incident monitoring, the Dutch Data Protection Authority, Autoriteit Persoonsgegevens (AP), says.

The AP’s second AI and Algorithmic Risks Report highlights growing risks from AI and algorithms, especially with the rise of generative AI. The report also identifies problems with disinformation, privacy violations and discrimination – incidents of which are increasing.

“The advance of generative AI puts additional pressure on the development of effective safeguards,” the AP states, adding that the overall risk profile calls for action.

“The more AI and algorithms are being used in society, the more incidents seem to occur. This demonstrates the need for better short-term risk management.”

Aleid Wolfsen, Chair, AP

To handle the issues – and to work towards the aim of making the Netherlands a global leader for careful control of AI and algorithms – the AP recommends a national masterplan for 2030 to include human control and oversight, secure applications and systems, and strict rules to ensure that organizations are in control of their AI usage.

Dutch masterplan

The AP says the comprehensive Dutch strategy should set goals and agreements for each year, and cover the implementation of regulations such as the AI Act.

The authority also recommends a focus on these five elements:

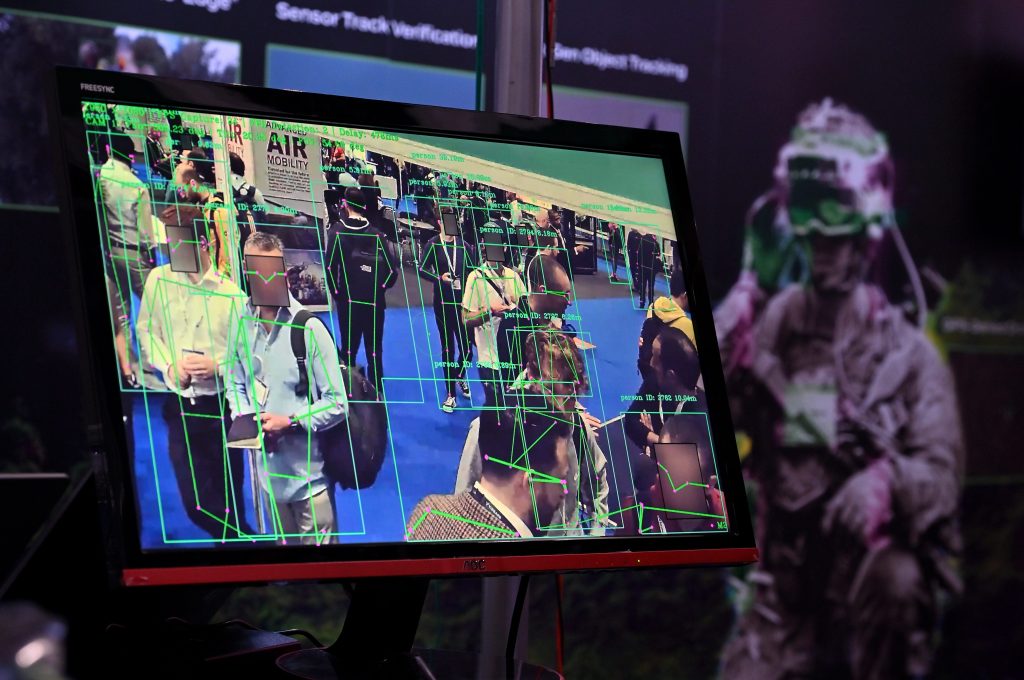

- (1) Human control – So that people have sufficient knowledge to use algorithms and AI safely, and are sufficiently protected against their risks.

- (2) Secure applications and systems – So that all impactful applications and systems using AI and algorithms are safe, including with regard to fundamental rights and public values.

- (3) Organizations in control – So that organizations are fully – and at all stages – in control of the use of AI and algorithms in their processes, and understand the consequences of their application.

- (4) National ecosystem & national infrastructure – So that AI and algorithms contribute to Dutch welfare, wellness and stability in a safe way.

- (5) International standards and cooperation – To address global interconnectedness in AI systems through global standard setting and supervision, and alignment of the EU’s AI Act with the European Charter.

“We also know that many risks and incidents remain under the radar. The use of AI and algorithms can contribute to sustainable prosperity and well-being. And it’s possible to do this in such a way that fundamental rights are well protected. But incidents undermine our trust in algorithms and AI. Adequate regulation and robust supervision are therefore necessary conditions,” said Aleid Wolfsen, Chair of the AP.

Even though the Dutch government has set out initiatives such as the 2022 Work Agenda Value-driven Digitalization [Werkagenda Waardengedreven Digitaliseren] and the 2019 Strategic Action Plan for Artificial Intelligence [Strategisch Actieplan voor Artificiële Intelligentie], the AP says that more needs to be done to face the challenges and to prepare for the masterplan.

“The Dutch government should take the next step through enriching and bringing these initiatives together and combine them with the latest developments and regulations, such as generative AI and the upcoming AI Act.”

AI and algorithms

Today, 40% of the larger companies in the Netherlands have an AI system in place, and more than 75% of all companies are believed to be planning to use AI applications in the workplace in the next five years.

About half of all Dutch people are familiar with generative AI, two-thirds of consumers find it useful to receive medical advice through it, and seven in 10 trust the text and other output created with generative AI.

Still, even though much work and production optimization can be achieved with AI, a rising number of Dutch citizens are becoming more negative about the technology. For the first time since 2019, more people believe that algorithms are bad for society (26%) than good (22%) – which goes hand in hand with the increasing awareness of algorithms. In 2019, around 45% knew about algorithms – a figure that has now risen to 70%.

“The more AI and algorithms are being used in society, the more incidents seem to occur. This demonstrates the need for better short-term risk management. With 75% of organizations in the Netherlands seeking to use AI in workforce management in the near future, workers need to be protected from negative effects,” Wolfsen added.