In this AI roundup, we look at how chatbots that send customers into “doom loops” could be outlawed and how the Commerce Department plans to add security to the AI supply chain.

Plus, and this is not a joke, we look at how OpenAI has just acknowledged that its humanlike voice interface for ChatGPT may lure some users into becoming emotionally attached to their chatbot.

Let’s start with that last item.

OpenAI’s safety analysis

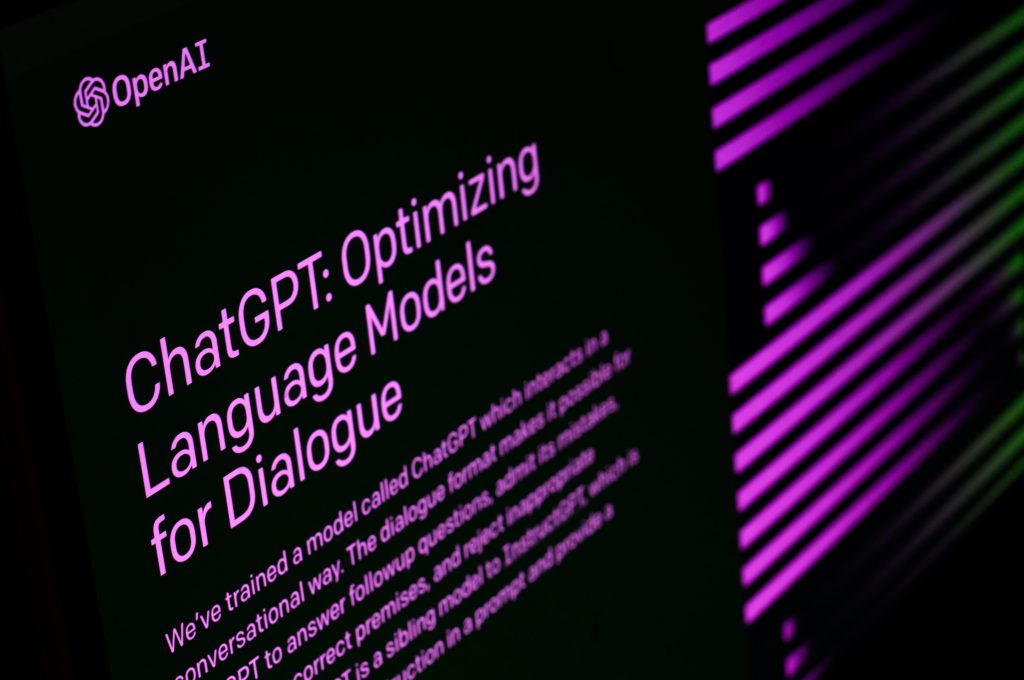

OpenAI rolled out its GPT-4o in July; in doing so, the company also issued a “system card” for GPT-4o, a technical document that lays out what the company believes are the risks associated with the model. The document also details the safety testing and the mitigation efforts the company is undertaking to reduce potential risk.

The company did so because this updated ChatGPT has an eerily humanlike voice interface, and its own safety analysis showed that the tool’s anthropomorphic voice may lure some users into becoming emotionally attached to their chatbot.

Here’s what the document said: “Anthropomorphization involves attributing human-like behaviors and characteristics to nonhuman entities, such as AI models. This risk may be heightened by the audio capabilities of GPT-4o, which facilitate more human-like interactions with the model.”

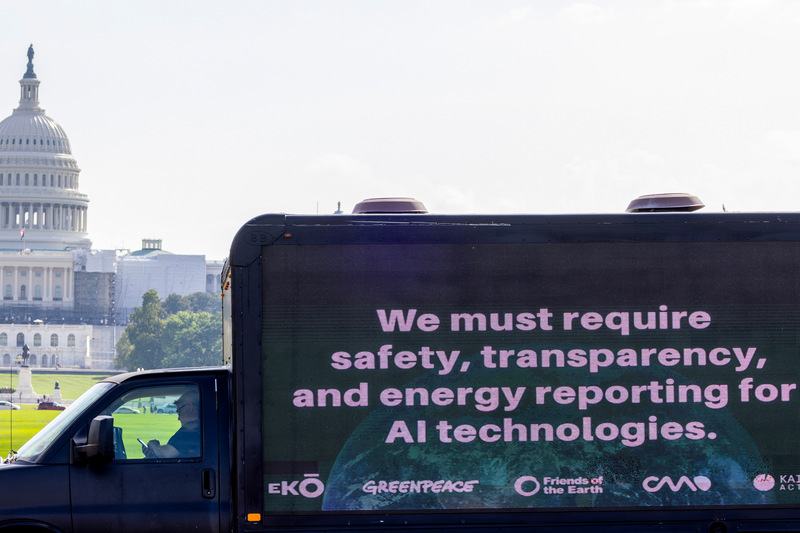

The company might have felt some pressure to publish such an analysis, including its comparatively bold statement about unsafe emotional attachment to a chatbot, because a number of employees have left OpenAI after raising concerns about AI’s long-term risks and feeling that those concerns were not being fully heeded. Lawmakers, too, have expressed concerns over the risks posed by the technology.

Although disclosing details of OpenAI’s safety regime could help reassure the public that the company takes consumer safety seriously, the disclosure is striking when you consider how we’d view such a stark risk warning from any other product or service provider.

The other risks explored in the new system card include the potential for GPT-4o to amplify societal biases, spread disinformation, and aid in the development of chemical or biological weapons.

White House directs CFPB to tackle chatbot ‘doom loop’

The Biden administration is asking the Consumer Financial Protection Bureau (CFPB) to look at banks’ use of chatbots and to address customer service “doom loops” as part of a wider effort to prioritize customers’ time.

Doom loops are the endless menu options and lengthy automated recordings you have to sit through when you call a customer support number before reaching a live representative, if indeed such a person exists.

To tackle these “doom loops,” the CFPB will initiate a rulemaking process that would require companies under its jurisdiction to let customers talk to a human by pressing a single button. The Federal Communications Commission will launch an inquiry into whether to adopt similar requirements for phone, broadband, and cable companies.

The Health and Human Services Department and Department of Labor will similarly call on health providers to make it easier to talk to a customer service agent.

Commerce addresses AI supply chain security

The Biden administration recently announced that the US Department of Commerce and tech company SK hynix have signed a non-binding preliminary memorandum of terms to provide up to $450 million in proposed federal incentives. The move comes under the CHIPS and Science Act and is intended to establish a fabrication and research and development facility for advanced packaging of high-bandwidth memory.

President Biden signed the bipartisan “Creating Helpful Incentives to Produce Semiconductors and Science Act” in 2022 to support and incentivize semiconductor manufacturing in the United States.

The proposed CHIPS investment builds upon SK hynix’s investment of approximately $3.87 billion to build a memory packaging plant in Indiana for artificial intelligence products and an advanced packaging R&D facility, creating about 1,000 new jobs and filling a critical gap in the US semiconductor supply chain.