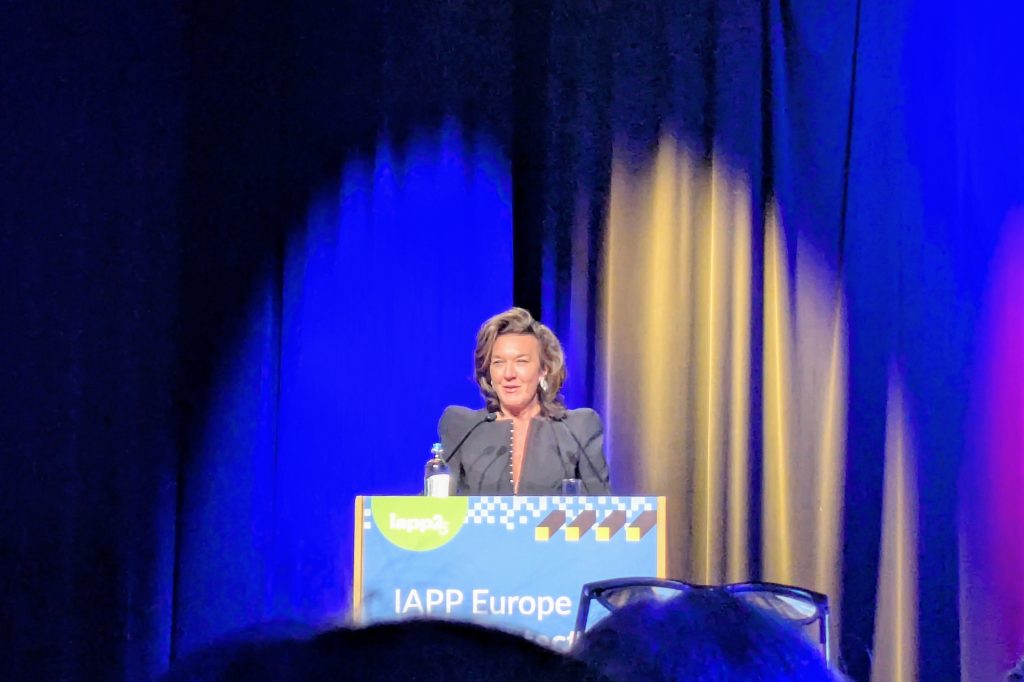

The question of how to manage personal data and delineate between the individual and the corporate has been forced centre stage by technological development. Global Relay President and General Counsel Shannon Rogers takes a look at what brought us here and where we need to go.

“But it’s clear now
