The Japanese Government has announced its intention to position Japan as “the most AI-friendly country in the world”, with a lighter regulatory approach than that of the EU and some other nations. This statement follows the Japanese Government’s recent submission of an AI bill to Japan’s Parliament, and proposals by the Japanese Personal Information Protection Commission (PPC) to amend the Japanese Act on the Protection of Personal Information (APPI) to facilitate the use of personal data for the development of AI.

On February 28, 2025, the Japanese Government submitted its Bill on the Promotion of Research, Development and Utilization of Artificial Intelligence-Related Technologies (AI Bill) to Parliament. If enacted, it would become Japan’s first comprehensive law on AI.

The AI Bill imposes only one obligation on private sector entities utilizing AI-related technology: they must “cooperate” with government-led initiatives on AI. This obligation is expected to apply to entities that develop, provide, or use AI-based products or services in connection with their business activities. It is currently unclear what this obligation to “cooperate” entails.

Develop guidelines

By contrast, the AI Bill requires the government to develop AI guidelines in line with international standards, and collect information and conduct research on AI-related technologies. Therefore, it is likely that private-sector entities will be expected to comply with these government-issued guidelines and cooperate with government-led data collection and research initiatives.

Regarding its geographic scope, the AI Bill does not explicitly provide for its application to companies outside of Japan. However, given that the Japanese Government has emphasized the importance of applying the AI law to foreign companies in its “Interim Summary” on AI regulation, it is possible that the obligation to cooperate could be extended to companies operating outside of Japan.

Moreover, the AI Bill does not provide penalties for non-compliance. However, it requires the Japanese Government to analyze cases in which the improper or inappropriate use of AI-related technologies has resulted in harm to the rights or interests of individuals, and to take necessary measures based on its findings. Therefore, in particularly egregious cases where such violations of individual rights and interests occur, it is likely that the Government will take measures, such as issuing guidance for adoption by the relevant entity or publicly disclosing its name.

Amending Data Protection Law

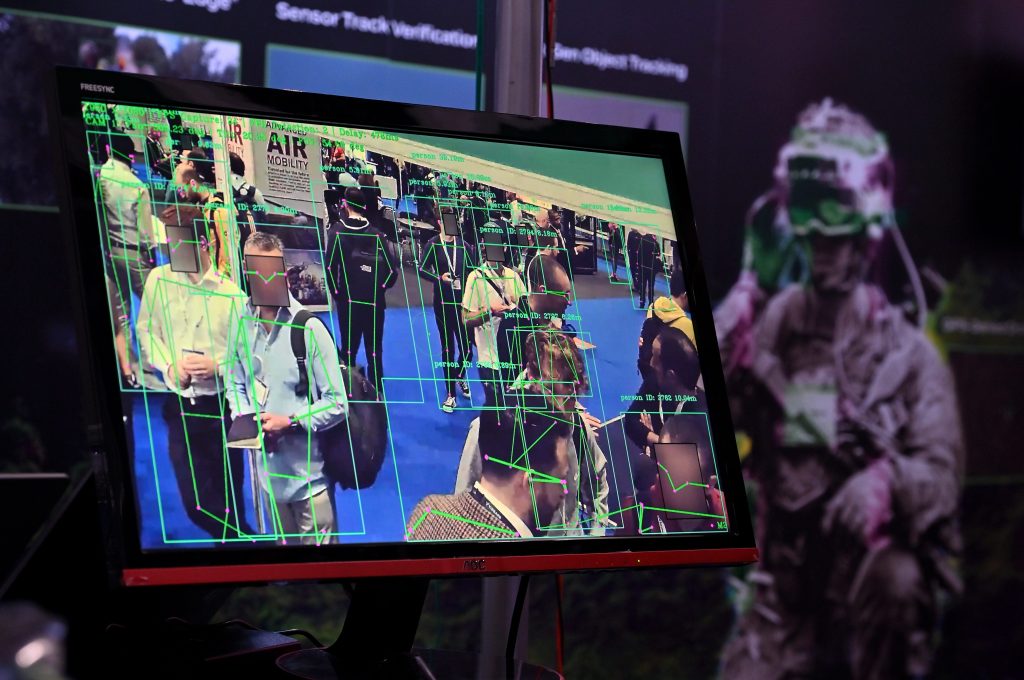

On February 5, 2025, the PPC proposed introducing exemptions to the requirement under the APPI to obtain a data subject’s consent when collecting sensitive personal data (for example, medical history or criminal record), and when transferring personal data to third parties.

The PPC is of the opinion that if personal data is used to generate statistical information or to develop AI models and the results cannot be traced back to specific individuals, the risk of infringing the rights and interests of individuals is low. Therefore, the PPC suggested that AI developers should be able to collect publicly available sensitive personal data without obtaining the consent of data subjects if the developer ensures that the personal data will only be used for the development of AI models. The PPC also noted that data controllers should be able to make such data available to AI developers without requiring the consent of the data subject.

While a specific draft amendment to the APPI has yet to be announced, the PPC’s proposals are a notable move toward the promotion of AI development through the relaxation of data protection restrictions.

Authors: Daniel Cooper, co-chair of Covington’s Data Privacy and Cybersecurity Practice; Matsumoto Ryoko; and Anna Sophia Oberschelp de Meneses, an associate in the Data Privacy and Cybersecurity Practice Group.