Artificial intelligence (or indeed any newly emerging technology) and the practice of regulating it are locked in a cat-and-mouse relationship. Technology, and the people who develop and deploy it, are the initiators, the creative force in this union. Regulators, in most cases, sit back, watch and wait, and react when they judge the time is right.

There is an argument that regulators in the EU have a far greater appetite for taking action than their counterparts elsewhere, especially when it comes to AI. The problem is that, in certain cases, they can easily end up over-regulating the technology. And this over-regulation, some experts argue, could be the reason the EU is lagging behind other regions on its AI journey.

The latest such remarks have come from Christian Klein, the head of SAP, which is widely regarded as a major powerhouse in the EU’s software market. He recently told the FT that the EU’s approach to regulating AI is undermining the development of the technology.

Klein believes it is unwise to regulate the technology itself, and that regulation should instead focus on outcomes or on the end-user. He does, however, acknowledge that Europe is starting to have useful discussions on the subject.

The bloc has spent a great deal of time on discussions, consultations and negotiations about AI regulation, and the EU AI Act is a direct result of them. It is a comprehensive piece of legislation that has now entered into force across the bloc, with its obligations applying in stages, and it is widely described as “the world’s first comprehensive AI law.”

EU approach to AI regulation

The EU AI Act is described as “a harmonised legal framework ‘for the development, the placing on the market, the putting into service and the use of artificial intelligence systems’ in the EU.”

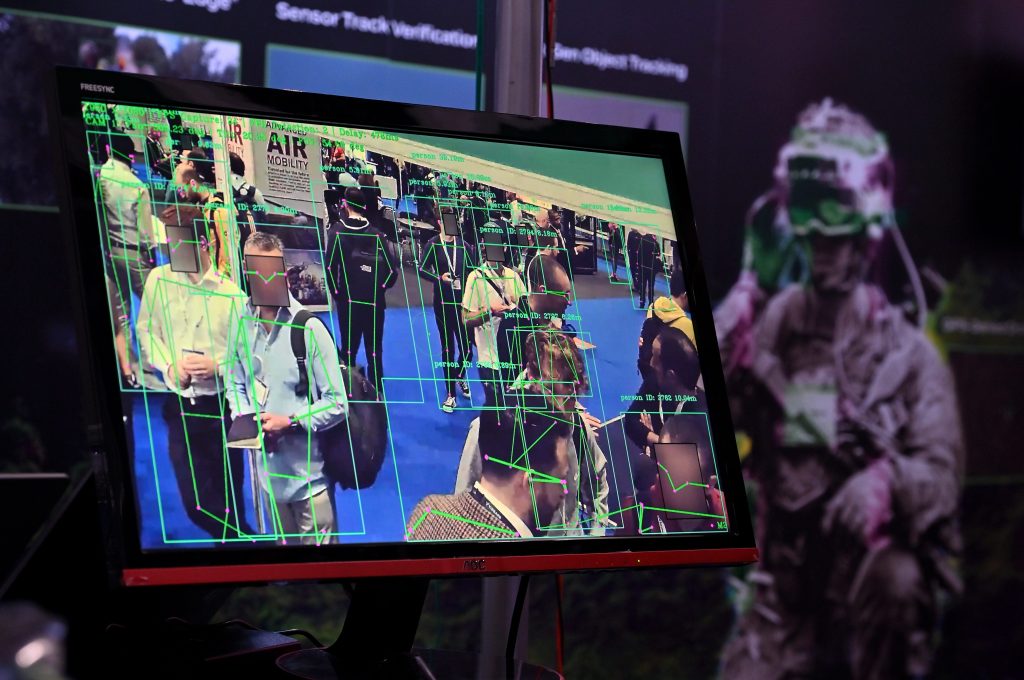

At its heart, it takes a risk-based approach to AI regulation, with the intention of banning and preventing the development and deployment of technologies that are considered “harmful, abusive and in contradiction with EU values.”

The Act categorizes AI systems according to the degree of risk they pose to individuals and society, and the compliance requirements scale with that level of risk. An article by White & Case summarises the main categories:

- Unacceptable risk: These AI systems are banned outright, because their development and deployment are considered unacceptably dangerous.

- High risk: These systems and their developers must be registered in an EU database before they can operate or be deployed in the EU. They must also comply with an extensive list of requirements related to “data training and data governance, technical documentation, record-keeping, technical robustness, transparency, human oversight, and cybersecurity.”

- Limited risk: These AI systems and their providers are generally subject to transparency obligations.

- Minimal risk: These systems are usually exempt from any specific obligations or requirements.

- General-purpose AI models: The compliance requirements for these models depend on whether or not they pose a ‘systemic risk’.

Non-compliance with the EU AI Act can carry serious consequences for AI developers and providers. Penalties scale with the severity of the breach; for the most serious infringements, firms face “a maximum financial penalty of up to €35m or 7% of worldwide annual turnover, whichever is higher”, according to White & Case.

The EU’s approach to AI revolves around three key factors: innovation, safety and trust. The European Commission says it wants the technology to develop and flourish in the service of individuals and society. However, it has also stressed that any technology or system must be safe and trustworthy, and must not harm or threaten the safety and fundamental rights of European citizens.

Argument against the EU’s approach

Experts have highlighted certain limitations in the EU’s ‘horizontal’ approach to AI regulation, in which a single set of rules applies across all sectors rather than each sector being regulated separately. Some critics trace the problems to the EU’s inability to update the AI Act quickly enough to keep pace with technological advances. Others argue that it is the EU’s risk-based approach itself that hinders the development of the technology.

According to Oxford University’s Dr Huw Roberts, the EU’s horizontal approach exempts earlier, simpler models from regulatory scrutiny, treating them as “safe.” However, as those models are developed further, their complexity and capabilities can increase very rapidly, to the point where they become capable of causing significant harm. Take ChatGPT, which at its core helps users create text. That text can be very basic, like a football chant. But as the system’s capabilities improve, the same model can be used to generate text that is both highly sophisticated and individually targeted, which could then be exploited in phishing attacks.

Another criticism of the EU’s approach is that it restricts the use of data for training AI models. Data is key to developing large language models (LLMs) that can carry out a variety of tasks, and without adequate access to it, turning basic LLMs into more capable ones becomes far more difficult. As Christian Klein says in his FT interview: “If we over-regulate using data for developing new AI in Europe, but [in the US] it is still OK, then you’re at a massive disadvantage.”

Klein’s argument is that it is far easier to train and develop LLMs in the US because data is more readily available there, and because US authorities take a more lenient regulatory approach to how that data is used.

Way forward for the EU

EU regulators and legislators appear, on the whole, more comfortable with the bloc’s current ‘human-centric’ approach to AI. As Dr Roberts puts it: “While AI technologies bring about benefits, they also pose significant risks.” It is this potential risk to individuals and society that is currently the main force shaping European regulation in this area.

Many end users of AI technologies appear to be comfortable with this approach. After all, ‘safety first’ has long been the rule of thumb.

The EU has also established platforms for cooperation and alignment with the US on AI development, deployment and regulation. For example, the EU-US Trade and Technology Council aims to help ‘coordinate approaches to key global issues, including technology’. Both sides have “reaffirmed their commitment to a risk-based approach to AI and support for safe and trustworthy AI technologies, which will help find solutions to global challenges.”

But those who work on the development side of the technology, both individuals and firms, remain deeply frustrated with the EU’s perceived caution. They feel strongly that innovation in this area is now happening elsewhere, and that EU rules are putting homegrown technologies (and technologists) at a significant competitive disadvantage vis-à-vis jurisdictions that are more willing to let entrepreneurs and companies take risks in the hope of a breakthrough.

The challenge for the bloc may well be to find a middle ground.