The SEC held its first roundtable focused exclusively on artificial intelligence in the financial industry on March 27, an event hosted at its Washington, DC headquarters and streamed live online.

The roundtable focused specifically on industry participants’ priorities and concerns. Regulators offered almost no guidance on their plans for new regulations or their enforcement priorities in this space.

Panelists included the heads of strategic initiatives and governance in the AI space at institutions including JPMorgan Chase & Co, BlackRock, Nasdaq, Citadel Securities, Edward Jones, Broadridge, Charles Schwab, Vanguard Group, Morgan Stanley and Amazon Web Services.

Members of academia (American University Washington College of Law, the University of Pennsylvania’s Wharton School, the Massachusetts Institute of Technology, and the University of Michigan) also took part, as did representatives of FINRA, the SEC, and the US Treasury Department, who mainly served as moderators.

Kicking off the event last Thursday, SEC Acting Chair Mark Uyeda described the agency’s approach to AI oversight in his introductory remarks: “We should avoid an overly prescriptive approach that can lead to quickly outdated, duplicative rules, a ‘check the box’ approach to compliance, and impediments to innovation.”

“To foster a commonsense and reasoned approach to AI and its use in financial markets and services, regulators should be engaging with innovators, technology providers, market participants, and others,” he added.

SEC Commissioner Caroline Crenshaw, for now the lone Democrat on the SEC, referred in her prepared remarks to a rule proposed in July 2023 on Predictive Data Analytics by Broker-Dealers and Investment Advisers. The proposal sought to address how financial professionals handle conflicts of interest arising from certain emerging technologies they might use in their interactions with investors.

Many industry participants, she recalled, felt the proposal’s scope was inappropriate: its definition of covered technology was seen as too broad, and much of its application appeared to overlap with Reg BI and investment advisers’ fiduciary duties. She said the AI roundtable was a chance to avoid repeating that mistake, because “when we speak about these technologies, I think we have a tendency to talk past each other.”

Balancing innovation with governance

At the core of the event was a discussion of the need for regulatory oversight to extend to AI-driven decision-making. The open question is how explicit firms should be in their client disclosures and how transparent they should be about how their algorithms are used and developed.

Industry participants did not shy away from the need for copious documentation of a firm’s use of AI, and many of them stressed the importance of their role in educating clients to reduce risk. They also felt regulators should prioritize investor education in this regard.

But many of them felt that existing rules, such as current fiduciary and best-execution obligations, could be sufficient. They also cited the ever-evolving, well-regarded technology and security standards published by the National Institute of Standards and Technology (NIST) and the International Organization for Standardization (ISO) as helpful sources of best practices and benchmarks.

AI use by financial services firms has centered largely on analyzing market patterns, executing trades more quickly and efficiently, and uncovering new investment strategies. Firms can also use generative AI to raise back-office efficiency by streamlining clearing and settlement operations and human resources functions, the panelists noted.

Appreciating the risk

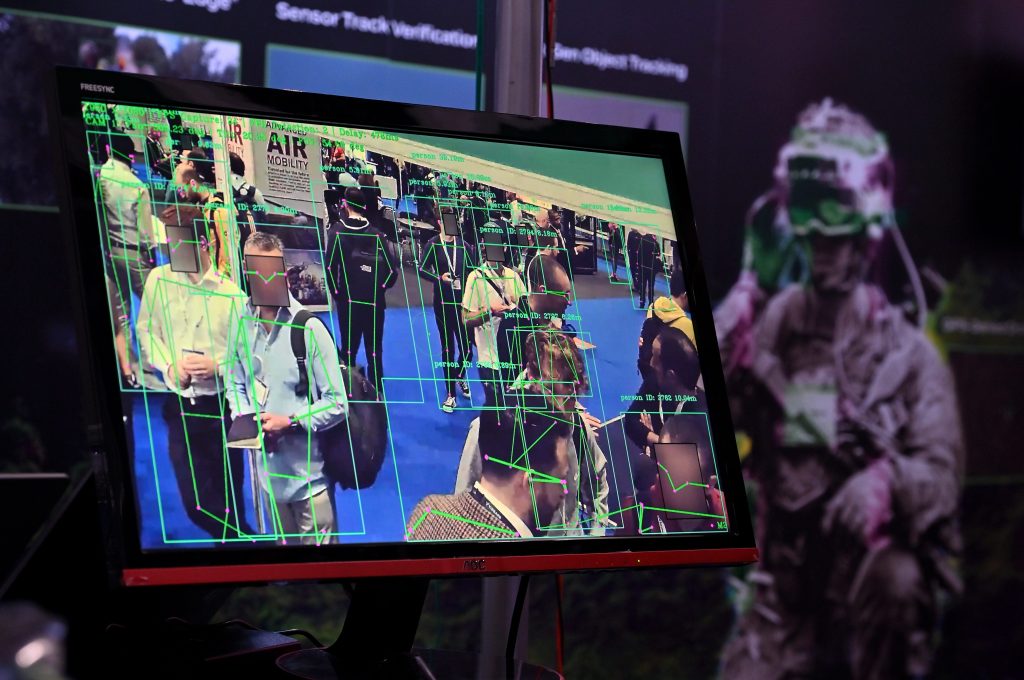

Some risks associated with AI are internal, while others are external. Although AI-enhanced anti-money-laundering tools are performing well, malicious actors are increasingly using AI to bypass authentication protocols and to defraud firms with increasingly realistic deepfake videos and images.

As the technology takes on more roles at a company, traditional risk management frameworks may be insufficient, the panelists noted.

Peter Slattery leads MIT’s AI Risk Repository, which is updated every three months. The most recent risk report included 100 new risks related to AI agents, and MIT has started a new category of multi-agent risks to track this trend.

The panelists often referred to agentic AI (also called agent AI or autonomous AI), a type of artificial intelligence that operates independently to design, execute, and optimize workflows. AI agents can make decisions, plan, and adapt to achieve predefined goals with little to no human intervention.

Even today, developers cannot see what is going on inside “black box” AI models, whose internal workings and decision-making processes are opaque, making it difficult to know how a model arrives at its output.

Tyler Derr, chief technology and product officer at Broadridge, emphasized that risk policies and procedures must be dynamically updated. “It’s not a static process,” he said. “You’re going to have to continue to evaluate it as new use cases come up.”

What concerned most of the industry participants was the prospect of AI being used primarily in client-facing functions and investment decisions without enough of a human in the loop.

But none of them believed that was happening on a large scale now, mainly because AI adoption by firms has been somewhat exaggerated.

The truth is that “many financial services companies are adopting technology at a much slower rate than that of tech companies developing and selling the technology,” said Hardeep Walia, managing director and head of AI and personalization at Charles Schwab.

And Gregg Berman, director of market analytics and regulatory structure at Citadel Securities, pointed out that humans in customer service have made mistakes for decades, and firms have always been able to spot and rectify them. Using AI tools is much the same, he said.

But Hilary Allen, professor of law at American University Washington College of Law, noted that fintech firm Klarna, after initially championing AI chatbots to handle the majority of its customer service volume, is now publicly emphasizing the importance of human agents’ interactions with customers.

Ironically, AI tools perform better with access to more data, ideally as diverse as possible, but that requires businesses to share data with fintech firms. And customer data is not the type of data businesses like to share, even if they hear others are doing it and even if sharing will make the tools more effective in the long run, noted Kristen McCooey, chief information security officer at Edward Jones.

A number of the panelists noted that their firms, including Vanguard, the Depository Trust and Clearing Corporation, and Amazon Web Services, have AI committees or working groups that consider everything from the firm’s risk appetite for AI to how soon it needs a tool to be functional, how much it will spend on it, and whether it makes more sense to build the tool in-house.

Indeed, Conan French, director of digital finance at the Institute of International Finance, said 64% of respondents in a 2023 survey on AI and machine learning use in financial services reported having an executive committee focused on AI governance or said they were in the process of defining or aligning one.

Each business must also assess whether a tool is working well, which often takes its brightest people, or at least the ones who know the data, own the problem, or typically evaluate the outputs. So no matter how much they push back, those are the people who need to be involved in these groups and discussions, cautioned Jeff McMillan, head of firmwide artificial intelligence at Morgan Stanley.

Risk-management hacks

Several of the panelists felt that existing approaches to mitigating the risk that technology presents could suffice for AI.

But Joanna Powell, managing director and head of technology, research and innovation at the Depository Trust and Clearing Corporation, said that consulting risk frameworks from well-known standards bodies, such as NIST’s AI Risk Management Framework and ISO/IEC 42001, offers helpful risk-management considerations.

Plus, businesses should consider looking to the European Union’s AI Act for ideas on what “good” looks like in terms of risk calibration, she said.

Sarah Hammer, executive director at the Wharton School, also pointed to AI for Good, established in 2017 by the International Telecommunication Union (the United Nations agency for digital technologies), as another source of helpful guidance, along with the joint effort by Bretton Woods and the Rockefeller Foundation to publish thought leadership in the AI arena.

Peter Slattery of MIT FutureTech said a sandbox for innovation is a great idea, but reining in the risk means keeping the sandbox on the periphery of the organization until the firm is comfortable bringing it in.

Hammer said the use of generative AI for legal analysis is just too risky. “Even DeepSeek hallucinates,” she said. “So you really should already be a legal expert to use this technology for this purpose.”

Hammer and a few other panelists emphasized the point that AI tools help the most highly skilled persons at an organization most of all, because they allow those people to better focus their energy and talents.

“Generative AI cannot build a human relationship,” Hammer counseled. “And this is how we do business – through relationship-building.”