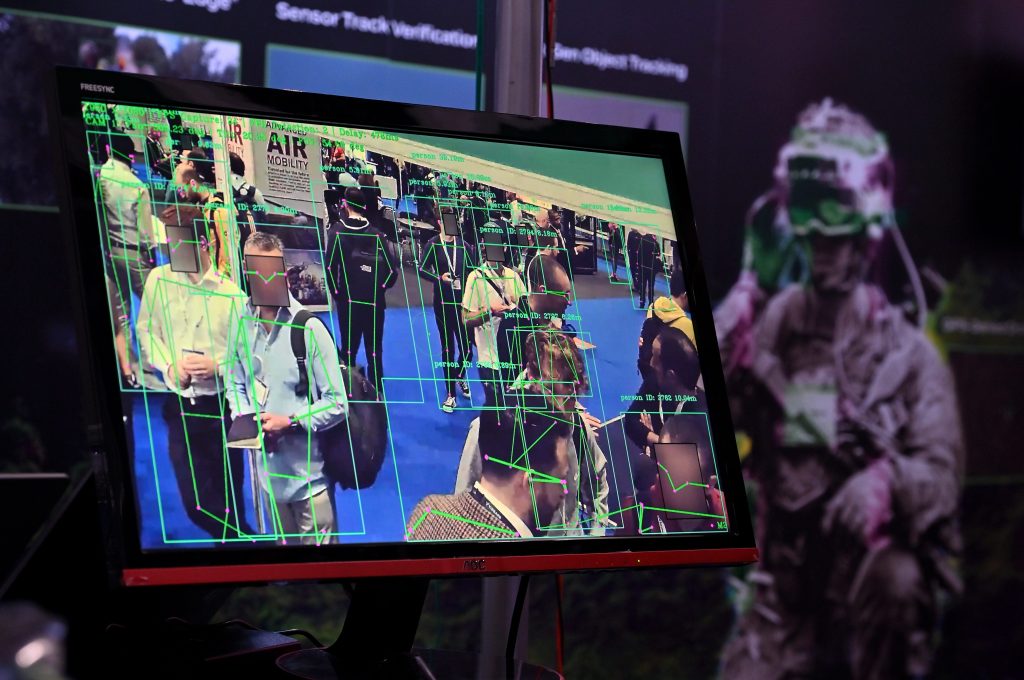

“Urgent action is needed to ensure that people are protected from the risks and harms of AI-powered decision making in the workplace, and that everyone benefits from the opportunities associated with AI at work.” That’s the view of the UK Trades Union Congress (TUC), the umbrella body representing around 5.5 million UK workers in 48 unions.

The TUC has put forward a legal blueprint in the form of an Artificial Intelligence (Regulation and Employment Rights) Bill. Measures set out in the Bill include requiring employers to consult workers before introducing AI systems for “high risk” decisions, establishing the right for workers and job seekers to get a human audit of decisions made by AI, and introducing a process for vetting AI tools bought off the shelf from outside suppliers.

The suggested Bill has been drafted by Robin Allen KC and Dee Masters of the AI Law Consultancy at Cloisters chambers, and comes out of the work of a taskforce set up in September 2023 by the TUC, the AI Law Consultancy and the Cambridge University Minderoo Centre for Technology and Democracy.

Input also came from an advisory committee that included representatives from the Ada Lovelace Institute, TechUK, the Alan Turing Institute, the CIPD, UKBlackTech and a number of individual trade unions.

Mary Towers, the TUC policy officer for employment rights, said: “Employers and businesses also need the certainty offered by regulation. And the more say workers have in how technology is used at work, the more rewarding and productive the world of work will become for us all.”

The Bill sets out some core concepts that are worth reproducing in full here.

- “The decision by an employer or its agent to deploy artificial intelligence systems for ‘high-risk decision-making’ is the trigger for most of the rights and obligations in the Bill.”

- “‘Decision-making’ means any decision made by an employer or its agent in relation to its employees, workers or jobseekers taken or supported by an artificial intelligence system.”

- “Decision-making is ‘high-risk’ in relation to a worker, employee, or jobseeker, if it has the capacity or potential to produce legal effects concerning them, or other similarly significant effects.”

So a high-risk decision could include setting performance ratings and bonuses or testing trainees, as well as more basic decisions on whether to employ or continue to employ people.

Workplace AI risk assessment

A Workplace AI Risk Assessment (WAIRA) would be introduced, and under its terms employers would not be able to undertake a high-risk decision “until a WAIRA has risk assessed an artificial intelligence system in relation to health and safety, equality, data protection and human rights”. Further, “There will need to be direct consultation with employees and workers before high-risk decision-making occurs; the WAIRA will be central to that consultation”.

The draft goes on to say: “There will be a right to personalised explanations for high-risk decisions which are or might reasonably be expected to be detrimental to employees, workers or jobseekers”, and: “Employees, workers or jobseekers will be entitled to a right to human reconsideration of a high-risk decision”.

Emotion recognition technology would be prohibited where its use is deemed detrimental to a worker, employee or jobseeker.

Fears about discrimination being embedded in AI models that replicate existing attitudes are addressed by amending rights in the Equality Act 2010, tailoring them to the use of AI systems. These include ensuring employees are not made liable for discriminatory consequences of AI systems used by their employer, and allowing employers and their agents to successfully defend a discrimination claim if they did not create or modify an AI system that has been deployed.

The draft also introduces an automatic ‘right to disconnect’ – meaning the right not to check and answer emails outside work hours – and makes it automatically unfair to dismiss an employee through “unfair reliance on high-risk decision making”. And unions would have the right to view data about their members that has been collected by employers.

Some employer bodies worry that too broad a definition of “high-risk” could limit opportunities to cut costs, and could expose small businesses in particular to the risk of unintentionally breaking the law.

But Kate Bell, the TUC’s assistant general secretary, said: “UK employment law is simply failing to keep pace with the rapid speed of technological change. We are losing the race to regulate AI in the workplace.

“AI is already making life-changing calls in the workplace, including how people are hired, performance managed and fired. We urgently need to put new guardrails in place to protect workers from exploitation and discrimination. This should be a national priority.”