Collaboration, inclusion and diversity of thought are key to developing effective regulation that will allow financial services to get the best from the opportunities offered by Artificial Intelligence (AI). That was the message the FCA’s chief data officer Jessica Rusu gave in a speech to The Alan Turing Institute’s Framework for Responsible Adoption of AI in the Financial Services Industry event.

Rusu started her speech by acknowledging the influence of the Alan Turing Institute in “advocating for positive change – for a fairer, more equitable and accessible approach to the design and deployment of technology across the UK economy”. And, she emphasized, “Regulation should not deter innovation, but rather promote fair competition, protect consumers and promote the effective functioning of markets.”

Potential of AI

Her view was that: “AI has the potential to enable firms to offer better products and services to consumers, improve operational efficiency, increase revenue, and drive innovation. All of these could lead to better outcomes for consumers, firms, financial markets, and the wider economy.” So the question was: “Is regulation necessary for the safe, responsible, and ethical use of AI? And if so, how?”

Referring to the findings of a recent survey the FCA carried out alongside the Bank of England, Machine Learning in UK Financial Services, Rusu reminded the audience that: “The findings show that there is broad agreement on the potential benefits of AI, with firms reporting enhanced data and analytic capabilities, operational efficiency, and better detection of fraud and money laundering as key positives.”

“AI applications are now more advanced and embedded in day-to-day operations, with nearly 8 out of 10 in the later stages of development.”

Jessica Rusu, Chief Data, Information and Intelligence Officer, FCA

And she said: “The survey also found that the use of AI in financial services is accelerating – 72% of respondent firms reported actively using or developing AI applications, with the trend expected to triple in the next three years. Firms also reported that AI applications are now more advanced and embedded in day-to-day operations, with nearly 8 out of 10 in the later stages of development.”

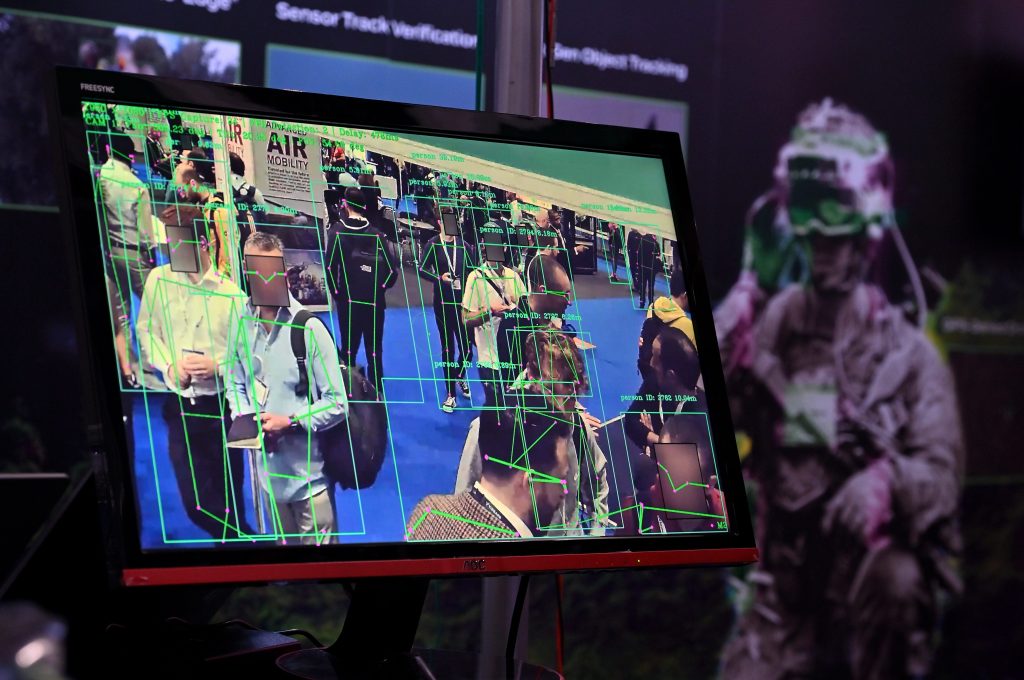

But as well as benefits, there are risks or, as Rusu put it, “novel challenges for firms and regulators”. The use of AI can “amplify existing risks to consumers, the safety and soundness of firms, market integrity, and financial stability”. And, she said, the survey showed “data bias and data representativeness identified as the biggest risks to consumers, while a lack of AI explainability was considered the key risk for firms themselves.”

Effective governance

All of which meant that: “Effective governance and risk management is essential across the AI lifecycle, putting in place the rules, controls, and policies for a firm’s use of AI.

“Good governance is complemented by a healthy organizational culture, which helps cultivate an ethical and responsible environment at all stages of the AI lifecycle: from idea, to design, to testing and deployment, and to continuous evaluation of the model.”

Rusu said the FCA considered that the Senior Managers and Certification Regime (SMCR) provided “the right framework to respond quickly to innovations, including AI”. Nonetheless, the regulator would be publishing a Call for Interest in a Synthetic Data Expert Group, to be hosted by the FCA and to run for two years, that will:

- clarify key issues in the theory and practice of synthetic data in UK financial markets;

- identify best practice as relevant to UK financial services;

- create an established and effective framework for collaboration across industry, regulators, academia and wider civil society on issues related to synthetic data; and

- act as a sounding board on FCA projects involving synthetic data.